Prometheus - Auto-deploying Consul and Exporters using Saltstack Part 3: OpenBSD

This is the third part in my ongoing series on using SaltStack to deploy Consul and Prometheus Exporters, enabling Prometheus to discover machines and services to monitor. You can view the other posts in the series below: -

All of the states (as well as those for future posts, if you want a quick preview) are available in my Salt Lab repository.

Why OpenBSD?

If you have visited this site before, you may know that I am a fan of OpenBSD. OpenBSD is built with security as its primary focus, and its base system includes applications that cover everything from high-availability firewalling and network routing to SMTP servers and much more.

The developers also consider missing or hard-to-understand documentation a bug. This means that the man pages, as well as the example configuration files (in /etc/examples), are some of the most comprehensive and clear documentation you'll find on just about any operating system.

It is possible to configure an OpenBSD machine as a network router using only the documentation provided with the system itself. This means that if you are in a remote location with no internet access, the base install contains enough information and examples to get it up and running.

Configuring OpenBSD

The OpenBSD FAQ will cover most of what you need to install OpenBSD and basic configuration tasks.

To configure a static IP in our management subnet, all we need to do is create a file called /etc/hostname.$INTERFACE_NAME (vio1 in our case) and add the following: -

inet 10.15.31.23 255.255.255.0

You can then bring up the interface using doas sh /etc/netstart vio1 (doas(1) being the privilege escalation utility in OpenBSD).
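The `inet` line above pairs the address with a dotted-quad netmask. If you generate this file from a template (for example with Salt), a small helper can derive that line from CIDR notation. The following is a minimal Python sketch using the standard library's `ipaddress` module. `hostname_if_line` is a hypothetical name, and the sketch covers only the single static IPv4 address form shown above (hostname.if(5) documents the full syntax).

```python
import ipaddress


def hostname_if_line(cidr: str) -> str:
    """Render the 'inet <address> <netmask>' line for /etc/hostname.<interface>.

    Hypothetical helper: handles a single static IPv4 address only.
    """
    # IPv4Interface keeps the host address together with its network prefix
    iface = ipaddress.IPv4Interface(cidr)
    return f"inet {iface.ip} {iface.netmask}"


if __name__ == "__main__":
    # Produces the same line as the /etc/hostname.vio1 example above
    print(hostname_if_line("10.15.31.23/24"))
```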

Installing the Salt Minion

To install the Salt Minion in OpenBSD, you can use the pkg_add(1) utility. pkg_add(1) is OpenBSD's package manager, which installs packages built from the ports(7) tree.

# Ensure the package exists
$ pkg_info -Q salt
salt-2018.3.3p4

# Install the package
$ doas pkg_add salt

After this, the Salt Minion is installed.
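The `p4` suffix in `salt-2018.3.3p4` is the OpenBSD package revision rather than part of Salt's own version number (packages-specs(7) documents the naming scheme). As a rough illustration, here is a Python sketch that splits such names into stem, upstream version, revision, epoch and flavor. The regular expression is a simplification of the real rules, and `split_package_name` is a hypothetical name.

```python
import re
from typing import Dict, Optional

# Simplified stem-version[pN][vN][-flavor] pattern: the stem ends at the
# first hyphen that is followed by a digit; pN is the package revision and
# vN the epoch. Real package names have more edge cases than this handles.
PKG_NAME_RE = re.compile(
    r"^(?P<stem>.+?)-(?P<version>\d[^-]*?)"
    r"(?:p(?P<revision>\d+))?(?:v(?P<epoch>\d+))?"
    r"(?:-(?P<flavor>.+))?$"
)


def split_package_name(name: str) -> Optional[Dict[str, Optional[str]]]:
    """Split an OpenBSD package name such as 'salt-2018.3.3p4' into its parts."""
    match = PKG_NAME_RE.match(name)
    if match is None:
        return None  # not in the expected stem-version form
    return match.groupdict()


if __name__ == "__main__":
    print(split_package_name("salt-2018.3.3p4"))
```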

Configuring the Salt Minion

As with Linux and Windows, Salt has an included minion configuration file. We replace the contents with the below: -

master: salt-master.yetiops.lab
id: openbsd-salt-01.yetiops.lab
nodename: openbsd-salt-01

The reason I have used the hostname openbsd-salt-01 rather than just openbsd-01 is because I already have a host with that name in my lab (as part of my Ansible for Networking series).

Enable and restart the Salt Minion with: -

$ doas rcctl enable salt_minion
$ doas rcctl start salt_minion
salt_minion(ok)

You should now see this host attempt to register with the Salt Master: -

$ sudo salt-key -L
Accepted Keys:
alpine-01.yetiops.lab
arch-01.yetiops.lab
centos-01.yetiops.lab
salt-master.yetiops.lab
suse-01.yetiops.lab
ubuntu-01.yetiops.lab
void-01.yetiops.lab
win2019-01.yetiops.lab
Denied Keys:
Unaccepted Keys:
openbsd-salt-01.yetiops.lab
Rejected Keys:

Accept the key with salt-key -a 'openbsd-salt-01*'. Once this is done, you should now be able to manage the machine using Salt: -

$ sudo salt 'openbsd*' test.ping
openbsd-salt-01.yetiops.lab:
    True

$ sudo salt 'openbsd*' grains.item os
openbsd-salt-01.yetiops.lab:
    ----------
    os:
        OpenBSD
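When several minions are waiting to be accepted, it can be handy to process the salt-key -L listing in a script. Below is a minimal Python sketch that groups minion IDs under each heading of the default text output; `parse_salt_key_listing` is a hypothetical name. salt-key should also honour Salt's `--out` option (for example `--out=json`), which is likely a sturdier input than scraped text.

```python
from typing import Dict, List, Optional


def parse_salt_key_listing(output: str) -> Dict[str, List[str]]:
    """Group the minion IDs printed by 'salt-key -L' under their section headings."""
    sections: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for raw_line in output.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("$"):
            continue  # skip blank lines and the shell prompt line
        if line.endswith("Keys:"):
            current = line[:-1]  # e.g. "Unaccepted Keys"
            sections[current] = []
        elif current is not None:
            sections[current].append(line)  # a minion ID under the current heading
    return sections
```

For example, `parse_salt_key_listing(text)["Unaccepted Keys"]` on the listing above would return `["openbsd-salt-01.yetiops.lab"]`.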

Salt States

We use two sets of states to deploy to OpenBSD: the first deploys Consul, and the second deploys the Prometheus Node Exporter.

Applying Salt States

Once you have configured the states detailed below, use one of the following options to deploy the changes to the OpenBSD machine: -

- salt '*' state.highstate from the Salt server (to configure every machine with every state)
- salt 'openbsd*' state.highstate from the Salt server (to configure all machines with a name beginning with openbsd, applying all states)
- salt 'openbsd*' state.apply consul.openbsd from the Salt server (to configure all machines with a name beginning with openbsd, applying only the OpenBSD Consul state)
- salt-call state.highstate from a machine running the Salt Minion (to configure just that machine with all states)
- salt-call state.apply consul.openbsd from a machine running the Salt Minion (to configure just that machine with only the OpenBSD Consul state)

You can also use the salt -C option to apply based upon grains, pillars or other types of matches. For example, to apply to all machines running an OpenBSD kernel, you could run salt -C 'G@kernel:OpenBSD' state.highstate.
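To make the matching logic concrete, here is a deliberately simplified Python model of how a compound expression built only from G@ grain matches, joined by and/or/not, evaluates against a minion's grains. It is not Salt's implementation: it ignores parentheses, nested grains, list-valued grains and the other matcher types (such as I@ for pillars), and the function names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Any, Dict


def grain_matches(grains: Dict[str, Any], spec: str) -> bool:
    """Match one 'key:pattern' grain spec, treating the pattern as a glob."""
    key, _, pattern = spec.partition(":")
    value = grains.get(key)
    return value is not None and fnmatchcase(str(value), pattern)


def compound_match(expression: str, grains: Dict[str, Any]) -> bool:
    """Evaluate G@ terms joined by 'and', 'or' and 'not' ('and' binds tighter than 'or')."""
    for alternative in expression.split(" or "):
        satisfied = True
        for term in alternative.split(" and "):
            term = term.strip()
            negate = term.startswith("not ")
            if negate:
                term = term[len("not "):].strip()
            if not term.startswith("G@"):
                raise ValueError(f"only G@ terms are supported in this sketch: {term!r}")
            if grain_matches(grains, term[2:]) == negate:
                satisfied = False  # this term fails, so the whole 'and' chain fails
                break
        if satisfied:
            return True  # any satisfied 'or' branch is enough
    return False


if __name__ == "__main__":
    openbsd_grains = {"kernel": "OpenBSD", "os": "OpenBSD"}  # illustrative values only
    print(compound_match("G@kernel:Linux or G@kernel:OpenBSD", openbsd_grains))
```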

Consul - Deployment

The following Salt state is used to deploy Consul onto an OpenBSD host: -

/srv/salt/states/consul/openbsd.sls

consul_package:
  pkg.installed:
    - pkgs:
      - consul

/opt/consul:
  file.directory:
    - user: _consul
    - group: _consul
    - mode: 755
    - makedirs: True

/etc/consul.d:
  file.directory:
    - user: _consul
    - group: _consul
    - mode: 755
    - makedirs: True

/etc/consul.d/consul.hcl:
  file.managed:
{% if pillar['consul'] is defined %}
{% if pillar['consul']['server'] is defined %}
    - source: salt://consul/server/files/consul.hcl.j2
{% else %}
    - source: salt://consul/client/files/consul.hcl.j2
{% endif %}
{% endif %}
    - user: _consul
    - group: _consul
    - mode: 0640
    - template: jinja

consul_service:
  service.running:
    - name: consul
    - enable: True
    - reload: True
    - watch:
      - file: /etc/consul.d/consul.hcl

{% if pillar['consul'] is defined %}
{% if pillar['consul']['prometheus_services'] is defined %}
{% for service in pillar['consul']['prometheus_services'] %}
/etc/consul.d/{{ service }}.hcl:
  file.managed:
    - source: salt://consul/services/files/{{ service }}.hcl
    - user: _consul
    - group: _consul
    - mode: 0640
    - template: jinja

consul_reload_{{ service }}:
  cmd.run:
    - name: consul reload
    - watch:
      - file: /etc/consul.d/{{ service }}.hcl
{% endfor %}
{% endif %}
{% endif %}

This state is very similar to the Linux states. The main difference is that because HashiCorp do not provide a Consul binary for OpenBSD, we install it from the OpenBSD package repositories (Salt's pkg.installed uses pkg_add(1) to do this).

You may notice that the user and group are prefixed with an underscore (_). This is OpenBSD's naming convention for the unprivileged system accounts that daemons run as, which should not be able to perform the same kinds of tasks as a normal user.
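The Jinja conditionals in this state decide which consul.hcl template a host receives and which service definition files are written. As an illustration only, the same decisions expressed in plain Python look like this. The function names are hypothetical, and the pillar is modelled as a nested dictionary, which is how Salt exposes it to Jinja.

```python
from typing import Any, Dict, List, Optional


def consul_hcl_source(pillar: Dict[str, Any]) -> Optional[str]:
    """Mirror the {% if %} blocks around the 'source' argument of consul.hcl."""
    if "consul" not in pillar:
        return None  # no consul pillar: the Jinja renders no source line at all
    if "server" in pillar["consul"]:
        return "salt://consul/server/files/consul.hcl.j2"
    return "salt://consul/client/files/consul.hcl.j2"


def service_definition_files(pillar: Dict[str, Any]) -> List[str]:
    """Mirror the {% for %} loop: one /etc/consul.d/<service>.hcl per listed service."""
    services = pillar.get("consul", {}).get("prometheus_services", [])
    return [f"/etc/consul.d/{service}.hcl" for service in services]
```

With the pillars used in this lab, an OpenBSD client has no `server` key, so it receives the client template and a single /etc/consul.d/node_exporter.hcl file.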

This state is applied to OpenBSD machines as such: -

base:
  'G@init:systemd and G@kernel:Linux':
    - match: compound
    - consul
    - exporters.node_exporter.systemd
  'os:Alpine':
    - match: grain
    - consul.alpine
    - exporters.node_exporter.alpine
  'os:Void':
    - match: grain
    - consul.void
    - exporters.node_exporter.void
  'kernel:OpenBSD':
    - match: grain
    - consul.openbsd
  'kernel:Windows':
    - match: grain
    - consul.windows
    - exporters.windows_exporter.win_exporter
    - exporters.windows_exporter.windows_exporter

We match the kernel grain, ensuring the value is OpenBSD: -

$ salt 'openbsd*' grains.item kernel
openbsd-salt-01.yetiops.lab:
    ----------
    kernel:
        OpenBSD

Pillars

We use the same pillars for OpenBSD as we do for Linux: -

consul.sls

consul:
  data_dir: /opt/consul
  prometheus_services:
    - node_exporter

consul-dc.sls

consul:
  dc: yetiops
  enc_key: ###CONSUL_KEY###
  servers:
    - salt-master.yetiops.lab

These pillars reside in /srv/salt/pillars/consul. They are applied as such: -

base:
  '*':
    - consul.consul-dc
  'G@kernel:Linux or G@kernel:OpenBSD':
    - match: compound
    - consul.consul
  'kernel:Windows':
    - match: grain
    - consul.consul-client-win
  'salt-master*':
    - consul.consul-server

In the first part of this series, I mentioned: -

The consul.consul pillar (i.e. /srv/salt/pillars/consul/consul.sls) is applied to all of our Linux hosts, as they all have the same data directory and the same prometheus_services.

Currently there is only one grain to match against, so we could have used match: grain. However in later posts in this series, we will match against multiple grains (requiring the compound match).

As you can see above, we now match against multiple grains, so we use the compound match to apply the pillars rather than repeating the configuration for each kernel.
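Both consul-dc.sls and consul.sls define the same top-level consul key, and Salt combines them into a single dictionary for the minion. As I understand the defaults (pillar_source_merging_strategy: smart, which behaves as a recursive merge for YAML pillars, with pillar_merge_lists disabled), nested dictionaries are merged key by key, while lists from a later file replace earlier ones. The Python sketch below models that behaviour; it is a simplification rather than Salt's code, `recursive_merge` is a hypothetical name, and enc_key is left out of the sample data.

```python
from typing import Any, Dict


def recursive_merge(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Merge two pillar dictionaries, recursing into nested dicts; override wins otherwise."""
    merged = dict(base)  # shallow copy so the inputs are not modified
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = recursive_merge(merged[key], value)
        else:
            merged[key] = value  # scalars and lists from the later pillar replace earlier ones
    return merged


# Sample data mirroring consul-dc.sls and consul.sls (enc_key omitted)
consul_dc = {"consul": {"dc": "yetiops", "servers": ["salt-master.yetiops.lab"]}}
consul_common = {"consul": {"data_dir": "/opt/consul", "prometheus_services": ["node_exporter"]}}

# The two files share no nested keys, so the merge order does not change the result here
minion_pillar = recursive_merge(consul_dc, consul_common)
```

The resulting `minion_pillar["consul"]` holds dc, servers, data_dir and prometheus_services together, which is what the Consul state's Jinja reads.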

Consul - Verification

We can verify that Consul is working with the below: -

$ consul members
Node                         Address           Status  Type    Build  Protocol  DC       Segment
salt-master                  10.15.31.5:8301   alive   server  1.7.3  2         yetiops  <all>
alpine-01                    10.15.31.27:8301  alive   client  1.7.3  2         yetiops  <default>
arch-01                      10.15.31.26:8301  alive   client  1.7.3  2         yetiops  <default>
centos-01.yetiops.lab        10.15.31.24:8301  alive   client  1.7.3  2         yetiops  <default>
openbsd-salt-01.yetiops.lab  10.15.31.23:8301  alive   client  1.7.2  2         yetiops  <default>
suse-01                      10.15.31.22:8301  alive   client  1.7.3  2         yetiops  <default>
ubuntu-01                    10.15.31.33:8301  alive   client  1.7.3  2         yetiops  <default>
void-01                      10.15.31.31:8301  alive   client  1.7.2  2         yetiops  <default>
win2019-01                   10.15.31.25:8301  alive   client  1.7.2  2         yetiops  <default>

$ consul catalog nodes -service=node_exporter
Node                         ID        Address      DC
alpine-01                    e59eb6fc  10.15.31.27  yetiops
arch-01                      97c67201  10.15.31.26  yetiops
centos-01.yetiops.lab        78ac8405  10.15.31.24  yetiops
openbsd-salt-01.yetiops.lab  c87bfa18  10.15.31.23  yetiops
salt-master                  344fb6f2  10.15.31.5   yetiops
suse-01                      d2fdd88a  10.15.31.22  yetiops
ubuntu-01                    4544c7ff  10.15.31.33  yetiops
void-01                      e99c7e3c  10.15.31.31  yetiops
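Prometheus' Consul service discovery reads this same catalog over Consul's HTTP API. To see roughly what it receives, here is a Python sketch that queries `/v1/catalog/service/<name>` on a Consul agent and builds host:port targets. It prefers the service address and falls back to the node address, which, as far as I know, mirrors how Prometheus builds `__address__`. The agent URL (`http://127.0.0.1:8500`, no ACL token) and the function names are assumptions for illustration.

```python
import json
from typing import Any, Dict, List
from urllib.request import urlopen


def targets_from_catalog(entries: List[Dict[str, Any]]) -> List[str]:
    """Turn /v1/catalog/service/<name> entries into sorted host:port strings."""
    targets = []
    for entry in entries:
        # An empty ServiceAddress means the service uses the node's address.
        # IPv6 addresses would need brackets; this sketch does not add them.
        host = entry.get("ServiceAddress") or entry["Address"]
        targets.append(f"{host}:{entry['ServicePort']}")
    return sorted(targets)


def fetch_catalog(service: str, consul_url: str = "http://127.0.0.1:8500") -> List[Dict[str, Any]]:
    """Fetch the catalog entries for one service from a Consul agent (no ACL token sent)."""
    with urlopen(f"{consul_url}/v1/catalog/service/{service}", timeout=5) as response:
        return json.load(response)
```

Running `targets_from_catalog(fetch_catalog("node_exporter"))` on the Salt master should list one entry per host in the table above, for example 10.15.31.23:9100 for the OpenBSD machine, assuming the service definitions register the Node Exporter's default port.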

Node Exporter - Deployment

Now that Consul is up and running, we will install the Prometheus Node Exporter.

States

The following Salt state is used to deploy the Prometheus Node Exporter onto an OpenBSD host: -

/srv/salt/states/exporters/node_exporter/bsd.sls

node_exporter_package:
  pkg.installed:
    - pkgs:
      - node_exporter

node_exporter_service:
  service.running:
    - name: node_exporter
    - enable: true

As you can see, there are only two parts to this. First, we install the node_exporter package using OpenBSD's package manager (pkg_add(1)). Then we enable and start the node_exporter service. Again, the Prometheus project does not release binaries for OpenBSD, so we use what is in the ports(7) tree.

This state is applied with the following: -

base:
  'G@init:systemd and G@kernel:Linux':
    - match: compound
    - consul
    - exporters.node_exporter.systemd
  'os:Alpine':
    - match: grain
    - consul.alpine
    - exporters.node_exporter.alpine
  'os:Void':
    - match: grain
    - consul.void
    - exporters.node_exporter.void
  'kernel:OpenBSD':
    - match: grain
    - consul.openbsd
    - exporters.node_exporter.bsd
  'kernel:Windows':
    - match: grain
    - consul.windows
    - exporters.windows_exporter.win_exporter
    - exporters.windows_exporter.windows_exporter

Pillars

There are no pillars in this lab specific to the Node Exporter.

Node Exporter - Verification

After this, we should be able to see the node_exporter running and producing metrics: -

# Check the service is enabled
$ rcctl ls on
check_quotas
consul
cron
library_aslr
node_exporter
ntpd
pf
pflogd
salt_minion
slaacd
smtpd
sndiod
sshd
syslogd

# Check it is listening
$ netstat -an | grep -i 9100
tcp          0      0  10.15.31.23.9100       10.15.31.254.40456     ESTABLISHED
tcp          0      0  *.9100                 *.*                    LISTEN

# Check it responds
$ curl 10.15.31.23:9100/metrics | grep -i uname

node_uname_info{domainname="yetiops.lab",machine="amd64",nodename="openbsd-salt-01",release="6.7",sysname="OpenBSD",version="OpenBSD 6.7 (GENERIC) #179: Thu May 7 11:02:37 MDT 2020 [email protected]:/usr/src/sys/arch/amd64/compile/GENERIC "} 1

All looks good!
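Rather than grepping, you could read these labels programmatically. Below is a minimal Python sketch that parses the Prometheus text exposition format just far enough to pull out the `node_uname_info` labels. It handles simple `name{labels} value [timestamp]` lines only, and the function names are hypothetical.

```python
import re
from typing import Dict, Optional, Tuple
from urllib.request import urlopen

# label="value" pairs; values may contain backslash-escaped characters
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def _unescape(value: str) -> str:
    # Undo the exposition-format escapes for backslash, double quote and newline
    return re.sub(r"\\(.)", lambda m: "\n" if m.group(1) == "n" else m.group(1), value)


def parse_sample(line: str) -> Tuple[str, Dict[str, str], float]:
    """Split one sample line into (metric name, labels, value)."""
    if "{" in line:
        name, _, rest = line.partition("{")
        label_text, _, value_text = rest.rpartition("}")
        labels = {key: _unescape(val) for key, val in LABEL_RE.findall(label_text)}
    else:
        name, _, value_text = line.partition(" ")
        labels = {}
    # A sample may carry an optional timestamp after the value; keep only the value
    return name.strip(), labels, float(value_text.split()[0])


def uname_info(metrics_text: str) -> Optional[Dict[str, str]]:
    """Return the labels of the node_uname_info sample, if present."""
    for line in metrics_text.splitlines():
        if line.startswith("node_uname_info{"):
            return parse_sample(line)[1]
    return None


def fetch_metrics(url: str) -> str:
    """Download a /metrics page as text."""
    with urlopen(url, timeout=5) as response:
        return response.read().decode("utf-8")
```

For example, `uname_info(fetch_metrics("http://10.15.31.23:9100/metrics"))["sysname"]` should give "OpenBSD" for the host above.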

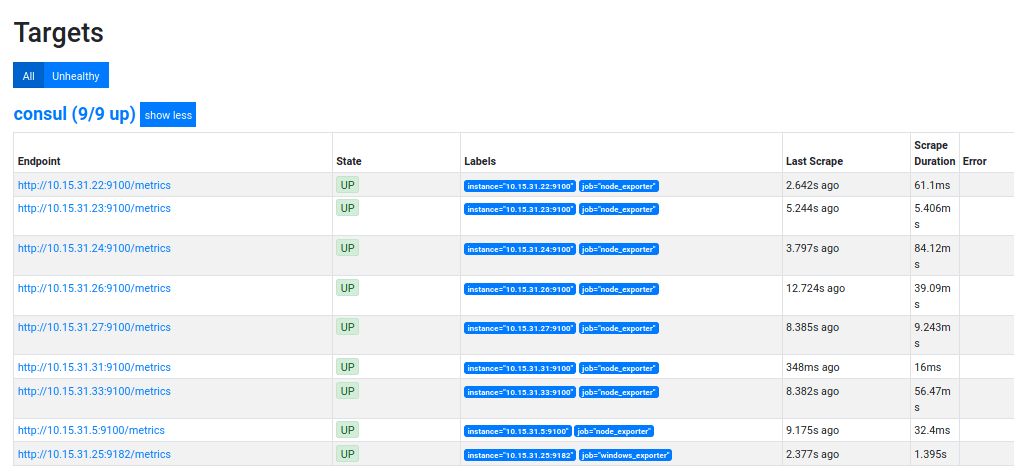

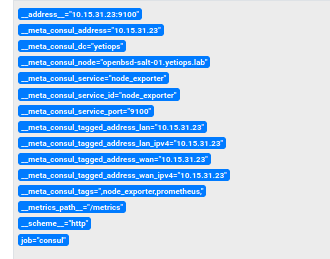

Prometheus Targets

As Prometheus is already set up (see here), and matches on the prometheus tag, we should see this within the Prometheus targets straight away: -

The second target here is the OpenBSD machine (10.15.31.23).

Above is the Metadata we receive from Consul about this host

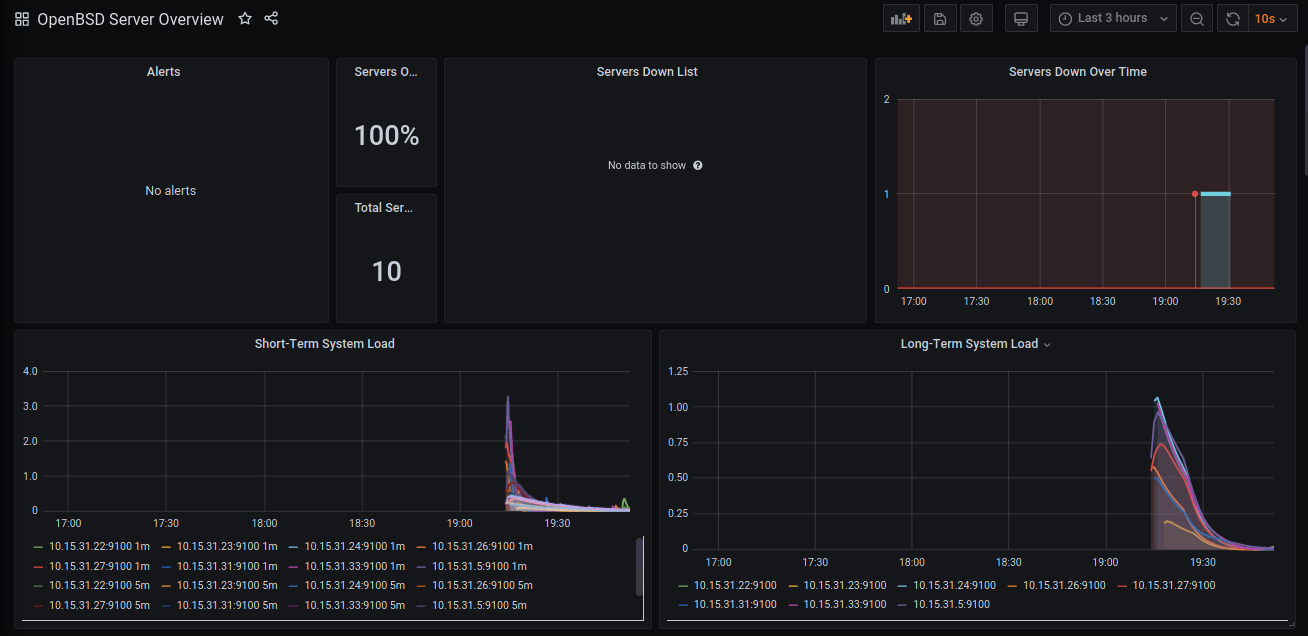

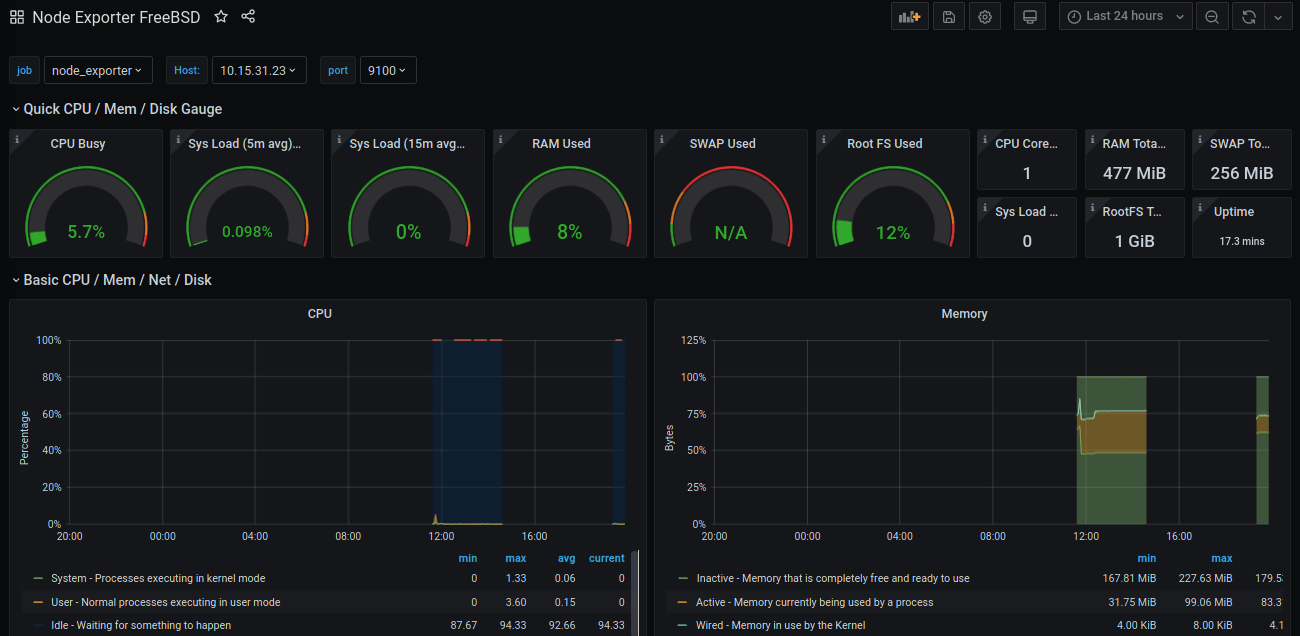

Grafana Dashboards

When I previously covered using the Prometheus Node Exporter with OpenBSD (and FreeBSD), I mentioned that load is calculated differently on BSD-based systems than on Linux. On BSD-based systems, load is a measure of CPU usage only, whereas on Linux it also takes into account disk/storage wait times and more.

Because of this, different metrics are exposed by the Node Exporter on OpenBSD. This means that you may find that standard Node Exporter dashboards give unexpected results when targeting OpenBSD.

Instead, you could use the OpenBSD Server Overview dashboard. This dashboard assumes that every host it discovers via the node_exporter is running OpenBSD. If you use it in an environment that also contains Linux and other systems, you would need to update it to use variables, or to match on labels that indicate a discovered host is running OpenBSD.

An alternative is the Node Exporter FreeBSD dashboard. While the name indicates it is solely for FreeBSD, because FreeBSD and OpenBSD calculate CPU and memory in similar ways, this dashboard will work for both.

OpenBSD Server Overview

Node Exporter FreeBSD

Summary

Being able to manage OpenBSD in the same way as Linux and Windows ensures consistency across your environment, no matter what role each machine serves in your infrastructure.

For example, you could use Linux as your primary server operating system, Windows to provide Active Directory, and OpenBSD for your security perimeter. Using something like SaltStack to deploy your configuration across all platforms ensures that your infrastructure policies (domain names, proxies, monitoring thresholds) are consistent, as they all originate from the same source of truth.

Even if you are in an OpenBSD-only environment, you still benefit from central management and automatic monitoring of new hosts, with the added bonus of needing fewer state files and pillars to manage!

In the next post in this series, we will cover how you deploy SaltStack on a FreeBSD host, which will then deploy Consul and the Prometheus Node Exporter.


2020-06-24 23:10 +0000