
Prometheus - Auto-deploying Consul and Exporters using Saltstack Part 1: Linux

Prometheus is a monitoring tool that uses a time-series database to store metrics gathered from multiple endpoints. These endpoints are either the applications themselves, or agents running alongside the applications. These agents are known as exporters. There are exporters for everything from AWS CloudWatch to Plex.

If you are a regular visitor to this site, you will have seen posts on Prometheus before. One of them covered monitoring OpenWRT, Windows, OpenBSD and FreeBSD. Since then, I have started to look into monitoring other systems, such as illumos-based operating systems.

I also wrote a post on how to use Consul to discover hosts and the services running on them, so that Prometheus can monitor them.

In addition, I have written about using SaltStack for configuration management of different operating systems.

New Series

As I am now in the process of deploying Consul and Prometheus across my workplace’s infrastructure using SaltStack, I have decided to share what I have learned in a new series. This will cover deploying across the following systems: -

- Linux (SystemD-based, as well as Alpine and Void Linux)

- OpenBSD

- FreeBSD

- Windows

- illumos (specifically OmniOS)

- MacOS

This first post will cover how to deploy SaltStack, Consul and the Prometheus Node Exporter on Linux.

You can view the other posts in the series below: -

Setting up your SaltStack environment

In my post Configuration Seasoning: Getting started with Saltstack, I covered how to set up a Salt “master” (the central Salt server that agents connect to), version control of your Salt states and pillars, and also how to use it across teams (using file-system ACLs).

The salt-master in my lab is set up exactly as per the above article (I even followed my own tutorial to create it!). From here, I will refer to it as the Salt central server.

Every machine in this lab is a KVM virtual machine, hosted on a Dell Optiplex 3020 running Ubuntu 20.04. Each machine has two network interfaces: -

- Primary - NAT to the internet for obtaining packages/updates
    - Subnet - 192.168.122.0/24
- Management - All communication between the machines (including between Salt agents and the central Salt server)
    - Subnet - 10.15.31.0/24

Deploying to Linux

This post covers how to install the salt-minion (the Salt agent that runs on a host), Consul and node_exporter on Linux. The Salt central server will also act as a Consul server rather than a client, so that all of the other Consul clients can register with it. In a production environment, it is advisable to have multiple dedicated Consul servers.

Init systems

Most Linux distributions now use SystemD for initialization (init) and managing services, but there are some exceptions. The table below lists the operating systems included in this lab, and the init system each one uses: -

| OS | init |
|---|---|
| Alpine Linux | openrc |
| Arch Linux | systemd (by default) |
| CentOS | systemd (by default) |
| Debian | systemd (by default) |
| OpenSUSE | systemd (by default) |
| Ubuntu | systemd (by default) |
| Void Linux | runit |

The reason for including Alpine is that it is very useful for smaller/appliance-style applications, and it is very popular as a container base image. I included Void Linux mainly out of curiosity!

Installing the Salt Minion

There are four main methods for installing the Salt Minion (the agent): -

- Install from the distribution’s package repositories
    - This may not be the most up to date version
- Add Salt’s package repository to your operating system (if available)
    - Latest version, but may not be packaged for your chosen distribution
- Install using PyPI (Python’s package/module repository)
- Install from source

At the time of writing, Salt (version 3000) has issues with Python 3.8. Alpine, Ubuntu and Void all come with Python 3.8. This does appear to be resolved in Salt version 3001, but it is in RC (Release Candidate) status currently rather than GA (General Availability).

The RC version is available via a separate package repository if you wish to install it. Alternatively, if you clone the Salt GitHub repository and build it, it will include the latest fixes (including Python 3.8 support).

Salt 3001 is scheduled for general availability in late June 2020. By the time you read this post, the latest version may already support Python 3.8 (and therefore Alpine, Ubuntu and Void).

Installing from the distribution’s package repositories

Below is how to install Salt from each distribution’s package repository: -

| OS | Command to install |
|---|---|
| Alpine Linux | apk add salt-minion salt-minion-openrc |
| Arch Linux | pacman -S salt |
| CentOS 7 and below | yum install salt-minion |
| CentOS 8 and above | dnf install salt-minion |
| Debian | apt install salt-minion |
| OpenSUSE | zypper install salt-minion |
| Ubuntu | apt install salt-minion |
| Void | xbps-install salt |

The version in the Ubuntu repositories is the Python 2 version, meaning that it still runs even though Ubuntu’s default Python interpreter is Python 3.8. However, Python 2 is now end-of-life, and you would also be without the latest security updates for Salt.

At the time of writing (early June 2020), the Alpine packages will install, but will not run (due to Python 3.8). Void’s package does not install due to conflicts with libcrypto, libssl, libressl and libtls. For both Alpine and Void, the best options currently are to install from Python’s PyPI or build from source.

Add Salt’s package repository

Salt provides package repositories for the following OSs: -

- Debian

- Red Hat/CentOS

- Ubuntu

- SUSE

- Fedora

- Amazon Linux

- Raspbian/Raspberry Pi OS

These can be found on the SaltStack Package Repo page, with full instructions for adding the repository and installing the relevant packages.

For Ubuntu, because of the Python 3.8 incompatibility, you can add the SaltStack Release Candidate repository instead. Please note that as it is an RC version, you run it at your own risk.

Installing using PyPI

PyPI, or the Python Package Index, is effectively the package repository for Python. For distributions without Salt in their package repositories, and without an official SaltStack repository, you can make use of PyPI. Most, if not all, Linux distributions ship with Python, many now defaulting to Python 3.

If you are familiar with Python, or at least installing packages, you will have likely used the pip tool, which installs packages/modules from PyPI. Instructions for installing pip will depend upon your distribution (this covers most common distributions).

After pip is installed, you can then install the Salt minion with the following: -

pip install salt

In some distributions, you may need to use pip3 rather than pip to ensure that the version installed uses Python 3.

As mentioned previously, Python 2 is now end-of-life, so you will want to use the Python 3 version in nearly all cases. The only time to consider the Python 2 version would be if the Python 3 version conflicts with other packages on your system. In my lab, this was only necessary for Void Linux, although I believe future versions of Salt (i.e. 3001) may address this anyway.

Install from source

To install from source, you will need to do the following: -

```shell
git clone https://github.com/saltstack/salt
cd salt
sudo python setup.py install --force
```

After this, you will need to add init scripts (e.g. SystemD units, runit service directories, OpenRC service files), examples of which can be found here.

Configuring the minion

The minion needs to know which Salt server (the “master”) to register with. I also configure the id (the host’s full hostname, including the domain) and the nodename (the hostname without the domain suffix): -

```yaml
master: salt-master.yetiops.lab
id: alpine-01.yetiops.lab
nodename: alpine-01
```

The configuration on all the machines is identical, other than changing the ID and nodename to match the host.

This configuration goes into the /etc/salt/minion file. You can either replace the contents of this file with the above, or append it to the end.
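Since only id and nodename differ between hosts, the snippet can be generated from each host's fully qualified name. Below is a minimal Python sketch of that idea; minion_config is a hypothetical helper (not part of Salt), and it assumes the nodename is always the first label of the FQDN, as in the alpine-01 example.

```python
def minion_config(fqdn: str, master: str = "salt-master.yetiops.lab") -> str:
    """Return the /etc/salt/minion snippet for one host (hypothetical helper)."""
    # Assumption: the nodename is the hostname without its domain suffix.
    nodename = fqdn.split(".", 1)[0]
    return "\n".join([
        f"master: {master}",
        f"id: {fqdn}",
        f"nodename: {nodename}",
    ]) + "\n"


if __name__ == "__main__":
    # Prints the same three lines as the Alpine example above.
    print(minion_config("alpine-01.yetiops.lab"), end="")
```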

After this, restart and enable your salt-minion service: -

- SystemD
    - Restart - systemctl restart salt-minion
    - Enable - systemctl enable salt-minion
- OpenRC (Alpine)
    - Restart - rc-service salt-minion restart
    - Enable - rc-update add salt-minion default
- Runit (Void)
    - Restart - sv restart salt-minion
    - Enable - ln -s /run/runit/supervise.salt-minion /etc/sv/salt-minion/supervise, then link the service into runit's service directory with ln -s /etc/sv/salt-minion /var/service/

Accept the minions on the Salt server

To accept the minions, run salt-key -a 'HOSTNAME*' on the Salt server, replacing HOSTNAME with the agent’s hostname (e.g. ubuntu-01). After this, you should see all of your hosts in the output of salt-key -L: -

```
$ sudo salt-key -L
Accepted Keys:
alpine-01.yetiops.lab
arch-01.yetiops.lab
centos-01.yetiops.lab
salt-master.yetiops.lab
suse-01.yetiops.lab
ubuntu-01.yetiops.lab
void-01.yetiops.lab
Denied Keys:
Unaccepted Keys:
Rejected Keys:
```
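The 'HOSTNAME*' argument is a shell-style glob matched against minion IDs, and the same style of pattern is used when targeting hosts with the salt command. The Python sketch below uses the standard library's fnmatch to show which of the accepted IDs a pattern would select; it illustrates glob behaviour under that assumption and is not a reimplementation of salt-key. Note that a loose pattern such as 's*' catches both salt-master and suse-01, which is worth checking before accepting keys in bulk.

```python
from fnmatch import fnmatch
from typing import List

# Minion IDs copied from the "Accepted Keys" output above.
ACCEPTED = [
    "alpine-01.yetiops.lab",
    "arch-01.yetiops.lab",
    "centos-01.yetiops.lab",
    "salt-master.yetiops.lab",
    "suse-01.yetiops.lab",
    "ubuntu-01.yetiops.lab",
    "void-01.yetiops.lab",
]


def match_glob(pattern: str, minion_ids: List[str]) -> List[str]:
    """Return the minion IDs selected by a shell-style glob such as 'ubuntu*'."""
    return [mid for mid in minion_ids if fnmatch(mid, pattern)]


if __name__ == "__main__":
    print(match_glob("ubuntu*", ACCEPTED))  # ['ubuntu-01.yetiops.lab']
    print(match_glob("s*", ACCEPTED))  # ['salt-master.yetiops.lab', 'suse-01.yetiops.lab']
```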

Once this is done, you can build your state files and pillars, ready to deploy Consul and node_exporter.

Salt States

In my lab environment, I have three different groups of state files for Linux. One of them covers all distributions using SystemD. The other two are for Alpine and Void specifically.

Consul - SystemD

States

The following Salt state is used to deploy Consul onto a SystemD-based host: -

/srv/salt/states/consul/init.sls

```yaml
consul_binary:
  archive.extracted:
    - name: /usr/local/bin
    - source: https://releases.hashicorp.com/consul/1.7.3/consul_1.7.3_linux_amd64.zip
    - source_hash: sha256=453814aa5d0c2bc1f8843b7985f2a101976433db3e6c0c81782a3c21dd3f9ac3
    - enforce_toplevel: false
    - user: root
    - group: root
    - if_missing: /usr/local/bin/consul

consul_user:
  user.present:
    - name: consul
    - fullname: Consul
    - shell: /bin/false
    - home: /etc/consul.d

consul_group:
  group.present:
    - name: consul

/etc/systemd/system/consul.service:
  file.managed:
    {% if pillar['consul'] is defined %}
    {% if 'server' in pillar['consul'] %}
    - source: salt://consul/server/files/consul.service.j2
    {% else %}
    - source: salt://consul/client/files/consul.service.j2
    {% endif %}
    {% endif %}
    - user: root
    - group: root
    - mode: 0644
    - template: jinja

/opt/consul:
  file.directory:
    - user: consul
    - group: consul
    - mode: 755
    - makedirs: True

/etc/consul.d:
  file.directory:
    - user: consul
    - group: consul
    - mode: 755
    - makedirs: True

/etc/consul.d/consul.hcl:
  file.managed:
    {% if pillar['consul'] is defined %}
    {% if pillar['consul']['server'] is defined %}
    - source: salt://consul/server/files/consul.hcl.j2
    {% else %}
    - source: salt://consul/client/files/consul.hcl.j2
    {% endif %}
    {% endif %}
    - user: consul
    - group: consul
    - mode: 0640
    - template: jinja

consul_service:
  service.running:
    - name: consul
    - enable: True
    - reload: True
    - watch:
      - file: /etc/consul.d/consul.hcl

{% if pillar['consul'] is defined %}
{% if pillar['consul']['prometheus_services'] is defined %}
{% for service in pillar['consul']['prometheus_services'] %}
/etc/consul.d/{{ service }}.hcl:
  file.managed:
    - source: salt://consul/services/files/{{ service }}.hcl
    - user: consul
    - group: consul
    - mode: 0640
    - template: jinja

consul_reload_{{ service }}:
  cmd.run:
    - name: consul reload
    - watch:
      - file: /etc/consul.d/{{ service }}.hcl
{% endfor %}
{% endif %}
{% endif %}
```

If you are used to Ansible, this Salt state isn’t too dissimilar to the equivalent Ansible playbook. One useful feature of Salt is being able to use Jinja2 loops and conditionals to repeat or vary a task (compared to using loop, when and/or with_ in Ansible).

Writing the equivalent of the above in Ansible would likely require either duplicate tasks for the Consul server and client, or referencing maps/dictionaries and variables to get the right files. With Salt, you can generate the parameters of a task (and potentially the tasks themselves) conditionally.

To explain each part of this: -

```yaml
consul_binary:
  archive.extracted:
    - name: /usr/local/bin
    - source: https://releases.hashicorp.com/consul/1.7.3/consul_1.7.3_linux_amd64.zip
    - source_hash: sha256=453814aa5d0c2bc1f8843b7985f2a101976433db3e6c0c81782a3c21dd3f9ac3
    - enforce_toplevel: false
    - user: root
    - group: root
    - if_missing: /usr/local/bin/consul
```

This downloads the Consul package (version 1.7.3) and extracts the contents to /usr/local/bin. We use the source_hash to verify the file matches the hash provided by Hashicorp themselves. We set enforce_toplevel to false so that we don’t create a /usr/local/bin/consul_1.7.3_linux_amd64 directory. The if_missing says that we only do this if /usr/local/bin/consul doesn’t exist (i.e. the Consul binary).
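Salt performs the hash check itself, but the logic is easy to picture. The Python sketch below (hashlib from the standard library; should_extract is a hypothetical name) mimics if_missing and source_hash together: skip entirely if the binary is already present, otherwise refuse to proceed unless the archive's SHA-256 matches. It takes a bare hex digest rather than Salt's sha256= prefixed form.

```python
import hashlib
import os
import tempfile


def sha256_of(path: str, chunk_size: int = 65536) -> str:
    """Hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def should_extract(archive: str, expected_sha256: str, if_missing: str) -> bool:
    """Hypothetical mimic of if_missing plus source_hash.

    Returns False when the target already exists, True when the archive
    matches the expected hash, and raises ValueError on a mismatch.
    """
    if os.path.exists(if_missing):
        return False  # binary already installed, skip the download/extract
    actual = sha256_of(archive)
    if actual != expected_sha256.lower():
        raise ValueError(f"hash mismatch: expected {expected_sha256}, got {actual}")
    return True


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        archive = os.path.join(tmp, "consul.zip")
        with open(archive, "wb") as handle:
            handle.write(b"example archive contents")
        expected = hashlib.sha256(b"example archive contents").hexdigest()
        print(should_extract(archive, expected, os.path.join(tmp, "consul")))  # True
```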

If you need to install a new version of Consul at a later date, you can remove the if_missing part during upgrade. I would advise leaving it in until then so that you are not downloading the Consul binary every time you run your Salt states.

```yaml
consul_user:
  user.present:
    - name: consul
    - fullname: Consul
    - shell: /bin/false
    - home: /etc/consul.d

consul_group:
  group.present:
    - name: consul
```

The above tasks ensure that a consul user exists (so that Consul does not run as root or another user), along with a group also called consul. In most cases, creating the consul user is enough to also create the consul group, but I found that on OpenSUSE this is not the case. Given the group should exist anyway, checking for it isn’t an issue.

The group.present state also ensures it is created, if it doesn’t exist already.

```yaml
/etc/systemd/system/consul.service:
  file.managed:
    {% if pillar['consul'] is defined %}
    {% if 'server' in pillar['consul'] %}
    - source: salt://consul/server/files/consul.service.j2
    {% else %}
    - source: salt://consul/client/files/consul.service.j2
    {% endif %}
    {% endif %}
    - user: root
    - group: root
    - mode: 0644
    - template: jinja
```

This state creates a SystemD unit file (/etc/systemd/system/consul.service), with different contents if the machine is a Consul server or a client.

The salt:// path is relative to the base directory of your state files (in my case /srv/salt/states). Therefore the files are /srv/salt/states/consul/server/files/consul.service.j2 and /srv/salt/states/consul/client/files/consul.service.j2.

The contents of both files are: -

```ini
[Unit]
Description="HashiCorp Consul - A service mesh solution"
Documentation=https://www.consul.io/
Requires=network-online.target
After=network-online.target
ConditionFileNotEmpty=/etc/consul.d/consul.hcl

[Service]
Type=notify
User=consul
Group=consul
ExecStart=/usr/local/bin/consul agent -config-dir /etc/consul.d
ExecReload=/usr/local/bin/consul reload
KillMode=process
Restart=on-failure
TimeoutSec=300s
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

Both files are identical currently, but it does mean that if you need to add server-specific options you can, without also affecting the clients.

```yaml
/opt/consul:
  file.directory:
    - user: consul
    - group: consul
    - mode: 755
    - makedirs: True

/etc/consul.d:
  file.directory:
    - user: consul
    - group: consul
    - mode: 755
    - makedirs: True
```

The above states ensure the referenced directories exist. The /opt/consul directory is where Consul stores its data and running state. The /etc/consul.d directory is where Consul sources its configuration.

```yaml
/etc/consul.d/consul.hcl:
  file.managed:
    {% if pillar['consul'] is defined %}
    {% if pillar['consul']['server'] is defined %}
    - source: salt://consul/server/files/consul.hcl.j2
    {% else %}
    - source: salt://consul/client/files/consul.hcl.j2
    {% endif %}
    {% endif %}
    - user: consul
    - group: consul
    - mode: 0640
    - template: jinja
```

The above state adds /etc/consul.d/consul.hcl to the machine, with the configuration dependent upon whether it is the server or the client. The two files look like the below: -

salt://consul/server/files/consul.hcl.j2

```
{
{%- for ip in grains['ipv4'] %}
{%- if '10.15.31' in ip %}
  "advertise_addr": "{{ ip }}",
  "bind_addr": "{{ ip }}",
{%- endif %}
{%- endfor %}
  "bootstrap_expect": 1,
  "client_addr": "0.0.0.0",
  "data_dir": "/opt/consul",
  "datacenter": "{{ pillar['consul']['dc'] }}",
  "encrypt": "{{ pillar['consul']['enc_key'] }}",
  "node_name": "{{ grains['nodename'] }}",
  "retry_join": [
{%- for server in pillar['consul']['servers'] %}
    "{{ server }}",
{%- endfor %}
  ],
  "server": true,
  "autopilot": {
    "cleanup_dead_servers": true,
    "last_contact_threshold": "200ms",
    "max_trailing_logs": 250,
    "server_stabilization_time": "10s",
  },
  "ui": true
}
```

The first part of this uses Salt grains, which are details about the hosts the Salt agents are running on. If you are familiar with Ansible, you would know these as facts. Consul tries to autodiscover the IP address to advertise and bind to, but sometimes it can pick the wrong one (for example, a public-facing interface rather than a private one). In this case, we go through the IPs configured on the host, and use whichever contains 10.15.31. Our management/private range is 10.15.31.0/24, so all hosts should have an IP in this range.
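One caveat: the template's check is a substring test, so an address such as 110.15.31.7 would also match. A stricter alternative, sketched here in Python with the standard ipaddress module, tests membership of the 10.15.31.0/24 network instead. This illustrates the selection logic rather than replacing the Jinja loop, and the addresses in the example are made up.

```python
import ipaddress
from typing import Iterable, Optional

# Management network from the lab description.
MANAGEMENT_NET = ipaddress.ip_network("10.15.31.0/24")


def management_ip(addresses: Iterable[str]) -> Optional[str]:
    """Return the first address inside MANAGEMENT_NET, or None if none match."""
    for addr in addresses:
        if ipaddress.ip_address(addr) in MANAGEMENT_NET:
            return addr
    return None


if __name__ == "__main__":
    # Hypothetical ipv4 grain for one host.
    ipv4 = ["127.0.0.1", "192.168.122.50", "10.15.31.21"]
    print(management_ip(ipv4))  # 10.15.31.21
    # The template's substring test would accept this address; the network test does not.
    print("10.15.31" in "110.15.31.7", management_ip(["110.15.31.7"]))  # True None
```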

The bootstrap_expect parameter is important. Setting it to 1 lets you run a single-server Consul cluster (with multiple clients). In production you should never run with a single server, as you can lose data and cluster state if that server encounters problems. It is recommended to run 3 or more Consul servers for quorum. This is because with only two servers, if there is a network partition or other failure, you have no way of knowing which server has the correct state. If however you have 3 (or more), the “correctness” can be determined by a majority of the servers agreeing (a majority vote), so a 3-server cluster can survive losing one server.
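The majority-vote arithmetic behind that advice is short enough to write out. Consul servers agree on state using the Raft consensus protocol, which needs a strict majority of servers; the Python sketch below simply computes that majority and the resulting failure tolerance for a given number of servers.

```python
def quorum(servers: int) -> int:
    """Votes needed for a majority among `servers` voting Consul servers."""
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """Servers that can fail while the remaining ones still form a majority."""
    return servers - quorum(servers)


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} server(s): quorum {quorum(n)}, tolerates {failure_tolerance(n)}")
```

Two servers tolerate no more failures than one, and four tolerate no more than three, which is why odd numbers of servers are the usual recommendation.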

The datacenter is the name of your Consul cluster. It doesn’t dictate a physical location, and is instead a logical separation between clusters. You could call your datacenter anything from equinix-ld5, preprod or pod4rack2 to meshuggah, so long as the separation makes sense to you.

The encrypt field uses an encryption key generated by Consul. You can create these by running consul keygen. This command does not replace the existing Consul key of your running cluster, instead just generating a key in the correct format for any Consul cluster.
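consul keygen is the simplest way to get a key. To show what the value actually is, the Python sketch below produces something of the same shape: random bytes from the secrets module, Base64-encoded. It assumes a 32-byte key, which is what recent Consul versions generate; check the encrypt documentation for your Consul version before using a key produced this way.

```python
import base64
import secrets


def gossip_key(num_bytes: int = 32) -> str:
    """Random key, Base64-encoded, in the same shape as `consul keygen` output."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


if __name__ == "__main__":
    print(gossip_key())
```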

The retry_join section dictates what server(s) to form a cluster with when Consul comes up.

The most important part is the server: true field, which makes the host(s) using this configuration Consul servers. We also enable the ui, so that you can use the Consul web interface to view the state of your cluster.

salt://consul/client/files/consul.hcl.j2

```
{
{%- for ip in grains['ipv4'] %}
{%- if '10.15.31' in ip %}
  "advertise_addr": "{{ ip }}",
  "bind_addr": "{{ ip }}",
{%- endif %}
{%- endfor %}
  "data_dir": "{{ pillar['consul']['data_dir'] }}",
  "datacenter": "{{ pillar['consul']['dc'] }}",
  "encrypt": "{{ pillar['consul']['enc_key'] }}",
  "node_name": "{{ grains['nodename'] }}",
  "retry_join": [
{%- for server in pillar['consul']['servers'] %}
    "{{ server }}",
{%- endfor %}
  ],
  "server": false,
}
```

The client configuration is similar to the server configuration, with some parts removed (bootstrap_expect, client_addr, ui and the autopilot section), and with the data directory taken from a pillar rather than hard-coded. We also set "server": false to ensure this node doesn’t become a Consul server.
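To check what a client ends up with, the same fields can be assembled outside Salt. The Python sketch below is hypothetical (Salt itself renders the Jinja template above); the pillar values mirror those defined in the Pillars section, the key is a placeholder, and the management IP is assumed to have been chosen already.

```python
import json


def client_config(pillar: dict, grains: dict, advertise_ip: str) -> str:
    """Assemble the client settings from pillar/grains-like dicts as JSON."""
    consul = pillar["consul"]
    config = {
        "advertise_addr": advertise_ip,
        "bind_addr": advertise_ip,
        "data_dir": consul["data_dir"],
        "datacenter": consul["dc"],
        "encrypt": consul["enc_key"],
        "node_name": grains["nodename"],
        "retry_join": list(consul["servers"]),
        "server": False,
    }
    # json.dumps never emits trailing commas, so the result is strict JSON.
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    pillar = {
        "consul": {
            "data_dir": "/opt/consul",
            "dc": "yetiops",
            "enc_key": "PLACEHOLDER_KEY",  # placeholder, not a real key
            "servers": ["salt-master.yetiops.lab"],
        }
    }
    print(client_config(pillar, {"nodename": "ubuntu-01"}, "10.15.31.21"))
```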

```yaml
consul_service:
  service.running:
    - name: consul
    - enable: True
    - reload: True
    - watch:
      - file: /etc/consul.d/consul.hcl
```

The above task makes sure that the consul service is running and enabled at boot. It also reloads the service, but only when the /etc/consul.d/consul.hcl file changes.
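The idea behind file.managed plus watch is to bring the file into the desired state, then act only if something changed. The Python sketch below illustrates that pattern; manage_file and converge are hypothetical names, and the reload is a stand-in callable rather than a real call to the consul service.

```python
import os
import tempfile
from typing import Callable


def manage_file(path: str, content: str) -> bool:
    """Write content only if it differs from what is on disk; True if changed."""
    if os.path.exists(path):
        with open(path, encoding="utf-8") as handle:
            if handle.read() == content:
                return False  # already in the desired state
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(content)
    return True


def converge(path: str, content: str, reload_service: Callable[[], None]) -> bool:
    """Reload only when the managed file actually changed; True if reloaded."""
    changed = manage_file(path, content)
    if changed:
        reload_service()
    return changed


def fake_reload() -> None:
    """Stand-in for reloading Consul."""
    print("reloading consul")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        config = os.path.join(tmp, "consul.hcl")
        converge(config, '{"server": false}', fake_reload)  # file created: reloads
        converge(config, '{"server": false}', fake_reload)  # unchanged: no reload
```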

```yaml
{% if pillar['consul'] is defined %}
{% if pillar['consul']['prometheus_services'] is defined %}
{% for service in pillar['consul']['prometheus_services'] %}
/etc/consul.d/{{ service }}.hcl:
  file.managed:
    - source: salt://consul/services/files/{{ service }}.hcl
    - user: consul
    - group: consul
    - mode: 0640
    - template: jinja

consul_reload_{{ service }}:
  cmd.run:
    - name: consul reload
    - watch:
      - file: /etc/consul.d/{{ service }}.hcl
{% endfor %}
{% endif %}
{% endif %}
```

This section goes through a list of services. For every service defined, it places the corresponding $SERVICE.hcl file into the /etc/consul.d/ directory. It then runs consul reload, which makes Consul reload its configuration (picking up any configuration files in the /etc/consul.d directory). The watch directive ties this reload to /etc/consul.d/$SERVICE.hcl. Be aware, though, that a cmd.run state executes every time the state is applied; if you want consul reload to run only when the contents of the file have changed, onchanges is the requisite to use instead of watch.

For example, if one of our services was node_exporter, we would look for salt://consul/services/files/node_exporter.hcl. We would place this file on the machine (as /etc/consul.d/node_exporter.hcl), and then reload Consul. The contents of the node_exporter.hcl file are: -

```json
{"service":
  {"name": "node_exporter",
   "tags": ["node_exporter", "prometheus"],
   "port": 9100
  }
}
```

For further information on the contents of this file, see my previous post on using Consul to discover services with Prometheus. The tags are used so that Prometheus can discover services specific to it.

Consul is not just for Prometheus, and can be used by many different applications for service discovery. Tagging services correctly means we only monitor the services that should be monitored, or that expose Prometheus-compatible endpoints.
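Generating the definition and filtering on the tag are both small operations. In the Python sketch below, service_definition reproduces the shape of node_exporter.hcl, and prometheus_services keeps only entries tagged prometheus, which is roughly what a Prometheus Consul service discovery job with a tag filter achieves. Both function names and the web service are hypothetical.

```python
import json
from typing import Dict, List


def service_definition(name: str, port: int) -> str:
    """Service definition in the same shape as node_exporter.hcl above."""
    return json.dumps({"service": {"name": name, "tags": [name, "prometheus"], "port": port}})


def prometheus_services(catalog: List[Dict]) -> List[str]:
    """Names of services carrying the 'prometheus' tag."""
    return [svc["name"] for svc in catalog if "prometheus" in svc.get("tags", [])]


if __name__ == "__main__":
    node_exporter = json.loads(service_definition("node_exporter", 9100))["service"]
    web = {"name": "web", "tags": ["http"], "port": 80}  # hypothetical non-Prometheus service
    print(prometheus_services([node_exporter, web]))  # ['node_exporter']
```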

This state is applied as such: -

/srv/salt/states/top.sls

```yaml
base:
  'G@init:systemd and G@kernel:Linux':
    - match: compound
    - consul
```

If you supply just the name consul as your state, Salt will look in the /srv/salt/states/consul/ directory for a file named init.sls. If the filename is different (e.g. void.sls), you would need to use consul.void instead.
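Both state names and salt:// URLs resolve relative to the state directory (/srv/salt/states here). The Python sketch below illustrates the mapping: dots become directory separators, and a name can refer either to name.sls or to name/init.sls. It assumes a single file root, and the lookup order (name.sls before name/init.sls) is how this sketch models Salt rather than a copy of Salt's code.

```python
import posixpath
from typing import List

# Base directory for state files used in this post.
STATE_ROOT = "/srv/salt/states"


def sls_candidates(sls: str, root: str = STATE_ROOT) -> List[str]:
    """Files an SLS name can refer to, in the order this sketch assumes Salt checks."""
    rel = sls.replace(".", "/")
    return [posixpath.join(root, rel + ".sls"), posixpath.join(root, rel, "init.sls")]


def salt_url_to_path(url: str, root: str = STATE_ROOT) -> str:
    """Map a salt:// URL onto the state directory."""
    prefix = "salt://"
    if not url.startswith(prefix):
        raise ValueError(f"not a salt:// URL: {url}")
    return posixpath.join(root, url[len(prefix):])


if __name__ == "__main__":
    print(sls_candidates("consul"))
    print(sls_candidates("consul.void"))
    print(salt_url_to_path("salt://consul/client/files/consul.hcl.j2"))
```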

Usually you would match hosts in your top.sls file with something like '*.yetiops.lab': or 'arch-01*':. These match against the nodenames or IDs for hosts (and their agents) registered to the Salt server.

Instead, we are using the G@ option to match against grains. In this case, we are saying that if the host is a Linux host (i.e. running the Linux kernel) and has the init system of systemd, then run the specified states against it.

If you are only matching against one grain (i.e. only the kernel, or only the init system), you could use match: grain. The match: compound option allows you to match against multiple grains, grains and the hostname, hostnames and pillars, and much more (full list here).

Because we only want to apply this state against Linux hosts running SystemD, we match multiple grains. You can test what hosts these grains apply to using: -

```
# Linux and running SystemD
$ salt -C 'G@init:systemd and G@kernel:Linux' test.ping
ubuntu-01.yetiops.lab:
    True
arch-01.yetiops.lab:
    True
salt-master.yetiops.lab:
    True
centos-01.yetiops.lab:
    True
suse-01.yetiops.lab:
    True

# Linux, any init system
$ salt -C 'G@kernel:Linux' test.ping
salt-master.yetiops.lab:
    True
ubuntu-01.yetiops.lab:
    True
arch-01.yetiops.lab:
    True
suse-01.yetiops.lab:
    True
centos-01.yetiops.lab:
    True
void-01.yetiops.lab:
    True
alpine-01.yetiops.lab:
    True
```

The first command matches only our SystemD machines, whereas the second also includes Alpine and Void (both of which do not run SystemD).
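The logic of that compound expression can be sketched in a few lines. The Python below is a deliberately tiny, hypothetical evaluator that only understands G@ terms joined by "and" and compares values exactly (Salt's real matcher also supports or, not, globbing and the other match types). The grains it uses are illustrative, and the Alpine and Void init values in particular are assumptions.

```python
from typing import Dict, List

# Hypothetical grains for the lab hosts. The init values for Alpine and Void are
# assumptions; check yours with: salt '*' grains.item init
HOSTS: Dict[str, Dict[str, str]] = {
    "salt-master.yetiops.lab": {"kernel": "Linux", "init": "systemd"},
    "ubuntu-01.yetiops.lab": {"kernel": "Linux", "init": "systemd"},
    "arch-01.yetiops.lab": {"kernel": "Linux", "init": "systemd"},
    "centos-01.yetiops.lab": {"kernel": "Linux", "init": "systemd"},
    "suse-01.yetiops.lab": {"kernel": "Linux", "init": "systemd"},
    "alpine-01.yetiops.lab": {"kernel": "Linux", "init": "openrc"},
    "void-01.yetiops.lab": {"kernel": "Linux", "init": "runit"},
}


def matches(expression: str, grains: Dict[str, str]) -> bool:
    """Evaluate 'G@key:value and G@key:value ...' (exact values, 'and' only)."""
    for term in expression.split(" and "):
        term = term.strip()
        if not term.startswith("G@"):
            raise ValueError(f"this sketch only supports G@ terms: {term}")
        key, _, value = term[2:].partition(":")
        if grains.get(key) != value:
            return False
    return True


def targets(expression: str, hosts: Dict[str, Dict[str, str]]) -> List[str]:
    """Sorted IDs of the hosts whose grains satisfy the expression."""
    return sorted(host for host, grains in hosts.items() if matches(expression, grains))


if __name__ == "__main__":
    print(targets("G@init:systemd and G@kernel:Linux", HOSTS))
    print(len(targets("G@kernel:Linux", HOSTS)))  # 7
```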

Pillars

The pillars (i.e. the host/group specific variables) are defined as such: -

consul.sls

```yaml
consul:
  data_dir: /opt/consul
  prometheus_services:
    - node_exporter
```

consul-dc.sls

```yaml
consul:
  dc: yetiops
  enc_key: ###CONSUL_KEY###
  servers:
    - salt-master.yetiops.lab
```

consul-server.sls

```yaml
consul:
  server: true
```

These are all in the /srv/salt/pillars/consul directory. It is worth noting that if two sets of pillars use the same top-level key (i.e. all of these start with consul), their variables are merged, rather than one set of variables taking precedence over another. This means that you can refer to all of them in your state files and templates using consul.$VARIABLE.
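By default, Salt merges pillars rendered from YAML by recursing into dictionaries, which is why the three consul keys combine. The Python sketch below models just that recursive behaviour with a hypothetical merge function; Salt's pillar_source_merging_strategy and pillar_merge_lists settings can change how merges (and lists in particular) are handled, so treat this as an illustration rather than Salt's exact algorithm.

```python
import copy
from typing import Any, Dict

# Pillar files from above, as Python dicts (the key is a placeholder).
CONSUL = {"consul": {"data_dir": "/opt/consul", "prometheus_services": ["node_exporter"]}}
CONSUL_DC = {"consul": {"dc": "yetiops", "enc_key": "PLACEHOLDER", "servers": ["salt-master.yetiops.lab"]}}
CONSUL_SERVER = {"consul": {"server": True}}


def merge(base: Dict[str, Any], overlay: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively merge overlay into a copy of base; nested dicts are combined."""
    result = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)  # non-dicts (including lists) are replaced
    return result


if __name__ == "__main__":
    salt_master = merge(merge(CONSUL_DC, CONSUL), CONSUL_SERVER)
    print(sorted(salt_master["consul"]))
    # ['data_dir', 'dc', 'enc_key', 'prometheus_services', 'server', 'servers']
```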

These pillars are applied to the nodes as per below: -

```yaml
base:
  '*':
    - consul.consul-dc
  'G@kernel:Linux':
    - match: compound
    - consul.consul
  'salt-master*':
    - consul.consul-server
```

All hosts receive the consul-dc pillar, as the variables defined here are common across every host we will configure in this lab.

The consul.consul pillar (i.e. /srv/salt/pillars/consul/consul.sls) is applied to all of our Linux hosts, as they all have the same data directory and the same prometheus_services. Currently there is only one grain to match against, so we could have used match: grain. However in later posts in this series, we will match against multiple grains (requiring the compound match).

Finally, we set salt-master* as our Consul server, which changes the SystemD unit file and Consul configuration deployed to it (as seen in the states section above).

To view the pillars that a node has, you can run the following: -

```
$ salt 'ubuntu-01*' pillar.items
ubuntu-01.yetiops.lab:
    ----------
    consul:
        ----------
        data_dir:
            /opt/consul
        dc:
            yetiops
        enc_key:
            ###CONSUL_ENCRYPTION_KEY###
        prometheus_services:
            - node_exporter
        servers:
            - salt-master.yetiops.lab
```

Alternatively, if you just want to see a single pillar, you can use: -

```
$ salt 'salt-master*' pillar.item consul:server
salt-master.yetiops.lab:
    ----------
    consul:server:
        True

$ salt 'ubuntu*' pillar.item consul:server
ubuntu-01.yetiops.lab:
    ----------
    consul:server:
```

Apply the states

To apply the states, you can run any of the following: -

- salt '*' state.highstate from the Salt server (to configure every machine with every state)
- salt 'suse*' state.highstate from the Salt server (to configure all machines with a name beginning with suse, applying all states)
- salt 'suse*' state.apply consul from the Salt server (to configure all machines with a name beginning with suse, applying only the consul state)
- salt-call state.highstate from a machine running the Salt agent (to configure just that machine with all states)
- salt-call state.apply consul from a machine running the Salt agent (to configure just that machine with only the consul state)

You can also use the salt -C option to apply based upon grains, pillars or other types of matches. For example, to apply to all machines running a Debian-derived operating system, you could run salt -C 'G@os_family:Debian' state.highstate which would cover Debian, Ubuntu, Elementary OS, and others that use Debian as their base.

Consul - Alpine Linux

As mentioned already, Alpine does not use SystemD, instead using OpenRC. This is because SystemD has quite a few associated projects, and Alpine tries to keep its base installation as lean as possible. Also, as Alpine is often a base for containers (which typically do not have their own init system), it makes something like SystemD surplus to requirements.

States

The state file for Alpine looks like the below: -

/srv/salt/states/consul/alpine.sls

```yaml
consul_package:
  pkg.installed:
    - pkgs:
      - consul
      - consul-openrc

/etc/init.d/consul:
  file.managed:
    - source: salt://consul/client/files/consul-rc
    - user: root
    - group: root
    - mode: 755

/etc/consul/server.json:
  file.absent

/opt/consul:
  file.directory:
    - user: consul
    - group: consul
    - mode: 755
    - makedirs: True

/etc/consul.d:
  file.directory:
    - user: consul
    - group: consul
    - mode: 755
    - makedirs: True

/etc/consul.d/consul.hcl:
  file.managed:
    {% if pillar['consul'] is defined %}
    {% if pillar['consul']['server'] is defined %}
    - source: salt://consul/server/files/consul.hcl.j2
    {% else %}
    - source: salt://consul/client/files/consul.hcl.j2
    {% endif %}
    {% endif %}
    - user: consul
    - group: consul
    - mode: 0640
    - template: jinja

consul_service:
  service.running:
    - name: consul
    - enable: True
    - reload: True
    - watch:
      - file: /etc/consul.d/consul.hcl

{% if pillar['consul'] is defined %}
{% if pillar['consul']['prometheus_services'] is defined %}
{% for service in pillar['consul']['prometheus_services'] %}
/etc/consul.d/{{ service }}.hcl:
  file.managed:
    - source: salt://consul/services/files/{{ service }}.hcl
    - user: consul
    - group: consul
    - mode: 0640
    - template: jinja

consul_reload_{{ service }}:
  cmd.run:
    - name: consul reload
    - watch:
      - file: /etc/consul.d/{{ service }}.hcl
{% endfor %}
{% endif %}
{% endif %}
```

Most of the states in this file are identical to the SystemD version. Below we shall go over the differences: -

```yaml
consul_package:
  pkg.installed:
    - pkgs:
      - consul
      - consul-openrc
```

Rather than retrieving the Consul binary directly from Hashicorp themselves, we install it from the Alpine package repository. Alpine’s packages are usually quite close to the latest version, so it makes sense to use them. We also install the consul-openrc package, which puts the correct openrc hooks in place, and installs a basic openrc file for Consul (although we are going to override it).

```yaml
/etc/init.d/consul:
  file.managed:
    - source: salt://consul/client/files/consul-rc
    - user: root
    - group: root
    - mode: 755
```

Here, we replace the existing /etc/init.d/consul file with our own. The contents of this file are: -

```sh
#!/sbin/openrc-run

description="A tool for service discovery, monitoring and configuration"
description_checkconfig="Verify configuration files"
description_healthcheck="Check health status"
description_reload="Reload configuration"

extra_commands="checkconfig"
extra_started_commands="healthcheck reload"

command="/usr/sbin/$RC_SVCNAME"
command_args="$consul_opts -config-dir=/etc/consul.d"
command_user="$RC_SVCNAME:$RC_SVCNAME"

supervisor=supervise-daemon
pidfile="/run/$RC_SVCNAME.pid"
output_log="/var/log/$RC_SVCNAME.log"
error_log="/var/log/$RC_SVCNAME.log"
umask=027
respawn_max=0
respawn_delay=10
healthcheck_timer=60

depend() {
    need net
    after firewall
}

checkconfig() {
    ebegin "Checking /etc/consul.d"
    consul validate /etc/consul.d
    eend $?
}

start_pre() {
    checkconfig
    checkpath -f -m 0640 -o "$command_user" "$output_log" "$error_log"
}

healthcheck() {
    $command info > /dev/null 2>&1
}

reload() {
    start_pre \
        && ebegin "Reloading $RC_SVCNAME configuration" \
        && supervise-daemon "$RC_SVCNAME" --signal HUP --pidfile "$pidfile"
    eend $?
}
```

This file makes sure that we are pointing to the correct configuration directory (the original points to /etc/consul and /etc/consul.d).

```yaml
/etc/consul/server.json:
  file.absent
```

This state makes sure that /etc/consul/server.json is removed. If this file were present, Alpine would try to start Consul as a server. While our OpenRC file no longer references /etc/consul, removing the file means that if a new version of consul-openrc ever overrides our service file, this node still won’t try to become a Consul server.

After this, all the other states are identical. We do not need to specify the users and groups, as they are created automatically when installing the consul package. You could add states that check for these users and groups, but they would simply report them as already present.

Our states/top.sls file looks like the below: -

```yaml
base:
  'G@init:systemd and G@kernel:Linux':
    - match: compound
    - consul
  'os:Alpine':
    - match: grain
    - consul.alpine
```

As you can see, we are matching the os grain, and applying the consul.alpine state if the host is running Alpine Linux. We can verify this with: -

```
$ salt 'salt*' grains.item os
salt-master.yetiops.lab:
    ----------
    os:
        Debian

$ salt 'alpine*' grains.item os
alpine-01.yetiops.lab:
    ----------
    os:
        Alpine
```

Pillars

The pillars are identical to those used in the SystemD states, as we do not do any matches against the Init system or the name of the OS, only that they are a Linux host.

Apply the states

You apply the states the same as before, using either matches against the name, or you could match against grains, e.g. salt -C 'G@os:Alpine' state.highstate

Consul - Void Linux

Void Linux uses an Init system called runit, which is modelled on daemontools (created by Daniel J. Bernstein). Void is a popular option among those who do not favour SystemD as an Init system.

States

The state file for Void looks like the below: -

/srv/salt/states/consul/void.sls

consul_package:

pkg.installed:

- pkgs:

- consul

consul_user:

user.present:

- name: consul

- fullname: Consul

- shell: /bin/false

- home: /etc/consul.d

consul_group:

group.present:

- name: consul

/opt/consul:

file.directory:

- user: consul

- group: consul

- mode: 755

- makedirs: True

/etc/consul.d:

file.directory:

- user: consul

- group: consul

- mode: 755

- makedirs: True

/run/runit/supervise.consul:

file.directory:

- user: root

- group: root

- mode: 0700

- makedirs: True

/etc/sv/consul:

file.directory:

- user: root

- group: root

- mode: 0700

- makedirs: True

/etc/sv/consul/run:

file.managed:

- source: salt://consul/client/files/consul-runit

- user: root

- group: root

- mode: 0755

/etc/sv/consul/supervise:

file.symlink:

- target: /run/runit/supervise.consul

- user: root

- group: root

- mode: 0777

/var/service/consul:

file.symlink:

- target: /etc/sv/consul

- user: root

- group: root

- mode: 0777

/etc/consul.d/consul.hcl:

file.managed:

{% if pillar['consul'] is defined %}

{% if pillar['consul']['server'] is defined %}

- source: salt://consul/server/files/consul.hcl.j2

{% else %}

- source: salt://consul/client/files/consul.hcl.j2

{% endif %}

{% endif %}

- user: consul

- group: consul

- mode: 0640

- template: jinja

consul_service:

service.running:

- name: consul

- enable: True

- watch:

- file: /etc/consul.d/consul.hcl

{% if pillar['consul'] is defined %}

{% if pillar['consul']['prometheus_services'] is defined %}

{% for service in pillar['consul']['prometheus_services'] %}

/etc/consul.d/{{ service }}.hcl:

file.managed:

- source: salt://consul/services/files/{{ service }}.hcl

- user: consul

- group: consul

- mode: 0640

- template: jinja

consul_reload_{{ service }}:

cmd.run:

- name: consul reload

- watch:

- file: /etc/consul.d/{{ service }}.hcl

{% endfor %}

{% endif %}

{% endif %}

The first few states are similar to those for Alpine and SystemD: installing the consul package and creating users and directories. After that, most of the differences are runit-specific. runit does not use SystemD unit files, SysVinit scripts or OpenRC files. Instead, it uses directories, shell scripts and symbolic links, along with a supervisor process that makes sure a service is always running.

/run/runit/supervise.consul:

file.directory:

- user: root

- group: root

- mode: 0700

- makedirs: True

First, we create the /run/runit/supervise.consul directory. While the service is running, runit's supervisor (runsv) uses this directory to record the state of the process: -

[root@void-01 supervise.consul]# pwd

/run/runit/supervise.consul

[root@void-01 supervise.consul]# ls

control lock ok pid stat status

This includes lock files, status, the pid file and more.

/etc/sv/consul:

file.directory:

- user: root

- group: root

- mode: 0700

- makedirs: True

We create the Consul service directory in the /etc/sv directory, which is where all available (but not necessarily running) services reside.

/etc/sv/consul/run:

file.managed:

- source: salt://consul/client/files/consul-runit

- user: root

- group: root

- mode: 0755

The above adds the file /srv/salt/states/consul/client/files/consul-runit as /etc/sv/consul/run. The contents of this file are: -

#!/bin/sh

exec consul agent -config-dir /etc/consul.d

This is little more than a shell script that runit supervises (i.e. makes sure it is running).

/var/service/consul:

file.symlink:

- target: /etc/sv/consul

- user: root

- group: root

- mode: 0777

The above creates a symbolic link from /var/service/consul to /etc/sv/consul. This is what tells the Void machine that we want to run this service.
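The runit layout above amounts to three steps: a service directory, an executable run script, and a symlink into the scanned service directory. The following is a minimal Python sketch of those steps, purely for illustration. The `root` prefix is a hypothetical parameter so the sketch can run in a scratch directory; on a real Void host it would be `/`. The sketch leaves out the supervise symlink that the Salt state also creates.

```python
from pathlib import Path


def install_runit_service(root: Path, name: str, command: str) -> Path:
    """Create <root>/etc/sv/<name>/run and enable it via <root>/var/service/<name>.

    `root` is a hypothetical prefix so the sketch can run in a scratch
    directory; on a real Void host it would be Path("/").
    """
    # The service definition lives in /etc/sv/<name> (available, not yet enabled).
    sv_dir = root / "etc" / "sv" / name
    sv_dir.mkdir(parents=True, exist_ok=True)

    # runsv executes ./run and restarts it whenever it exits; `exec` makes the
    # daemon replace the shell so runsv supervises the daemon itself.
    run_script = sv_dir / "run"
    run_script.write_text(f"#!/bin/sh\nexec {command}\n")
    run_script.chmod(0o755)

    # Enabling the service is just a symlink into the scanned directory.
    service_dir = root / "var" / "service"
    service_dir.mkdir(parents=True, exist_ok=True)
    link = service_dir / name
    if not link.is_symlink():
        link.symlink_to(sv_dir)
    return link
```

The existing-symlink check makes the function safe to re-run, in the same way Salt's file.symlink state is.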

The only other difference we have is that Salt doesn’t seem to be able to reload the service when on Void, so we remove the reload: True line from the service.running state.

After this, everything else is the same as Alpine and SystemD-based systems. We can verify the service is running using: -

$ sv status consul

run: consul: (pid 709) 1718s
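If you want to check this from a script rather than by eye, the status line can be parsed. Below is a small Python sketch. It assumes the `run: NAME: (pid N) Ns` format shown above, plus a `down: NAME: Ns` variant for stopped services. The `SvStatus` and `parse_sv_status` names are hypothetical.

```python
import re
from typing import NamedTuple, Optional

# Matches lines such as "run: consul: (pid 709) 1718s" or "down: consul: 5s, normally up".
_SV_STATUS = re.compile(
    r"^(?P<state>run|down|finish): (?P<service>[^:]+): "
    r"(?:\(pid (?P<pid>\d+)\) )?(?P<seconds>\d+)s"
)


class SvStatus(NamedTuple):
    state: str
    service: str
    pid: Optional[int]
    seconds: int


def parse_sv_status(line: str) -> SvStatus:
    """Split one line of `sv status` output into its fields."""
    m = _SV_STATUS.match(line.strip())
    if m is None:
        raise ValueError(f"unrecognised sv status line: {line!r}")
    pid = int(m["pid"]) if m["pid"] else None
    return SvStatus(m["state"], m["service"], pid, int(m["seconds"]))
```

For example, `parse_sv_status("run: consul: (pid 709) 1718s")` gives a state of `run`, a pid of 709 and an uptime of 1718 seconds.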

Our states/top.sls file looks like the below: -

base:

'G@init:systemd and G@kernel:Linux':

- match: compound

- consul

'os:Alpine':

- match: grain

- consul.alpine

'os:Void':

- match: grain

- consul.void

Again, we’re matching the os grain. We can verify this with: -

$ salt 'void*' grains.item os

void-01.yetiops.lab:

----------

os:

Void

$ salt 'suse*' grains.item os

suse-01.yetiops.lab:

----------

os:

SUSE

Consul - Verification

After this, we can verify Consul is running on all nodes using the consul command: -

$ consul members

Node Address Status Type Build Protocol DC Segment

salt-master 10.15.31.5:8301 alive server 1.7.3 2 yetiops <all>

alpine-01 10.15.31.27:8301 alive client 1.7.3 2 yetiops <default>

arch-01 10.15.31.26:8301 alive client 1.7.3 2 yetiops <default>

centos-01.yetiops.lab 10.15.31.24:8301 alive client 1.7.3 2 yetiops <default>

suse-01 10.15.31.22:8301 alive client 1.7.3 2 yetiops <default>

ubuntu-01 10.15.31.33:8301 alive client 1.7.3 2 yetiops <default>

void-01 10.15.31.31:8301 alive client 1.7.2 2 yetiops <default>

$ consul catalog nodes -service node_exporter

Node ID Address DC

alpine-01 e59eb6fc 10.15.31.27 yetiops

arch-01 97c67201 10.15.31.26 yetiops

centos-01.yetiops.lab 78ac8405 10.15.31.24 yetiops

salt-master 344fb6f2 10.15.31.5 yetiops

suse-01 d2fdd88a 10.15.31.22 yetiops

ubuntu-01 4544c7ff 10.15.31.33 yetiops

void-01 e99c7e3c 10.15.31.31 yetiops
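In the output above, every member is also registered with the node_exporter service. On a larger estate it helps to check this mechanically. The sketch below compares the two outputs and lists alive members that are missing from the catalog. It assumes whitespace-separated columns with no spaces inside values, which holds for the output shown here. The function names are hypothetical.

```python
from __future__ import annotations


def parse_table(output: str) -> list[dict[str, str]]:
    """Turn whitespace-aligned CLI output (header row first) into dicts.

    Assumes no column value contains spaces, which holds for the outputs above.
    """
    lines = [line for line in output.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    return [dict(zip(header, line.split())) for line in lines[1:]]


def nodes_missing_service(members_out: str, catalog_out: str) -> list[str]:
    """Alive members that are absent from `consul catalog nodes -service ...`."""
    alive = {row["Node"] for row in parse_table(members_out) if row.get("Status") == "alive"}
    registered = {row["Node"] for row in parse_table(catalog_out)}
    return sorted(alive - registered)
```

You could feed this the captured stdout of `consul members` and `consul catalog nodes -service node_exporter`, e.g. via `subprocess.run(..., capture_output=True, text=True)`.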

Node Exporter - SystemD

Consul is now running and advertises the node_exporter Consul service for all our nodes. However, we still need to install the Prometheus Node Exporter itself.

States

The following Salt state is used to deploy the Prometheus Node Exporter onto a SystemD-based host: -

/srv/salt/states/exporters/node_exporter/systemd.sls

{% if not salt['file.file_exists']('/usr/local/bin/node_exporter') %}

retrieve_node_exporter:

cmd.run:

- name: wget -O /tmp/node_exporter.tar.gz https://github.com/prometheus/node_exporter/releases/download/v0.18.1/node_exporter-0.18.1.linux-amd64.tar.gz

extract_node_exporter:

archive.extracted:

- name: /tmp

- enforce_toplevel: false

- source: /tmp/node_exporter.tar.gz

- archive_format: tar

- user: root

- group: root

move_node_exporter:

file.rename:

- name: /usr/local/bin/node_exporter

- source: /tmp/node_exporter-0.18.1.linux-amd64/node_exporter

delete_node_exporter_dir:

file.absent:

- name: /tmp/node_exporter-0.18.1.linux-amd64

delete_node_exporter_files:

file.absent:

- name: /tmp/node_exporter.tar.gz

{% endif %}

node_exporter_user:

user.present:

- name: node_exporter

- fullname: Node Exporter

- shell: /bin/false

node_exporter_group:

group.present:

- name: node_exporter

/opt/prometheus/exporters/dist/textfile:

file.directory:

- user: node_exporter

- group: node_exporter

- mode: 755

- makedirs: True

/etc/systemd/system/node_exporter.service:

file.managed:

- source: salt://exporters/node_exporter/files/node_exporter.service.j2

- user: root

- group: root

- mode: 0644

- template: jinja

{% if 'CentOS' in grains['os'] %}

node_exporter_selinux_fcontext:

selinux.fcontext_policy_present:

- name: '/usr/local/bin/node_exporter'

- sel_type: bin_t

node_exporter_selinux_fcontext_applied:

selinux.fcontext_policy_applied:

- name: '/usr/local/bin/node_exporter'

{% endif %}

node_exporter_service_reload:

cmd.run:

- name: systemctl daemon-reload

- watch:

- file: /etc/systemd/system/node_exporter.service

node_exporter_service:

service.running:

- name: node_exporter

- enable: True

{% if ('SUSE' in grains['os'] or 'CentOS' in grains['os']) %}

node_exporter_firewalld_service:

firewalld.service:

- name: node_exporter

- ports:

- 9100/tcp

node_exporter_firewalld_rule:

firewalld.present:

- name: public

- services:

- node_exporter

{% endif %}

There are a lot of similarities to the Consul state, but some key differences.

{% if not salt['file.file_exists']('/usr/local/bin/node_exporter') %}

retrieve_node_exporter:

cmd.run:

- name: wget -O /tmp/node_exporter.tar.gz https://github.com/prometheus/node_exporter/releases/download/v0.18.1/node_exporter-0.18.1.linux-amd64.tar.gz

extract_node_exporter:

archive.extracted:

- name: /tmp

- enforce_toplevel: false

- source: /tmp/node_exporter.tar.gz

- archive_format: tar

- user: root

- group: root

move_node_exporter:

file.rename:

- name: /usr/local/bin/node_exporter

- source: /tmp/node_exporter-0.18.1.linux-amd64/node_exporter

delete_node_exporter_dir:

file.absent:

- name: /tmp/node_exporter-0.18.1.linux-amd64

delete_node_exporter_files:

file.absent:

- name: /tmp/node_exporter.tar.gz

{% endif %}

This state checks whether the node_exporter binary exists in /usr/local/bin. If it does, all of this is skipped. If it doesn't, we first retrieve the tarball via wget and place it in the /tmp directory on the host. Then we extract it. After that, we move /tmp/node_exporter-0.18.1.linux-amd64/node_exporter (i.e. the binary inside the tarball we just downloaded) to /usr/local/bin. Finally, we remove the directory created by extracting the tarball, as well as the tarball itself.

We could have done all of this with the archive.extracted state alone. However, we would still need to move the binary out of the extracted directory, so there is very little benefit. Also, if you use something like a proxy, archive.extracted cannot be told to use it, whereas this method works in those cases.
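The guard-extract-move-clean-up sequence can be sketched in plain Python to show the logic in one place. This is an illustration, not what Salt runs internally. The sketch assumes the tarball has already been downloaded, which the state does with wget, and the function name is hypothetical. The temporary directory takes the place of the two file.absent clean-up states.

```python
import shutil
import tarfile
import tempfile
from pathlib import Path


def install_binary_from_tarball(tarball: Path, member: str, dest: Path) -> bool:
    """Install `member` from `tarball` to `dest` unless `dest` already exists.

    Returns True if the binary was installed, False if it was skipped,
    mirroring the `file.file_exists` guard in the Salt state.
    """
    if dest.exists():
        return False
    # The temporary directory is removed on exit, like the file.absent states.
    with tempfile.TemporaryDirectory() as tmp:
        with tarfile.open(str(tarball), "r:gz") as tar:
            # Extract only the one file we need rather than the whole archive.
            tar.extract(member, path=tmp)
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(Path(tmp) / member), str(dest))
    dest.chmod(0o755)
    return True
```

Like the Jinja guard, this skips everything once the binary is in place. That also means upgrading to a newer release requires removing the old binary first.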

/opt/prometheus/exporters/dist/textfile:

file.directory:

- user: node_exporter

- group: node_exporter

- mode: 755

- makedirs: True

After we create the node_exporter user and group, we also create the /opt/prometheus/exporters/dist/textfile directory. This is so that we can make use of the Node Exporter’s textfile collector if we need to.
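The textfile collector reads files ending in .prom from that directory. Anything that writes there should replace files atomically, so that node_exporter never reads a half-written file. The following is a minimal Python sketch of a writer, assuming a single gauge metric. The metric name in the usage example is hypothetical.

```python
import os
import tempfile
from pathlib import Path


def write_textfile_metric(directory: Path, name: str, metric: str, value: float, help_text: str) -> Path:
    """Write a single gauge to <directory>/<name>.prom atomically.

    The file is written under a temporary name (not ending in .prom, so the
    collector ignores it) and then renamed over the final file.
    """
    lines = [
        f"# HELP {metric} {help_text}",
        f"# TYPE {metric} gauge",
        f"{metric} {value}",
    ]
    fd, tmp_path = tempfile.mkstemp(dir=str(directory), prefix=f".{name}.", suffix=".tmp")
    with os.fdopen(fd, "w") as fh:
        fh.write("\n".join(lines) + "\n")
    # mkstemp creates the file as 0600; make it readable by the node_exporter user.
    os.chmod(tmp_path, 0o644)
    final = directory / f"{name}.prom"
    os.replace(tmp_path, final)
    return final
```

For example, a cron job could call `write_textfile_metric(Path("/opt/prometheus/exporters/dist/textfile"), "salt", "salt_highstate_last_run_timestamp_seconds", ...)` after each highstate. The metric would then appear alongside the standard node metrics.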

/etc/systemd/system/node_exporter.service:

file.managed:

- source: salt://exporters/node_exporter/files/node_exporter.service.j2

- user: root

- group: root

- mode: 0644

- template: jinja

The above is similar to what we configure for Consul’s SystemD unit file. The contents of the file are: -

[Unit]

Description=Node Exporter

After=network.target

[Service]

User=node_exporter

Group=node_exporter

Type=simple

ExecStart=/usr/local/bin/node_exporter --collector.systemd --collector.textfile --collector.textfile.directory=/opt/prometheus/exporters/dist/textfile

[Install]

WantedBy=multi-user.target

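The main points to note are that we enable the SystemD collector (disabled by default) and point the textfile collector at the directory we created previously. The textfile collector is enabled by default, so --collector.textfile only makes this explicit. It has nothing to read until it is given a directory.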

{% if 'CentOS' in grains['os'] %}

node_exporter_selinux_fcontext:

selinux.fcontext_policy_present:

- name: '/usr/local/bin/node_exporter'

- sel_type: bin_t

node_exporter_selinux_fcontext_applied:

selinux.fcontext_policy_applied:

- name: '/usr/local/bin/node_exporter'

{% endif %}

On CentOS (and RHEL), the Node Exporter will fail to start, because SELinux prevents systemd from executing it. You will see messages like this in the logs for node_exporter: -

centos-01 systemd[1]: Started /usr/bin/systemctl start node_exporter.service.

centos-01 systemd[2101]: node_exporter.service: Failed to execute command: Permission denied

centos-01 systemd[2101]: node_exporter.service: Failed at step EXEC spawning /usr/local/bin/node_exporter: Permission denied

centos-01 systemd[1]: node_exporter.service: Main process exited, code=exited, status=203/EXEC

centos-01 systemd[1]: node_exporter.service: Failed with result 'exit-code'.

centos-01 systemd[1]: Started /usr/bin/systemctl enable node_exporter.service.

centos-01 setroubleshoot[2108]: SELinux is preventing /usr/lib/systemd/systemd from execute access on the file node_exporter. For complete SELinux messages run: sealert -l 580a42ae-d512-4280-8cca-282716ed59b2

This is because /usr/local/bin/node_exporter does not have the correct SELinux context. You need to add a file-context policy that labels /usr/local/bin/node_exporter with the bin_t type, and then apply it. After this, it will run without issues.

node_exporter_service_reload:

cmd.run:

- name: systemctl daemon-reload

- watch:

- file: /etc/systemd/system/node_exporter.service

Salt should run a daemon-reload by itself when a SystemD unit file changes, so that SystemD picks up the latest version of the unit. However, we run it as a separate task as well. We may want to add additional collectors later, and we will need a daemon-reload to pick up those changes. Conversely, we didn't do this for Consul, as it is very unlikely we'll need to change its SystemD unit file often (if at all).

{% if ('SUSE' in grains['os'] or 'CentOS' in grains['os']) %}

node_exporter_firewalld_service:

firewalld.service:

- name: node_exporter

- ports:

- 9100/tcp

node_exporter_firewalld_rule:

firewalld.present:

- name: public

- services:

- node_exporter

{% endif %}

The final section configures the host firewall on CentOS and OpenSUSE. Both ship with firewalld by default, and it may be enabled in your environment, so we need to make sure port 9100/tcp is allowed through the host firewall. If you use different zones, or tell node_exporter to listen on a different port, you would need to change this state. Otherwise, this should cover everything needed to allow connections to the node_exporter daemon running on the host.
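A quick way to confirm the firewall change took effect is a plain TCP connection test, run from the Prometheus server rather than the host itself. The following Python sketch is one way to do that. A successful connection only proves the port is reachable; it does not prove that /metrics returns data.

```python
import socket


def port_open(host: str, port: int = 9100, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out (often a dropping firewall) or unresolvable host.
        return False
```

A timeout rather than an immediate refusal is often a sign that the firewall is dropping the traffic, rather than node_exporter not running.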

Our states/top.sls file looks like the below: -

base:

'G@init:systemd and G@kernel:Linux':

- match: compound

- consul

- exporters.node_exporter.systemd

'os:Alpine':

- match: grain

- consul.alpine

'os:Void':

- match: grain

- consul.void

Pillars

There are no pillars for the node_exporter state. If you want to specify different ports or listen addresses, you could customize it using pillars, but I am running it mostly at its defaults.

Node Exporter - Alpine

States

The state file for Alpine looks like the below: -

/srv/salt/states/exporters/node_exporter/alpine.sls

{% if not salt['file.file_exists']('/usr/local/bin/node_exporter') %}

retrieve_node_exporter:

cmd.run:

- name: wget -O /tmp/node_exporter.tar.gz https://github.com/prometheus/node_exporter/releases/download/v0.18.1/node_exporter-0.18.1.linux-amd64.tar.gz

extract_node_exporter:

archive.extracted:

- name: /tmp

- enforce_toplevel: false

- source: /tmp/node_exporter.tar.gz

- archive_format: tar

- user: root

- group: root

move_node_exporter:

file.rename:

- name: /usr/local/bin/node_exporter

- source: /tmp/node_exporter-0.18.1.linux-amd64/node_exporter

delete_node_exporter_dir:

file.absent:

- name: /tmp/node_exporter-0.18.1.linux-amd64

delete_node_exporter_files:

file.absent:

- name: /tmp/node_exporter.tar.gz

{% endif %}

node_exporter_user:

cmd.run:

- name: adduser -H -D -s /bin/false node_exporter

- unless: id node_exporter

/opt/prometheus/exporters/dist/textfile:

file.directory:

- user: node_exporter

- group: node_exporter

- mode: 755

- makedirs: True

/var/log/node_exporter:

file.directory:

- user: node_exporter

- group: node_exporter

- mode: 755

- makedirs: True

/etc/init.d/node_exporter:

file.managed:

- source: salt://exporters/node_exporter/files/node_exporter.openrc

- dest: /etc/init.d/node_exporter

- mode: '0755'

- user: root

- group: root

node_exporter_service:

service.running:

- name: node_exporter

- enable: true

- state: restarted

- watch:

- file: /etc/init.d/node_exporter

Most of this state is similar to the SystemD state, with a few notable differences.

node_exporter_user:

cmd.run:

- name: adduser -H -D -s /bin/false node_exporter

- unless: id node_exporter

Unfortunately, the user.present state does not work correctly on Alpine. It uses useradd in the background, and useradd does not exist on a default Alpine install. A GitHub issue exists for this. Instead, we use the cmd.run module, which runs a shell command on the host, to add the user.

/var/log/node_exporter:

file.directory:

- user: node_exporter

- group: node_exporter

- mode: 755

- makedirs: True

Unlike SystemD (which places logs into binary journal files), Alpine uses plain log files in /var/log. We create a node_exporter directory, rather than just having a /var/log/node_exporter.log file.

/etc/init.d/node_exporter:

file.managed:

- source: salt://exporters/node_exporter/files/node_exporter.openrc

- dest: /etc/init.d/node_exporter

- mode: '0755'

- user: root

- group: root

As before, Alpine uses OpenRC as its Init system, so we need an OpenRC service file for node_exporter. The contents of this file are: -

#!/sbin/openrc-run

description="Prometheus machine metrics exporter"

pidfile=${pidfile:-"/run/${RC_SVCNAME}.pid"}

user=${user:-${RC_SVCNAME}}

group=${group:-${RC_SVCNAME}}

command="/usr/local/bin/node_exporter"

command_args="--collector.textfile --collector.textfile.directory=/opt/prometheus/exporters/dist/textfile"

command_background="true"

start_stop_daemon_args="--user ${user} --group ${group} \

--stdout /var/log/node_exporter/${RC_SVCNAME}.log \

--stderr /var/log/node_exporter/${RC_SVCNAME}.log"

depend() {

after net

}

This tells the Node Exporter to run, and we enable the textfile collector as well. We don’t enable the SystemD collector (due to no SystemD!).

Besides that, everything else is relatively similar to the SystemD state.

Our states/top.sls file looks like the below: -

base:

'G@init:systemd and G@kernel:Linux':

- match: compound

- consul

- exporters.node_exporter.systemd

'os:Alpine':

- match: grain

- consul.alpine

- exporters.node_exporter.alpine

'os:Void':

- match: grain

- consul.void
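To make the targeting in this top.sls concrete, the Python sketch below models which state files a minion would receive. It is a deliberately simplified model, not Salt's matcher: it only mirrors the three targets above. The grain values used in the usage example (e.g. an init grain of openrc or runit) are hypothetical.

```python
from __future__ import annotations


def states_for(grains: dict[str, str]) -> list[str]:
    """Simplified model of the top.sls above: which state files a minion gets.

    Real Salt matching is richer (globs, more compound operators, multiple
    environments); this only mirrors the three targets used here.
    """
    states: list[str] = []
    # 'G@init:systemd and G@kernel:Linux' (compound matcher)
    if grains.get("init") == "systemd" and grains.get("kernel") == "Linux":
        states += ["consul", "exporters.node_exporter.systemd"]
    # 'os:Alpine' (grain matcher)
    if grains.get("os") == "Alpine":
        states += ["consul.alpine", "exporters.node_exporter.alpine"]
    # 'os:Void' (grain matcher)
    if grains.get("os") == "Void":
        states += ["consul.void"]
    return states
```

The model also shows why the targets don't overlap. Alpine and Void never report `init: systemd`, so they only pick up their OS-specific states.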

Pillars

Again, there are no pillars for the node_exporter, so we do not need to apply any.

Node Exporter - Void

States

The state file for Void looks like the below: -

node_exporter_package:

pkg.installed:

- pkgs:

- node_exporter

node_exporter_service:

service.running:

- name: node_exporter

- enable: true

Compared to the previous states, this is much simpler. This is because there is already a working node_exporter package in the Void Linux repositories, so we just install it and enable it.

Pillars

Again, there are no pillars for the node_exporter, so we do not need to apply any.

Node Exporter - Verification

We can verify whether the Node Exporter is available on the hosts by using something like curl or a web browser to check that they are working: -

# salt-master (Debian)

$ curl 10.15.31.5:9100/metrics | grep -Ei "^node_uname_info"

node_uname_info{domainname="(none)",machine="x86_64",nodename="salt-master",release="4.19.0-9-amd64",sysname="Linux",version="#1 SMP Debian 4.19.118-2 (2020-04-29)"} 1

# alpine-01 (Alpine Linux)

$ curl 10.15.31.27:9100/metrics | grep -Ei "^node_uname_info"

node_uname_info{domainname="(none)",machine="x86_64",nodename="alpine-01",release="5.4.43-0-lts",sysname="Linux",version="#1-Alpine SMP Thu, 28 May 2020 09:59:32 UTC"} 1

# arch-01 (Arch Linux)

$ curl 10.15.31.26:9100/metrics | grep -Ei "^node_uname_info"

node_uname_info{domainname="(none)",machine="x86_64",nodename="arch-01",release="5.6.14-arch1-1",sysname="Linux",version="#1 SMP PREEMPT Wed, 20 May 2020 20:43:19 +0000"} 1

# centos-01 (CentOS)

$ curl 10.15.31.24:9100/metrics | grep -Ei "^node_uname_info"

node_uname_info{domainname="(none)",machine="x86_64",nodename="centos-01.yetiops.lab",release="4.18.0-147.8.1.el8_1.x86_64",sysname="Linux",version="#1 SMP Thu Apr 9 13:49:54 UTC 2020"} 1

# suse-01 (OpenSUSE Tumbleweed)

$ curl 10.15.31.22:9100/metrics | grep -Ei "^node_uname_info"

node_uname_info{domainname="",machine="x86_64",nodename="suse-01",release="5.6.12-1-default",sysname="Linux",version="#1 SMP Tue May 12 17:44:12 UTC 2020 (9bff61b)"} 1

# ubuntu-01 (Ubuntu)

$ curl 10.15.31.33:9100/metrics | grep -Ei "^node_uname_info"

node_uname_info{domainname="(none)",machine="x86_64",nodename="ubuntu-01",release="5.4.0-33-generic",sysname="Linux",version="#37-Ubuntu SMP Thu May 21 12:53:59 UTC 2020"} 1

# void-01 (Void Linux)

$ curl 10.15.31.31:9100/metrics | grep -Ei "^node_uname_info"

node_uname_info{domainname="(none)",machine="x86_64",nodename="void-01",release="5.3.9_1",sysname="Linux",version="#1 SMP PREEMPT Wed Nov 6 15:01:52 UTC 2019"} 1
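If you check many hosts, it is easier to parse the node_uname_info line than to read it. Below is a minimal Python parser for a single labelled sample in the Prometheus text format. It assumes there is no trailing timestamp, and it does not unescape backslash sequences in label values. The function name is hypothetical.

```python
from __future__ import annotations

import re

# One labelled sample in the Prometheus text format: name{labels} value
_SAMPLE = re.compile(r'^(?P<name>[a-zA-Z_:][a-zA-Z0-9_:]*)\{(?P<labels>.*)\}\s+(?P<value>\S+)$')
# key="value" pairs; the value may contain commas and escaped quotes.
_LABEL = re.compile(r'(?P<key>[a-zA-Z_][a-zA-Z0-9_]*)="(?P<val>(?:[^"\\]|\\.)*)"')


def parse_sample(line: str) -> tuple[str, dict[str, str], float]:
    """Return (metric name, labels, value) for one labelled sample line."""
    m = _SAMPLE.match(line.strip())
    if m is None:
        raise ValueError(f"not a labelled sample: {line!r}")
    labels = {lm["key"]: lm["val"] for lm in _LABEL.finditer(m["labels"])}
    return m["name"], labels, float(m["value"])
```

Combined with `urllib.request.urlopen(f"http://{host}:9100/metrics")` and a filter on lines starting with node_uname_info, this would give you each host's nodename and kernel release in one pass.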

Configuring Prometheus

Configuring Prometheus to use Consul for service discovery is covered in this post. You can choose many methods for generating the Prometheus configuration, including config management (like Ansible or SaltStack), embedding it within a Docker container, or using something like the Kubernetes Prometheus Operator.

Using Salt

Below is a sample state for how to configure it using SaltStack, using Docker to run Prometheus within a container. In this, we generate the configuration on the host (rather than inside the container). This allows us to make changes to the configuration with Salt, without needing to restart the container, or run the Salt agent from within the container itself.

docker_service:

service.running:

- name: docker

- enable: true

- reload: true

/etc/prometheus:

file.directory:

- user: nobody

- group: nogroup

- mode: 755

- makedirs: True

/etc/alertmanager:

file.directory:

- user: nobody

- group: nogroup

- mode: 755

- makedirs: True

/etc/prometheus/alerts:

file.directory:

- user: nobody

- group: nogroup

- mode: 755

- makedirs: True

/etc/prometheus/rules:

file.directory:

- user: nobody

- group: nogroup

- mode: 755

- makedirs: True

/etc/prometheus/prometheus.yml:

file.managed:

- source: salt://prometheus/files/prometheus.yml.j2

- user: nobody

- group: nogroup

- mode: 0640

- template: jinja

prometheus_docker_volume:

docker_volume.present:

- name: prometheus

- driver: local

prometheus-container:

docker_container.running:

- name: prometheus

- image: prom/prometheus:latest

- restart_policy: always

- port_bindings:

- 9090:9090

- binds:

- prometheus:/prometheus:rw

- /etc/prometheus:/etc/prometheus:rw

- entrypoint:

- prometheus

- --config.file=/etc/prometheus/prometheus.yml

- --storage.tsdb.path=/prometheus

- --web.console.libraries=/usr/share/prometheus/console_libraries

- --web.console.templates=/usr/share/prometheus/consoles

- --web.enable-lifecycle

prometheus_config_reload:

cmd.run:

- name: curl -X POST http://localhost:9090/-/reload

- onchanges:

- file: /etc/prometheus/prometheus.yml

For those familiar with Docker, you might wonder why we are using bind mounts for the containers. Currently, SaltStack’s docker_container seems to mount volumes as the root user, at which point the containers cannot write to them. The Prometheus process inside the container runs as the user nobody and the group nogroup, which cannot write to a file or folder owned by root.

When using bind mounts, the permissions from the host operating system are inherited by the container. This means we can set the permissions of the directories on the host to nobody:nogroup, and the Prometheus container can write to them.

We also use the --web.enable-lifecycle flag so that we can trigger a reload of the configuration through the API. Without it, we would need to send a SIGHUP to the process inside the container, or restart the process or container in some way.
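The prometheus_config_reload state above uses curl to POST to /-/reload. If you prefer a script, the same request can be built in Python as sketched below. The helper names are hypothetical. As above, the endpoint only accepts the request when Prometheus runs with --web.enable-lifecycle.

```python
import urllib.request


def reload_request(base_url: str = "http://localhost:9090") -> urllib.request.Request:
    """Build the POST that asks Prometheus to re-read its configuration."""
    return urllib.request.Request(base_url.rstrip("/") + "/-/reload", data=b"", method="POST")


def reload_prometheus(base_url: str = "http://localhost:9090", timeout: float = 5.0) -> int:
    """Send the reload request and return the HTTP status code.

    urlopen raises urllib.error.HTTPError if Prometheus rejects the request,
    e.g. when the lifecycle API is not enabled.
    """
    with urllib.request.urlopen(reload_request(base_url), timeout=timeout) as resp:
        return resp.status
```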

The contents of /srv/salt/states/prometheus/files/prometheus.yml.j2 file are: -

global:

scrape_interval: 15s

evaluation_interval: 15s

scrape_configs:

- job_name: 'prometheus'

static_configs:

- targets:

- 'localhost:9090'

{%- if pillar['prometheus'] is defined %}

{%- if pillar['prometheus']['consul_clusters'] is defined %}

{%- for cluster in pillar['prometheus']['consul_clusters'] %}

- job_name: '{{ cluster["job"] }}'

consul_sd_configs:

{%- for server in cluster['servers'] %}

- server: '{{ server }}'

{%- endfor %}

relabel_configs:

{%- for tag in cluster['tags'] %}

- source_labels: [__meta_consul_tags]

regex: .*,{{ tag['name'] }},.*

action: keep

{%- endfor %}

- source_labels: [__meta_consul_service]

target_label: job

{%- endfor %}

{%- endif %}

{%- endif %}

To explain what is happening here, we are saying that: -

- If a prometheus pillar is defined then…

  - If a consul_clusters variable is inside the prometheus pillar then…

    - For each Consul cluster defined in consul_clusters:

      - Create a job named {{ cluster["job"] }}

      - Add all of the defined Consul servers from that cluster to the job

      - Keep all targets that match the defined tag(s)

      - Replace the contents of the job label with the contents of the __meta_consul_service metadata

So if we had the below pillars: -

prometheus:

consul_clusters:

- job: consul-dc

servers:

- consul-01.yetiops.lab:8500

- consul-02.yetiops.lab:8500

- consul-03.yetiops.lab:8500

tags:

- name: prometheus

This would generate: -

global:

scrape_interval: 15s

evaluation_interval: 15s

scrape_configs:

- job_name: 'prometheus'

static_configs:

- targets:

- 'localhost:9090'

- job_name: 'consul-dc'

consul_sd_configs:

- server: 'consul-01.yetiops.lab:8500'

- server: 'consul-02.yetiops.lab:8500'

- server: 'consul-03.yetiops.lab:8500'

relabel_configs:

- source_labels: [__meta_consul_tags]

regex: .*,prometheus,.*

action: keep

- source_labels: [__meta_consul_service]

target_label: job
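The Jinja template's logic can also be expressed as a plain Python function, which makes it easier to test pillar changes before Salt renders anything. This is a sketch that mirrors the template rather than replacing it. Rendering the result to YAML is left out, and the function name is hypothetical. Note that each generated job takes its name from the `job` key of its cluster entry.

```python
from __future__ import annotations


def consul_scrape_configs(pillar: dict) -> list[dict]:
    """Build the scrape_configs list the prometheus.yml.j2 template would render."""
    configs: list[dict] = [
        {"job_name": "prometheus", "static_configs": [{"targets": ["localhost:9090"]}]}
    ]
    for cluster in pillar.get("prometheus", {}).get("consul_clusters", []):
        # One `keep` rule per tag: drop any target that lacks the tag.
        relabel = [
            {
                "source_labels": ["__meta_consul_tags"],
                "regex": f".*,{tag['name']},.*",
                "action": "keep",
            }
            for tag in cluster["tags"]
        ]
        # Finally, set the job label to the Consul service name.
        relabel.append({"source_labels": ["__meta_consul_service"], "target_label": "job"})
        configs.append(
            {
                "job_name": cluster["job"],
                "consul_sd_configs": [{"server": s} for s in cluster["servers"]],
                "relabel_configs": relabel,
            }
        )
    return configs
```

With the consul-dc pillar above, this returns two jobs, `prometheus` and `consul-dc`. The second has three Consul servers and a single keep rule for the prometheus tag.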

You could expand the template to include different metrics paths or different scrape_interval times (i.e. how long between each attempt to pull metrics from a target), as seen below: -

{%- for cluster in pillar['prometheus']['consul_clusters'] %}

- job_name: '{{ cluster["job"] }}'

{%- if cluster['scrape_interval'] is defined %}

scrape_interval: {{ cluster['scrape_interval'] }}

{%- endif %}

{%- if cluster['metrics_path'] is defined %}

metrics_path: {{ cluster['metrics_path'] }}

{%- endif %}

Some exporters take longer to retrieve metrics than others (snmp_exporter is a good example, depending on the host(s) it targets), so you may want to increase the interval between scrapes for those jobs.

Other exporters use a different path than /metrics. For example, this Proxmox PVE exporter uses the path /pve (i.e. http://192.168.0.1:9221/pve rather than http://192.168.0.1:9221/metrics) so the Prometheus job would need to know to try a different path when attempting to gather metrics.

You can then separate out these jobs using different Consul tags (e.g. prometheus-long or prometheus-proxmox) so that the additional jobs target only the hosts and exporters with those tags, and are ignored by the other jobs.
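Tag separation works because of how Prometheus builds `__meta_consul_tags`. It joins the service's tags with the tag separator (a comma by default) and also adds the separator at the start and end. Relabel regexes are fully anchored. The Python sketch below models that behaviour to show why `.*,prometheus,.*` keeps a service tagged `prometheus` but not one tagged only `prometheus-long`. Python's `fullmatch` stands in for Prometheus's anchored RE2 matching, which is equivalent for a simple pattern like this.

```python
from __future__ import annotations

import re


def consul_tags_label(tags: list[str], sep: str = ",") -> str:
    """Model of __meta_consul_tags: tags joined by `sep`, with `sep` at both ends."""
    return sep + sep.join(tags) + sep


def keep_target(tags: list[str], keep_tag: str) -> bool:
    """Apply a `keep` rule with regex .*,<tag>,.* to a service's tags."""
    pattern = re.compile(rf".*,{re.escape(keep_tag)},.*")
    # Prometheus anchors relabel regexes, so this must match the whole label.
    return pattern.fullmatch(consul_tags_label(tags)) is not None
```

For example, `keep_target(["prometheus-long"], "prometheus")` is False, so a `prometheus-long` job and the standard `prometheus` job never scrape the same service twice.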

Prometheus Targets

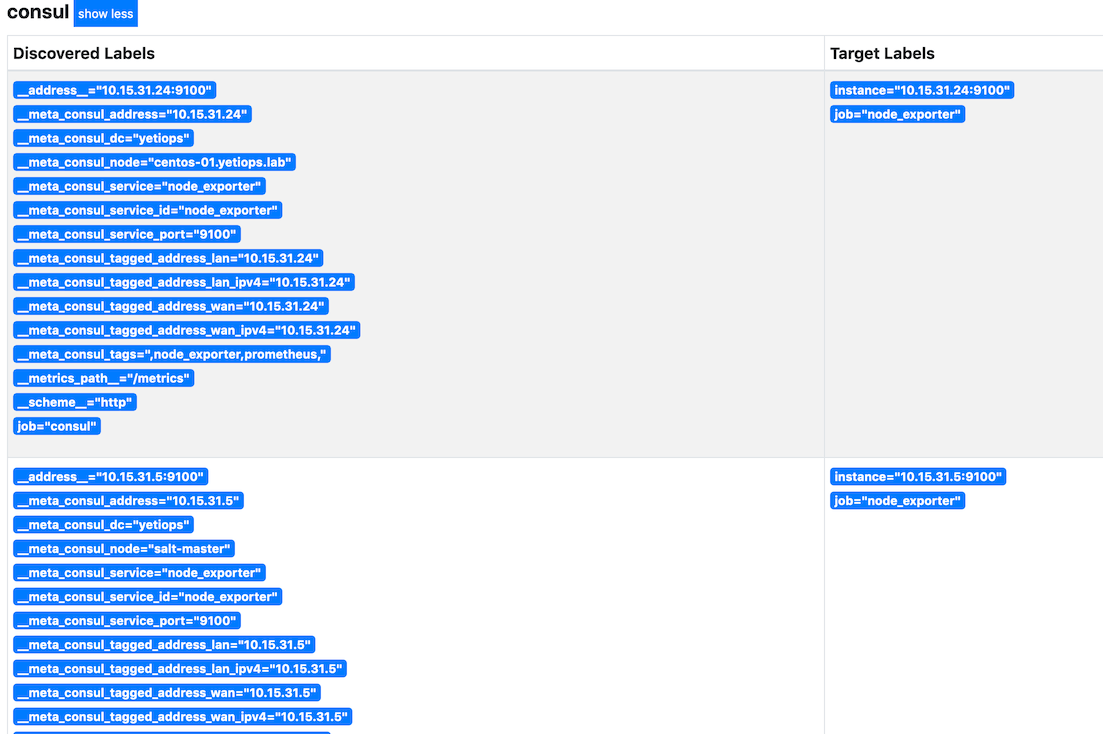

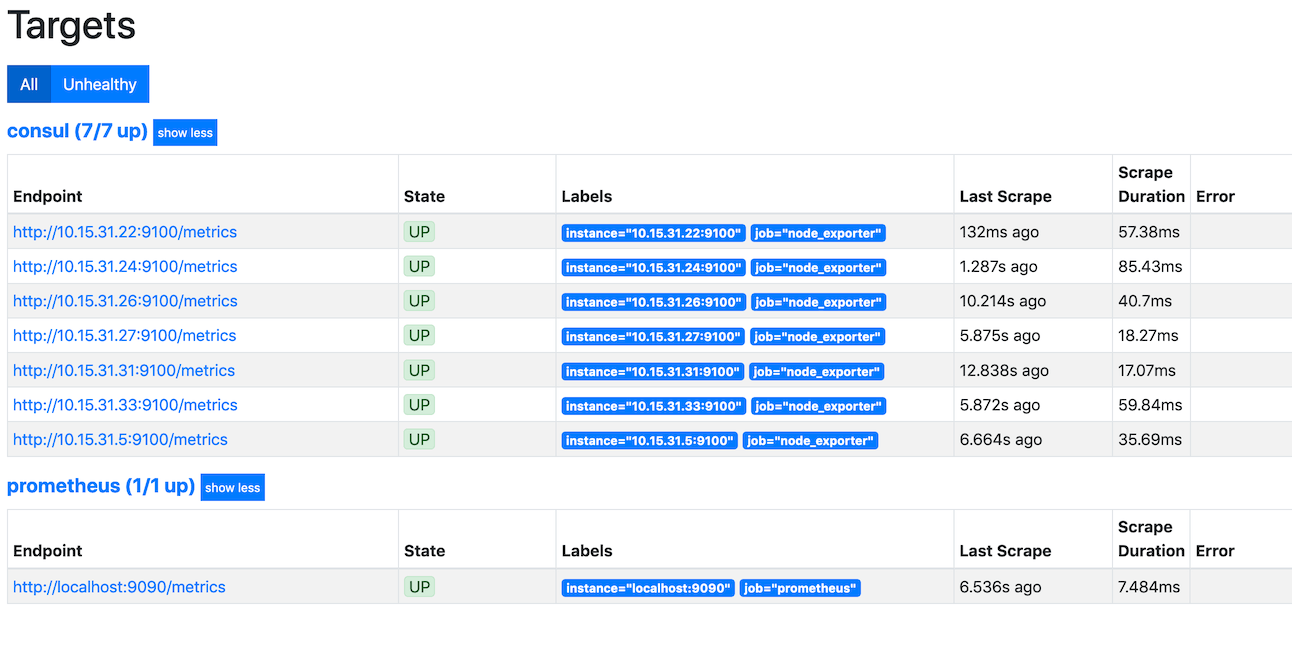

After we have configured all of the above, we should be able to see all of the hosts in Prometheus: -

There they are!

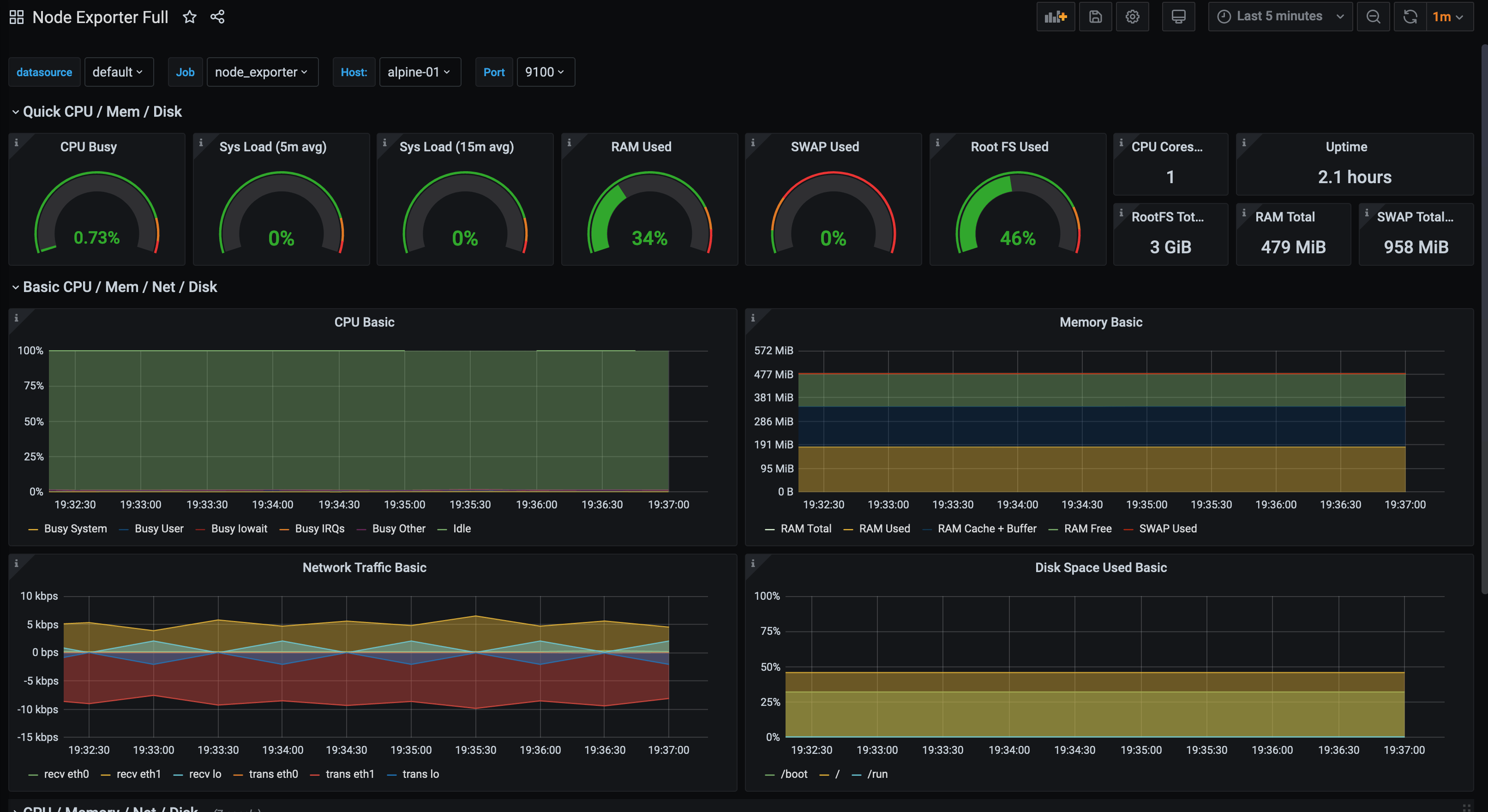

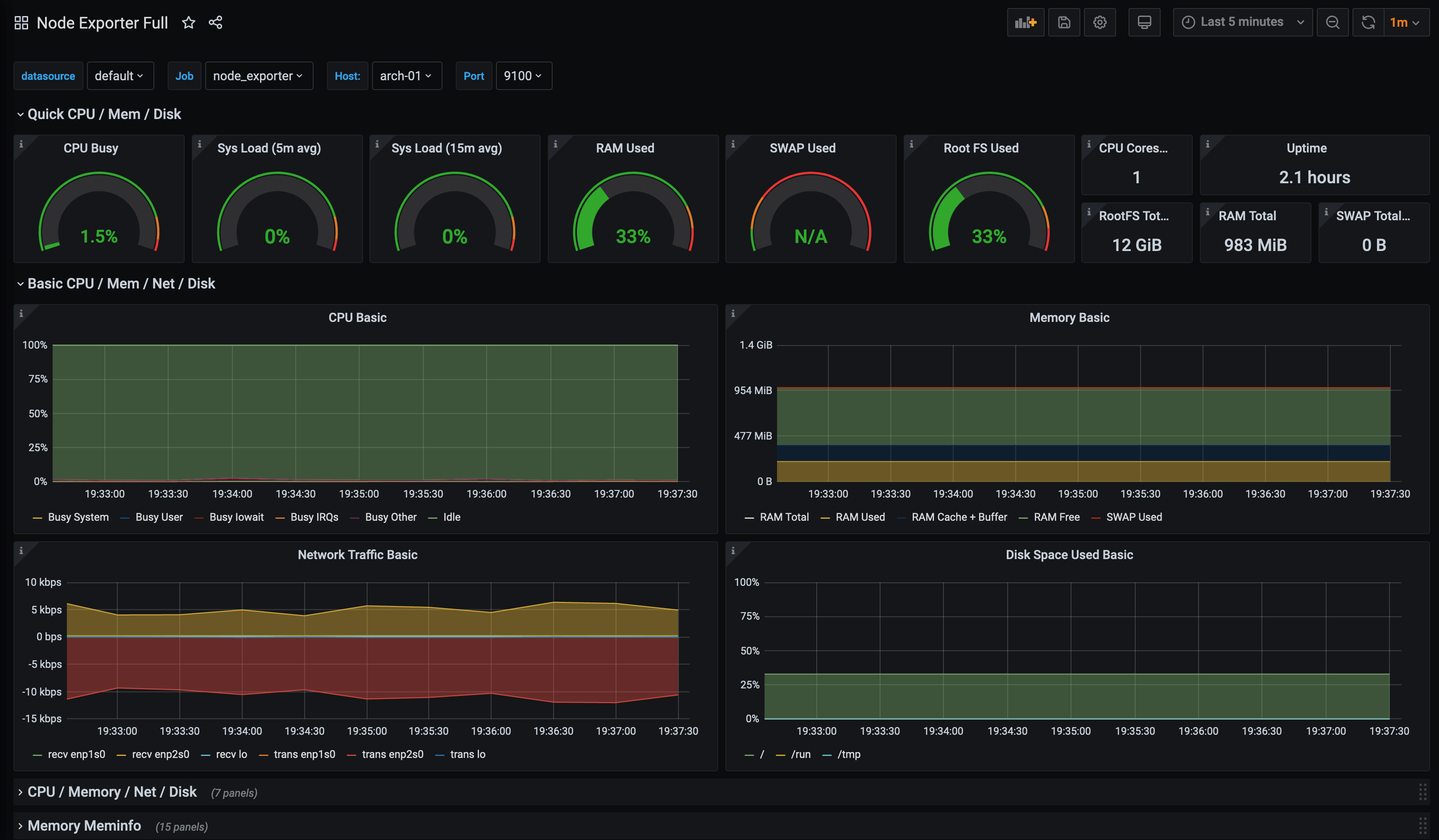

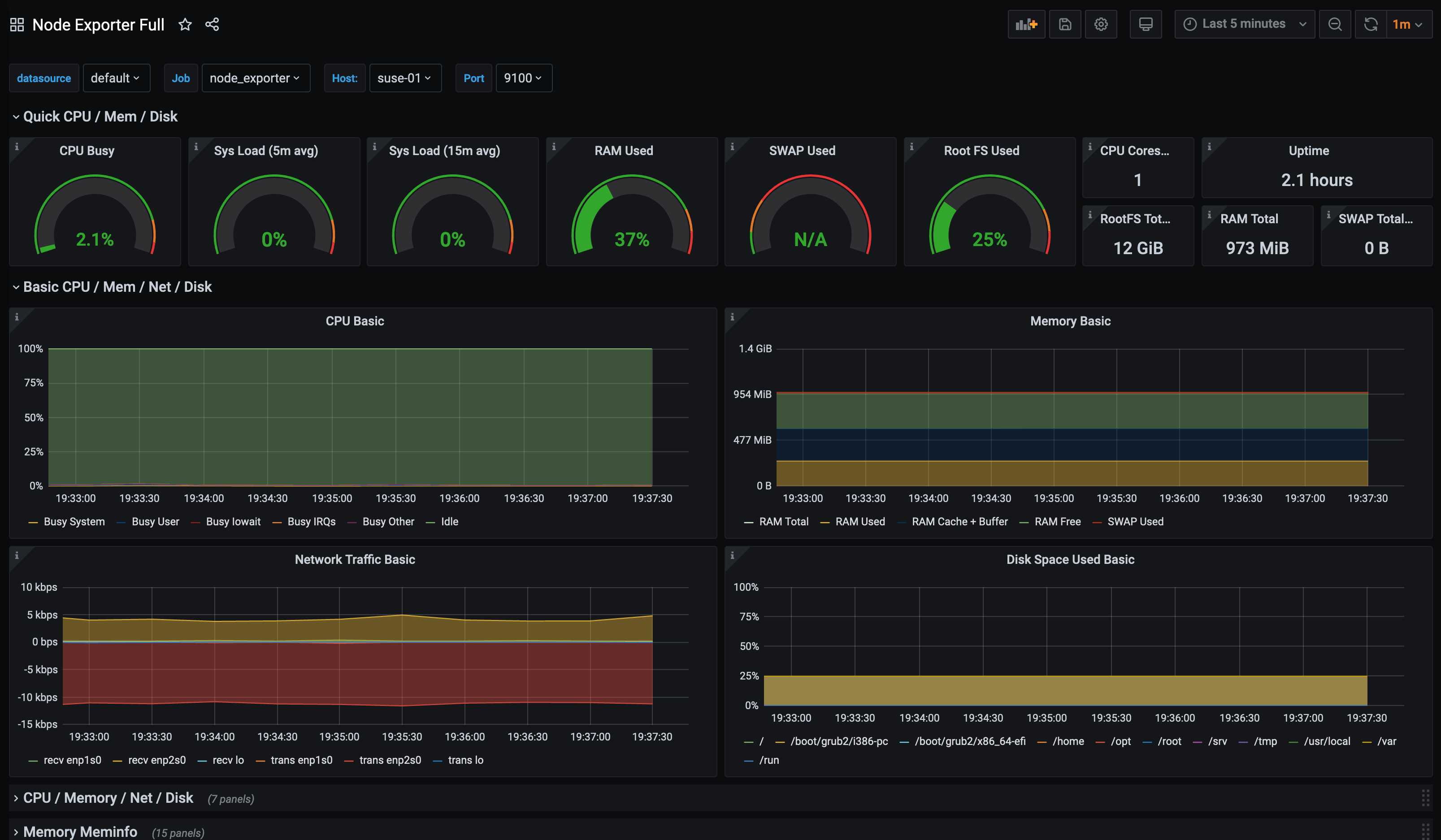

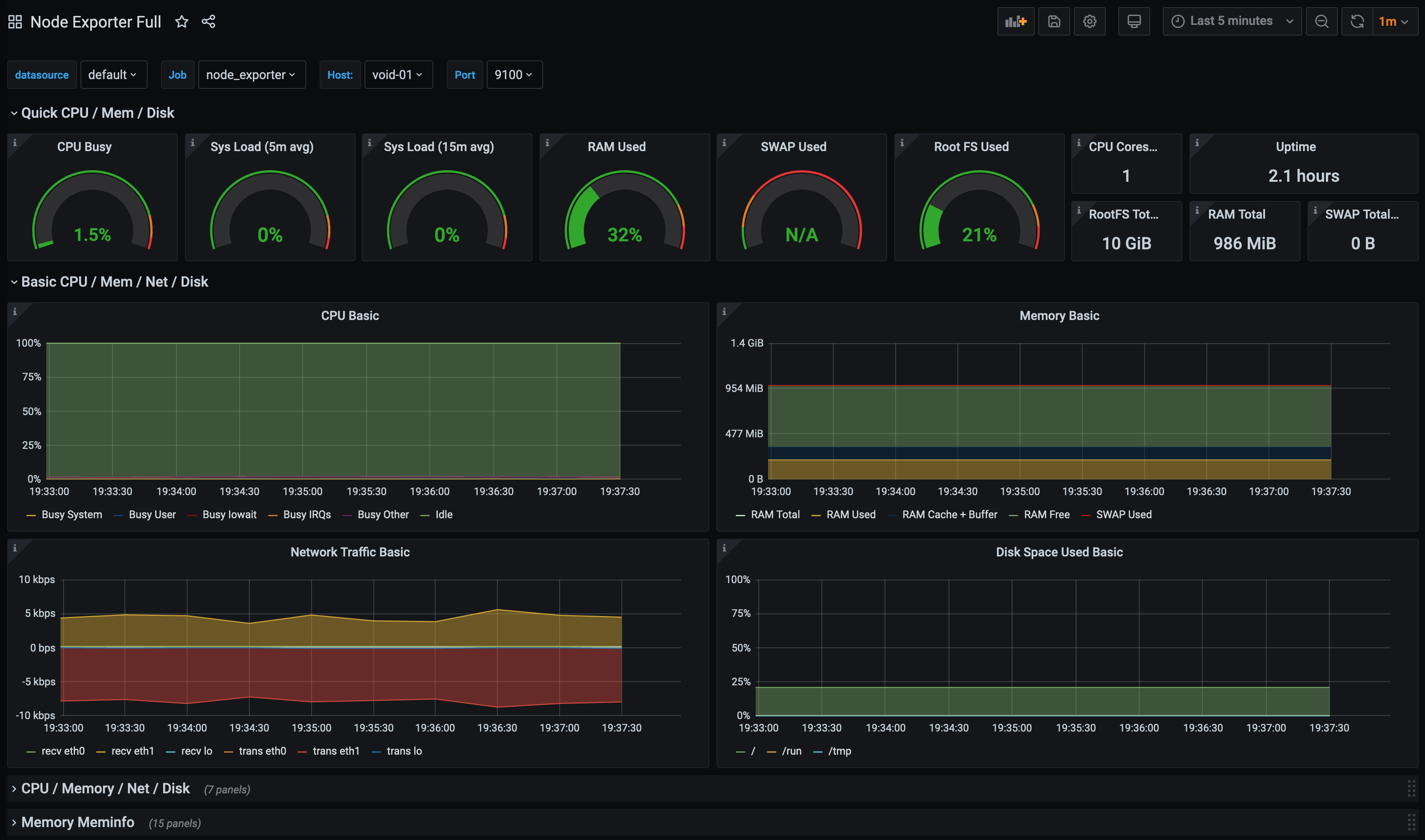

We can then use Grafana (with Prometheus as a data source) to create dashboards from Prometheus metrics. Alternatively, you can make use of existing Grafana dashboards created by other Grafana users. A good starting point for using with Prometheus’s Node Exporter is this one by rfrail3: -

Alpine Linux

Arch Linux

OpenSUSE Linux

Void Linux

Git Repository

All of the states (as well as those for future posts, if you want a quick preview) are available in my Salt Lab repository.

Summary

One of the joys of configuration management is that when you add new hosts into your infrastructure, you have states/playbooks/recipes that deploy your base packages, manage users and more. This means you no longer have to do all of this manually every time you add a new host.

If you then also deploy Consul (and the appropriate Consul service declarations) as we have above, these additional hosts are ingested into your monitoring system automatically too. The benefits of this are massive: it saves time and reduces mistakes (forgetting to add hosts into monitoring, deploying the wrong type of monitoring, etc.).

In the next post in this series, we will cover how you deploy SaltStack on a Windows host, which will then deploy Consul and the Prometheus Windows Exporter.

devops monitoring prometheus consul saltstack linux

technical prometheus monitoring config management

8025 Words

2020-06-15 12:19 +0000