
Pulumi: Deploying infrastructure on other providers

In a previous post, I covered using Pulumi for Infrastructure-as-Code as an alternative to Terraform. The post focussed on building an AWS EC2 instance (a virtual machine in AWS’s cloud) to demonstrate the differences not only between Terraform and Pulumi, but also between using Python, Go or TypeScript with Pulumi.

In this post, I am going to cover building machines on four different providers, all using Go. As mentioned in the previous post, Go is my favourite language to write in, so defining infrastructure using it is an enjoyable experience. However, the approach used could be applied to any of the other supported languages too (Python, TypeScript, JavaScript or .NET).

Providers

The providers we are going to build infrastructure on are: -

- Digital Ocean - As covered in this post using Terraform

- Hetzner Cloud - As covered in this post using Terraform

- We also are now using the new Hetzner Firewalls feature (which wasn’t available when writing the previous post)

- Equinix Metal - Formerly known as Packet, providing bare-metal as a service with a very Cloud-like experience

- Linode - Linux-focussed VPSs, Kubernetes Clusters, Object Storage and more

I chose these for a variety of reasons, but mostly to show the breadth of support that Pulumi has for a number of providers, as well as making it clear that you don’t need to be running complex cloud infrastructure to make use of Pulumi.

Digital Ocean

Pulumi Documentation: here

If you don’t have an API key with Digital Ocean already, you can follow the instructions here to create a project and generate a key for use with Pulumi. You can then choose whether to store your token in the Pulumi configuration, or to expose it as an environment variable that Pulumi will use.

Run the following to create a stack and project for the code: -

$ pulumi new digitalocean-go --name do-go

This will ask for a name of the stack. I chose staging, but this is entirely up to you. To add your API token to the configuration, run the following: -

$ pulumi config set digitalocean:token XXXXXXXXXXXXXX --secret

This will be stored in your configuration as an encrypted secret. I prefer to use environment variables, exporting it like so: -

$ export DIGITALOCEAN_TOKEN="XXXXXXXXXXXXXX"

In addition, we’ll set our common_name variable, to be used across our resources: -

$ pulumi config set common_name yetiops-prom

Now we can build our infrastructure.

The code

Like in the previous Digital Ocean post, we will build the following: -

- A Digital Ocean Droplet (VPS) running Ubuntu 20.04, with the Prometheus node_exporter installed (using cloud-config)

- An SSH key that will be added to the VPS

- Some tags assigned to the instance

- A firewall to limit what can and cannot communicate with our instance

First, we reference our common_name variable to be used by the resources: -

conf := config.New(ctx, "")

commonName := conf.Require("common_name")

After this, we will define our cloudconfig configuration as we did for our AWS EC2 in the previous Pulumi post.

I have changed the approach slightly, in that our template is now stored in a separate file (rather than a multi-line variable). The location is also defined using another configuration variable, set with pulumi config set cloud_init_path "files/ubuntu.tpl". Now we can update the configuration and location without requiring code changes in future.

cloudInitPath := conf.Require("cloud_init_path")

cloudInitScript, err := ioutil.ReadFile(cloudInitPath)

if err != nil {

return err

}

cloudInitContents := string(cloudInitScript)

b64encEnable := false

gzipEnable := false

contentType := "text/cloud-config"

fileName := "init.cfg"

cloudconfig, err := cloudinit.LookupConfig(ctx, &cloudinit.LookupConfigArgs{

Base64Encode: &b64encEnable,

Gzip: &gzipEnable,

Parts: []cloudinit.GetConfigPart{

cloudinit.GetConfigPart{

Content: cloudInitContents,

ContentType: &contentType,

Filename: &fileName,

},

},

}, nil)

if err != nil {

return err

}

If we decide we want to install more packages, add users, or use any other feature of cloudinit, we update the template file instead.

Next we define an SSH key that we will use to login to this instance: -

user, err := user.Current()

if err != nil {

return err

}

sshkey_path := fmt.Sprintf("%v/.ssh/id_rsa.pub", user.HomeDir)

sshkey_file, err := ioutil.ReadFile(sshkey_path)

if err != nil {

return err

}

sshkey_contents := string(sshkey_file)

sshkey, err := digitalocean.NewSshKey(ctx, commonName, &digitalocean.SshKeyArgs{

Name: pulumi.String(commonName),

PublicKey: pulumi.String(sshkey_contents),

})

if err != nil {

return err

}

This is very similar to what was defined in our AWS post, with just a couple of field names changed.

Many of the Pulumi providers use very similar definitions, fields and arguments, meaning that we can reuse a lot of code across different providers. It also means that we could create reusable functions/packages that can be imported into the stacks, with only the Pulumi-specific code in our main.go files. This cuts down on duplication, and allows us to make changes to a function in one place, with all dependent Pulumi stacks benefiting from the change.
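As a rough idea of what one of these shared functions could look like, here is a minimal sketch of an SSH key helper that uses only the Go standard library. The names publicKeyPath and readPublicKey are my own for illustration and are not part of Pulumi, and the sketch assumes Go 1.16 or later (for os.ReadFile).

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"strings"
)

// publicKeyPath builds the path to a public key inside a user's .ssh directory.
// (Hypothetical helper name, used here for illustration only.)
func publicKeyPath(homeDir, keyName string) string {
	return filepath.Join(homeDir, ".ssh", keyName)
}

// readPublicKey returns the contents of the key file with surrounding
// whitespace removed, or an error that names the file that failed.
func readPublicKey(homeDir, keyName string) (string, error) {
	path := publicKeyPath(homeDir, keyName)
	raw, err := os.ReadFile(path)
	if err != nil {
		return "", fmt.Errorf("reading SSH public key %s: %w", path, err)
	}
	return strings.TrimSpace(string(raw)), nil
}

func main() {
	home, err := os.UserHomeDir()
	if err != nil {
		fmt.Println("could not find home directory:", err)
		return
	}
	key, err := readPublicKey(home, "id_rsa.pub")
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("loaded key of length", len(key))
}
```

Each stack could then pass the returned string to its provider's SSH key resource, leaving only the provider-specific call in main.go.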

We use the same function we defined before to get our IP address: -

func getMyIp() (string, error) {

resp, err := http.Get("https://ifconfig.co")

if err != nil {

return "", err

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

return "", err

}

MyIp := strings.TrimSuffix(string(body), "\n")

return MyIp, nil

}

[...]

myIp, err := getMyIp()

if err != nil {

return err

}

myIpCidr := fmt.Sprintf("%v/32", myIp)

This retrieves our public IPv4 address, allowing us to limit what machine(s) will be able to login to the Droplet.
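The function above trusts whatever the lookup service returns. As a hedged sketch (not what the stacks in this post use), the version below adds a timeout, checks the HTTP status, and validates the response as an IP address before building the CIDR. The names toHostCIDR and fetchHostCIDR are hypothetical, the /128 handling for IPv6 is my own addition, and main uses a local test server with a documentation address instead of a real lookup service so that it runs offline. It assumes Go 1.16 or later (for io.ReadAll).

```go
package main

import (
	"fmt"
	"io"
	"net"
	"net/http"
	"net/http/httptest"
	"strings"
	"time"
)

// toHostCIDR validates raw as an IP address and returns it as a
// single-host CIDR: /32 for IPv4, /128 for IPv6.
func toHostCIDR(raw string) (string, error) {
	ip := net.ParseIP(strings.TrimSpace(raw))
	if ip == nil {
		return "", fmt.Errorf("%q is not an IP address", raw)
	}
	if v4 := ip.To4(); v4 != nil {
		return v4.String() + "/32", nil
	}
	return ip.String() + "/128", nil
}

// fetchHostCIDR asks the lookup service at url for our public address.
func fetchHostCIDR(url string) (string, error) {
	client := &http.Client{Timeout: 10 * time.Second}
	resp, err := client.Get(url)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("lookup service returned %s", resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return toHostCIDR(string(body))
}

func main() {
	// A local stand-in for the lookup service, so the sketch runs offline.
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "203.0.113.10")
	}))
	defer srv.Close()

	cidr, err := fetchHostCIDR(srv.URL)
	if err != nil {
		fmt.Println("lookup failed:", err)
		return
	}
	fmt.Println(cidr)
}
```

Validating the response means that an HTML error page or an empty body fails the run early, rather than ending up in a firewall rule.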

Next, we define tags that we want to apply to the Droplet: -

promtag, err := digitalocean.NewTag(ctx, "prometheus", &digitalocean.TagArgs{

Name: pulumi.String("prometheus"),

})

if err != nil {

return err

}

nodeex_tag, err := digitalocean.NewTag(ctx, "node_exporter", &digitalocean.TagArgs{

Name: pulumi.String("node_exporter"),

})

if err != nil {

return err

}

Now that these are defined, we can configure our Droplet: -

droplet, err := digitalocean.NewDroplet(ctx, commonName, &digitalocean.DropletArgs{

Name: pulumi.String(commonName),

Image: pulumi.String("ubuntu-20-04-x64"),

Region: pulumi.String("fra1"),

Size: pulumi.String("s-1vcpu-1gb"),

UserData: pulumi.String(cloudconfig.Rendered),

SshKeys: pulumi.StringArray{

pulumi.StringOutput(sshkey.Fingerprint),

},

Tags: pulumi.StringArray{

promtag.ID(),

nodeex_tag.ID(),

},

})

if err != nil {

return err

}

This is very similar to how we would define the EC2 in the previous Pulumi post. The main difference is that Digital Ocean have a validated set of images that you choose, rather than a vast array of AMIs that practically anyone can create. We also must supply tags as resources rather than just key-value pairs like in AWS.

Finally, we define our firewall: -

droplet_id := pulumi.Sprintf("%v", droplet.ID())

_, err = digitalocean.NewFirewall(ctx, commonName, &digitalocean.FirewallArgs{

Name: pulumi.String(commonName),

DropletIds: pulumi.IntArray{

droplet_id.ApplyInt(func(id string) int {

var idInt int

idInt, err := strconv.Atoi(id)

if err != nil {

fmt.Println(err)

return idInt

}

return idInt

}),

},

InboundRules: digitalocean.FirewallInboundRuleArray{

digitalocean.FirewallInboundRuleArgs{

Protocol: pulumi.String("tcp"),

PortRange: pulumi.String("22"),

SourceAddresses: pulumi.StringArray{

pulumi.String(myIpCidr),

},

},

digitalocean.FirewallInboundRuleArgs{

Protocol: pulumi.String("tcp"),

PortRange: pulumi.String("9100"),

SourceAddresses: pulumi.StringArray{

pulumi.String(myIpCidr),

},

},

},

OutboundRules: digitalocean.FirewallOutboundRuleArray{

digitalocean.FirewallOutboundRuleArgs{

Protocol: pulumi.String("tcp"),

PortRange: pulumi.String("1-65535"),

DestinationAddresses: pulumi.StringArray{

pulumi.String("0.0.0.0/0"),

pulumi.String("::/0"),

},

},

digitalocean.FirewallOutboundRuleArgs{

Protocol: pulumi.String("udp"),

PortRange: pulumi.String("1-65535"),

DestinationAddresses: pulumi.StringArray{

pulumi.String("0.0.0.0/0"),

pulumi.String("::/0"),

},

},

digitalocean.FirewallOutboundRuleArgs{

Protocol: pulumi.String("icmp"),

DestinationAddresses: pulumi.StringArray{

pulumi.String("0.0.0.0/0"),

pulumi.String("::/0"),

},

},

},

})

if err != nil {

return err

}

There are a few parts to explain here. We create this after the Droplet because Digital Ocean Firewalls require a list of Droplets they apply to, rather than telling the Droplet what firewalls apply to it. This is a subtle difference from AWS, Azure or other providers, but worth taking into account.

Also, the Digital Ocean provider returns the ID of the Droplet as a Go type of pulumi.IDOutput, but we need to supply this to the NewFirewall function as an integer. To translate between the two, we need to first turn the IDOutput into a StringOutput using: -

droplet_id := pulumi.Sprintf("%v", droplet.ID())

After this, we need to convert this into an IntOutput. This is achieved using Pulumi’s Apply functionality. The Apply function takes the StringOutput value and turns it into another form of Output (in this case, IntOutput). The reason that this doesn’t return a standard Go int is that the Output also maintains the dependencies and information associated with the original Output value (including when it will be computed and what it refers to). Without this, the value could be read before it is available, and any history/attributes of the original Output value would be lost.

There is a lot to process here, and it took me a few attempts with different functions to find the right way to do this. Once you realise the motivation behind using the Apply functions rather than just raw values though, it becomes easier to understand how to build them.

The code for this is: -

DropletIds: pulumi.IntArray{

droplet_id.ApplyInt(func(id string) int {

var idInt int

idInt, err := strconv.Atoi(id)

if err != nil {

fmt.Println(err)

return idInt

}

return idInt

}),

The pulumi.IntArray declaration expects an array of Pulumi-typed integers (e.g. pulumi.Int, pulumi.IntOutput). Supplying only droplet_id will fail, as it is of type pulumi.StringOutput. Instead we take the droplet_id variable, and use the Pulumi ApplyInt function against it. This will return a pulumi.IntOutput type as long as the function it runs returns an integer.

The function itself has an input of id string (i.e. the value of droplet_id), and returns an int (Integer). We use the strconv package to convert the String to an Int. We need to define the var idInt int variable as we must return an integer, even if the conversion failed. Defining the integer idInt means that the return value is always an Int, it just may be a zero-value Int.

So long as the string conversion succeeded, we return the ID of the droplet as an integer, satisfying the requirement in the pulumi.IntArray.
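The conversion itself can also be pulled out into a small named function, which makes it easier to test on its own. This is only a sketch under my own assumptions: parseResourceID is a hypothetical name rather than anything Pulumi provides, and the ID in main is a made-up example. The callback passed to ApplyInt could call it and decide what to do with the error.

```go
package main

import (
	"fmt"
	"strconv"
)

// parseResourceID converts a provider ID such as "231442133" to an int.
// It returns the conversion error to the caller instead of only printing it,
// so a zero value is never mistaken for a real ID.
func parseResourceID(id string) (int, error) {
	n, err := strconv.Atoi(id)
	if err != nil {
		return 0, fmt.Errorf("resource ID %q is not an integer: %w", id, err)
	}
	return n, nil
}

func main() {
	for _, id := range []string{"231442133", "not-a-number"} {
		n, err := parseResourceID(id)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println("parsed:", n)
	}
}
```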

Once you have created a few of these Apply functions, they become much easier to understand. The first few times though, you may need to play around with it, and even try and make it fail to see what is happening.
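If the idea of an Output still feels abstract, a toy model may help. The sketch below is not how Pulumi implements Outputs, and the Output type and Apply function here are my own simplified stand-ins (using Go 1.18 generics). It only shows the two properties discussed above: the value is computed later, and the result stays tied to the resource it came from.

```go
package main

import "fmt"

// Output is a toy stand-in for a Pulumi output: a value that is not known
// yet, plus the names of the resources it depends on.
type Output[T any] struct {
	resolve func() T
	deps    []string
}

// Apply returns a new Output that runs f once the original value resolves.
// Nothing is computed here; the dependency list is carried over unchanged.
func Apply[T, U any](o Output[T], f func(T) U) Output[U] {
	return Output[U]{
		resolve: func() U { return f(o.resolve()) },
		deps:    o.deps,
	}
}

func main() {
	// Pretend the provider only tells us the ID at deployment time.
	id := Output[string]{
		resolve: func() string { return "231442133" },
		deps:    []string{"droplet"},
	}
	length := Apply(id, func(s string) int { return len(s) })

	fmt.Println(length.deps)      // the result is still tied to the droplet
	fmt.Println(length.resolve()) // the function only runs at this point
}
```

A plain int could not carry the deps field or delay the computation, which is the reason the real Apply functions hand back another Output.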

With regards to the firewalls and rules themselves, currently you cannot create an “any protocol” rule. Instead, we must define three separate rules for TCP, UDP and ICMP. Also, if you specify TCP or UDP, a range of ports is also required, even if this is all ports. The rules can apply to both IPv4 and IPv6, which can reduce some duplication of code.
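The three near-identical outbound rules are a good candidate for generating in a loop. The sketch below uses a plain Go struct (rule and allowAllOutbound are hypothetical names, not Pulumi types) to show the shape of the data. In a real stack you would map each entry onto the provider's outbound rule arguments.

```go
package main

import "fmt"

// rule is a hypothetical plain-Go description of one firewall rule;
// it is not a Pulumi type.
type rule struct {
	Protocol  string
	PortRange string // left empty for ICMP, which has no ports
	Addresses []string
}

// allowAllOutbound expands "any protocol" into one rule each for TCP, UDP
// and ICMP, with every rule covering both IPv4 and IPv6 destinations.
func allowAllOutbound() []rule {
	anywhere := []string{"0.0.0.0/0", "::/0"}
	var rules []rule
	for _, proto := range []string{"tcp", "udp", "icmp"} {
		r := rule{Protocol: proto, Addresses: anywhere}
		if proto != "icmp" {
			// TCP and UDP need a port range, even when it is all ports.
			r.PortRange = "1-65535"
		}
		rules = append(rules, r)
	}
	return rules
}

func main() {
	for _, r := range allowAllOutbound() {
		fmt.Printf("%-4s ports=%q to=%v\n", r.Protocol, r.PortRange, r.Addresses)
	}
}
```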

Running Pulumi

We can now run pulumi up and see our Droplet build: -

$ pulumi up

Previewing update (staging)

View Live: https://app.pulumi.com/yetiops/do-go/staging/previews/59056b53-d392-4474-9c17-b9c1529de066

Type Name Plan

+ pulumi:pulumi:Stack do-go-staging create

+ ├─ digitalocean:index:SshKey yetiops-prom create

+ ├─ digitalocean:index:Tag prometheus create

+ ├─ digitalocean:index:Tag node_exporter create

+ ├─ digitalocean:index:Droplet yetiops-prom create

+ └─ digitalocean:index:Firewall yetiops-prom create

Resources:

+ 6 to create

Do you want to perform this update? yes

Updating (staging)

View Live: https://app.pulumi.com/yetiops/do-go/staging/updates/5

Type Name Status

+ pulumi:pulumi:Stack do-go-staging created

+ ├─ digitalocean:index:Tag node_exporter created

+ ├─ digitalocean:index:SshKey yetiops-prom created

+ ├─ digitalocean:index:Tag prometheus created

+ ├─ digitalocean:index:Droplet yetiops-prom created

+ └─ digitalocean:index:Firewall yetiops-prom created

Outputs:

publicIp: "68.183.219.18"

Resources:

+ 6 created

Duration: 57s

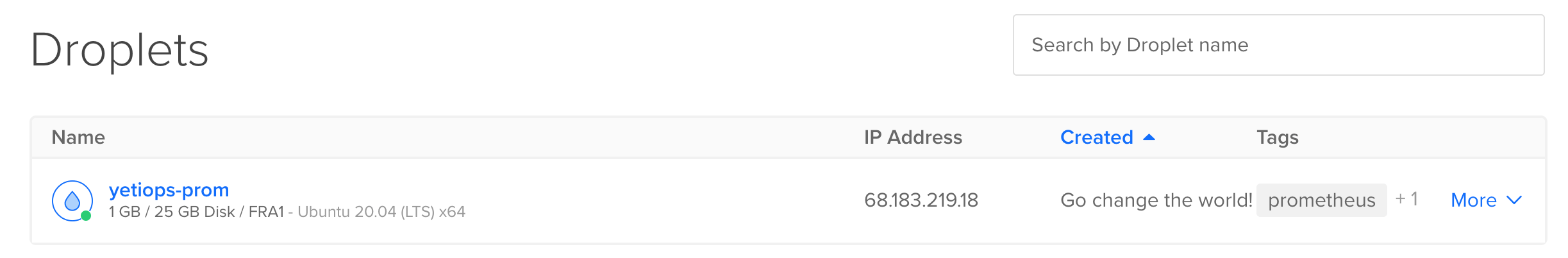

We can also see this in the Digital Ocean console: -

Let's log in to it and see what has been deployed: -

$ ssh -i ~/.ssh/id_rsa root@68.183.219.18

Welcome to Ubuntu 20.04.1 LTS (GNU/Linux 5.4.0-51-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information disabled due to load higher than 1.0

136 updates can be installed immediately.

64 of these updates are security updates.

To see these additional updates run: apt list --upgradable

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

root@yetiops-prom:~# ss -tlunp

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

udp UNCONN 0 0 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=492,fd=12))

tcp LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=492,fd=13))

tcp LISTEN 0 128 0.0.0.0:22 0.0.0.0:* users:(("sshd",pid=776,fd=3))

tcp LISTEN 0 4096 *:9100 *:* users:(("prometheus-node",pid=2277,fd=3))

tcp LISTEN 0 128 [::]:22 [::]:* users:(("sshd",pid=776,fd=4))

root@yetiops-prom:~# curl localhost:9100/metrics | grep -i yetiops

node_uname_info{domainname="(none)",machine="x86_64",nodename="yetiops-prom",release="5.4.0-51-generic",sysname="Linux",version="#56-Ubuntu SMP Mon Oct 5 14:28:49 UTC 2020"} 1

We’re in, the node_exporter is installed, and all is looking good. Note that unlike in AWS, the SSH keys we create apply to the root account, rather than the ubuntu account. In fact, no ubuntu account exists: -

root@yetiops-prom:~# groups ubuntu

groups: ‘ubuntu’: no such user

root@yetiops-prom:~#

All the code

The below is the full main.go file with our code in: -

package main

import (

"fmt"

"io/ioutil"

"net/http"

"os/user"

"strconv"

"strings"

"github.com/pulumi/pulumi-cloudinit/sdk/go/cloudinit"

"github.com/pulumi/pulumi-digitalocean/sdk/v3/go/digitalocean"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi/config"

)

func getMyIp() (string, error) {

resp, err := http.Get("https://ifconfig.co")

if err != nil {

return "", err

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

return "", err

}

MyIp := strings.TrimSuffix(string(body), "\n")

return MyIp, nil

}

func main() {

pulumi.Run(func(ctx *pulumi.Context) error {

conf := config.New(ctx, "")

commonName := conf.Require("common_name")

cloudInitPath := conf.Require("cloud_init_path")

cloudInitScript, err := ioutil.ReadFile(cloudInitPath)

if err != nil {

return err

}

cloudInitContents := string(cloudInitScript)

b64encEnable := false

gzipEnable := false

contentType := "text/cloud-config"

fileName := "init.cfg"

cloudconfig, err := cloudinit.LookupConfig(ctx, &cloudinit.LookupConfigArgs{

Base64Encode: &b64encEnable,

Gzip: &gzipEnable,

Parts: []cloudinit.GetConfigPart{

cloudinit.GetConfigPart{

Content: cloudInitContents,

ContentType: &contentType,

Filename: &fileName,

},

},

}, nil)

if err != nil {

return err

}

user, err := user.Current()

if err != nil {

return err

}

sshkey_path := fmt.Sprintf("%v/.ssh/id_rsa.pub", user.HomeDir)

sshkey_file, err := ioutil.ReadFile(sshkey_path)

if err != nil {

return err

}

sshkey_contents := string(sshkey_file)

sshkey, err := digitalocean.NewSshKey(ctx, commonName, &digitalocean.SshKeyArgs{

Name: pulumi.String(commonName),

PublicKey: pulumi.String(sshkey_contents),

})

if err != nil {

return err

}

myIp, err := getMyIp()

if err != nil {

return err

}

myIpCidr := fmt.Sprintf("%v/32", myIp)

promtag, err := digitalocean.NewTag(ctx, "prometheus", &digitalocean.TagArgs{

Name: pulumi.String("prometheus"),

})

if err != nil {

return err

}

nodeex_tag, err := digitalocean.NewTag(ctx, "node_exporter", &digitalocean.TagArgs{

Name: pulumi.String("node_exporter"),

})

if err != nil {

return err

}

droplet, err := digitalocean.NewDroplet(ctx, commonName, &digitalocean.DropletArgs{

Name: pulumi.String(commonName),

Image: pulumi.String("ubuntu-20-04-x64"),

Region: pulumi.String("fra1"),

Size: pulumi.String("s-1vcpu-1gb"),

UserData: pulumi.String(cloudconfig.Rendered),

SshKeys: pulumi.StringArray{

pulumi.StringOutput(sshkey.Fingerprint),

},

Tags: pulumi.StringArray{

promtag.ID(),

nodeex_tag.ID(),

},

})

if err != nil {

return err

}

droplet_id := pulumi.Sprintf("%v", droplet.ID())

_, err = digitalocean.NewFirewall(ctx, commonName, &digitalocean.FirewallArgs{

Name: pulumi.String(commonName),

DropletIds: pulumi.IntArray{

droplet_id.ApplyInt(func(id string) int {

var idInt int

idInt, err := strconv.Atoi(id)

if err != nil {

fmt.Println(err)

return idInt

}

return idInt

}),

},

InboundRules: digitalocean.FirewallInboundRuleArray{

digitalocean.FirewallInboundRuleArgs{

Protocol: pulumi.String("tcp"),

PortRange: pulumi.String("22"),

SourceAddresses: pulumi.StringArray{

pulumi.String(myIpCidr),

},

},

digitalocean.FirewallInboundRuleArgs{

Protocol: pulumi.String("tcp"),

PortRange: pulumi.String("9100"),

SourceAddresses: pulumi.StringArray{

pulumi.String(myIpCidr),

},

},

},

OutboundRules: digitalocean.FirewallOutboundRuleArray{

digitalocean.FirewallOutboundRuleArgs{

Protocol: pulumi.String("tcp"),

PortRange: pulumi.String("1-65535"),

DestinationAddresses: pulumi.StringArray{

pulumi.String("0.0.0.0/0"),

pulumi.String("::/0"),

},

},

digitalocean.FirewallOutboundRuleArgs{

Protocol: pulumi.String("udp"),

PortRange: pulumi.String("1-65535"),

DestinationAddresses: pulumi.StringArray{

pulumi.String("0.0.0.0/0"),

pulumi.String("::/0"),

},

},

digitalocean.FirewallOutboundRuleArgs{

Protocol: pulumi.String("icmp"),

DestinationAddresses: pulumi.StringArray{

pulumi.String("0.0.0.0/0"),

pulumi.String("::/0"),

},

},

},

})

if err != nil {

return err

}

ctx.Export("publicIp", droplet.Ipv4Address)

return nil

})

}

Hetzner

I have covered Hetzner’s Cloud offering in the past, around the time that Prometheus Service Discovery supported them as a provider. As with Digital Ocean, if you follow all the instructions in that post until you have an API key, you can then choose whether to expose it as an environment variable or as part of your stack’s configuration.

Project Initialization

The Pulumi CLI does not have an existing template or skeleton structure for a Hetzner project, so the process is a little more manual. Start with your directory of choice, and then run the following: -

$ pulumi stack init

Please enter your desired stack name.

To create a stack in an organization, use the format <org-name>/<stack-name> (e.g. `acmecorp/dev`).

stack name: (dev) yetiops/hetzner-go

Create a Pulumi.yaml file with the following contents: -

name: hetzner-staging

runtime: go

description: Testing Hetzner with Pulumi

You can change the name (this is the project name, not the stack name) and the description to whatever is relevant to the project. Ensure that the runtime value matches the code you are writing (i.e. if you are writing in Python, change it to python rather than leaving it as go).

Create a basic main.go file with the following: -

package main

import (

"fmt"

hcloud "github.com/pulumi/pulumi-hcloud/sdk/go/hcloud"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi/config"

)

func main() {

fmt.Println("test")

}

Finally, run go mod init hetzner-go (change hetzner-go to the directory name if it is different) and run go test. This will bring in all the required modules for creating Hetzner Cloud resources in Pulumi using Go.

If you want to store your Hetzner Cloud token in your configuration rather than as an environment variable, run pulumi config set hcloud:token XXXXXXXXXXXXXX --secret with the API token that you created. Otherwise, ensure you have an environment variable called HCLOUD_TOKEN exported for Pulumi to use.

Finally, we set our common_name variable with pulumi config set common_name yetiops-prom to be used when creating our resources.

The code

Similar to the Terraform-based post, we will create the following: -

- A Hetzner Cloud instance (VPS) running Ubuntu 20.04, with the Prometheus node_exporter installed (using cloud-config)

- An SSH key for our instance to use

- A firewall to limit what can and cannot communicate with our instance

As mentioned already, Hetzner now support creation of firewalls for Cloud instances, which were not available when writing the original Hetzner post.

The config and cloudinit sections are identical to what we create in Digital Ocean: -

conf := config.New(ctx, "")

commonName := conf.Require("common_name")

cloudInitPath := conf.Require("cloud_init_path")

cloudInitScript, err := ioutil.ReadFile(cloudInitPath)

if err != nil {

return err

}

cloudInitContents := string(cloudInitScript)

b64encEnable := false

gzipEnable := false

contentType := "text/cloud-config"

fileName := "init.cfg"

cloudconfig, err := cloudinit.LookupConfig(ctx, &cloudinit.LookupConfigArgs{

Base64Encode: &b64encEnable,

Gzip: &gzipEnable,

Parts: []cloudinit.GetConfigPart{

cloudinit.GetConfigPart{

Content: cloudInitContents,

ContentType: &contentType,

Filename: &fileName,

},

},

}, nil)

if err != nil {

return err

}

As before, we set the cloud_init_path variable with pulumi config set cloud_init_path "files/ubuntu.tpl" so that we can change the path and contents of the template at a later date if we choose, without changing the code itself.

Defining an SSH key is nearly identical with the Digital Ocean approach: -

user, err := user.Current()

if err != nil {

return err

}

sshkey_path := fmt.Sprintf("%v/.ssh/id_rsa.pub", user.HomeDir)

sshkey_file, err := ioutil.ReadFile(sshkey_path)

if err != nil {

return err

}

sshkey_contents := string(sshkey_file)

sshkey, err := hcloud.NewSshKey(ctx, commonName, &hcloud.SshKeyArgs{

Name: pulumi.String(commonName),

PublicKey: pulumi.String(sshkey_contents),

})

if err != nil {

return err

}

Other than slightly different names for the hcloud functions, there is no difference. This demonstrates why it would make sense to define central functions that these files call, with only the provider-specific details defined in the Pulumi stacks.

Again, we use our getMyIp function to retrieve our IP, which we then use in the firewall: -

func getMyIp() (string, error) {

resp, err := http.Get("https://ifconfig.co")

if err != nil {

return "", err

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

return "", err

}

MyIp := strings.TrimSuffix(string(body), "\n")

return MyIp, nil

}

[...]

myIp, err := getMyIp()

if err != nil {

return err

}

myIpCidr := fmt.Sprintf("%v/32", myIp)

firewall, err := hcloud.NewFirewall(ctx, commonName, &hcloud.FirewallArgs{

Name: pulumi.String(commonName),

Rules: hcloud.FirewallRuleArray{

hcloud.FirewallRuleArgs{

Direction: pulumi.String("in"),

Port: pulumi.String("22"),

Protocol: pulumi.String("tcp"),

SourceIps: pulumi.StringArray{

pulumi.String(myIpCidr),

},

},

hcloud.FirewallRuleArgs{

Direction: pulumi.String("in"),

Port: pulumi.String("9100"),

Protocol: pulumi.String("tcp"),

SourceIps: pulumi.StringArray{

pulumi.String(myIpCidr),

},

},

},

})

if err != nil {

return err

}

One point to note here is that the rules currently only support the in direction, meaning no outbound rules can be created. This will presumably be available as an option in the future, as Hetzner Firewalls are still classed as a beta feature.
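Since every rule here is inbound TCP from the same source, the list could be built from a set of port numbers. This is a sketch under my own assumptions: inboundRule and inboundTCP are hypothetical names using plain Go types rather than the hcloud ones, and the source address in main is a documentation example. It also checks the inputs before any rules are produced.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// inboundRule is a hypothetical plain-Go description of one rule;
// it is not a type from the hcloud provider.
type inboundRule struct {
	Direction string
	Protocol  string
	Port      string
	SourceIPs []string
}

// inboundTCP builds one inbound TCP rule per port, all limited to source.
// It rejects a source that is not a CIDR and ports outside 1-65535.
func inboundTCP(source string, ports ...int) ([]inboundRule, error) {
	if _, _, err := net.ParseCIDR(source); err != nil {
		return nil, fmt.Errorf("source %q is not a CIDR: %w", source, err)
	}
	rules := make([]inboundRule, 0, len(ports))
	for _, p := range ports {
		if p < 1 || p > 65535 {
			return nil, fmt.Errorf("port %d is out of range", p)
		}
		rules = append(rules, inboundRule{
			Direction: "in",
			Protocol:  "tcp",
			Port:      strconv.Itoa(p),
			SourceIPs: []string{source},
		})
	}
	return rules, nil
}

func main() {
	rules, err := inboundTCP("203.0.113.10/32", 22, 9100)
	if err != nil {
		fmt.Println(err)
		return
	}
	for _, r := range rules {
		fmt.Printf("%s %s/%s from %v\n", r.Direction, r.Protocol, r.Port, r.SourceIPs)
	}
}
```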

Finally, we define our instance: -

firewall_id := pulumi.Sprintf("%v", firewall.ID())

srv, err := hcloud.NewServer(ctx, commonName, &hcloud.ServerArgs{

Name: pulumi.String(commonName),

Image: pulumi.String("ubuntu-20.04"),

ServerType: pulumi.String("cx11"),

Labels: pulumi.Map{

"prometheus": pulumi.String("true"),

"node_exporter": pulumi.String("true"),

},

UserData: pulumi.String(cloudconfig.Rendered),

SshKeys: pulumi.StringArray{

sshkey.Name,

},

FirewallIds: pulumi.IntArray{

firewall_id.ApplyInt(func(id string) int {

var idInt int

idInt, err := strconv.Atoi(id)

if err != nil {

fmt.Println(err)

return idInt

}

return idInt

}),

},

})

if err != nil {

return err

}

As with the Digital Ocean Droplet, the firewall exposes its ID as a pulumi.IDOutput, but the FirewallIds array needs integers, so we use an Apply function to convert it. We turn the ID into a pulumi.StringOutput type, and then use an ApplyInt function to turn it into a pulumi.IntOutput type.

Running Pulumi

We can now run pulumi up and see our instance build: -

$ pulumi up

Previewing update (staging)

View Live: https://app.pulumi.com/yetiops/hetzner-staging/staging/previews/c72a380e-4be2-47ba-966f-06f5dccfbf32

Type Name Plan

+ pulumi:pulumi:Stack hetzner-staging-staging create

+ ├─ hcloud:index:SshKey yetiops-prom create

+ ├─ hcloud:index:Firewall yetiops-prom create

+ └─ hcloud:index:Server yetiops-prom create

Resources:

+ 4 to create

Do you want to perform this update? yes

Updating (staging)

View Live: https://app.pulumi.com/yetiops/hetzner-staging/staging/updates/15

Type Name Status

+ pulumi:pulumi:Stack hetzner-staging-staging created

+ ├─ hcloud:index:Firewall yetiops-prom created

+ ├─ hcloud:index:SshKey yetiops-prom created

+ └─ hcloud:index:Server yetiops-prom created

Outputs:

publicIP: "95.217.17.28"

Resources:

+ 4 created

Duration: 17s

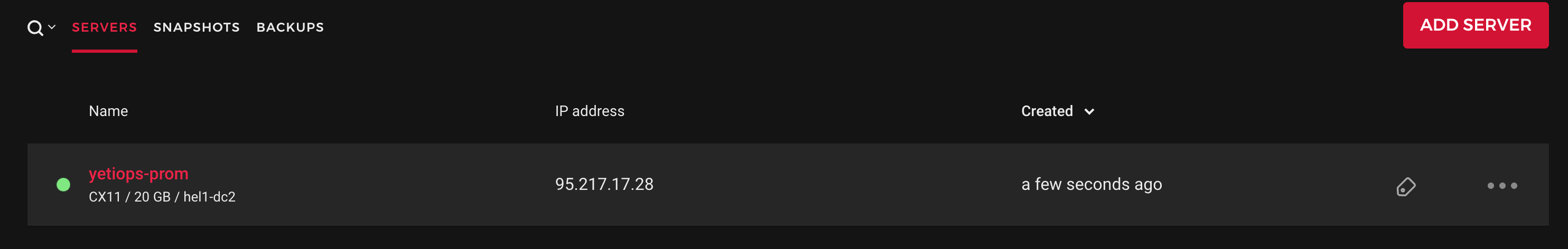

We can also see this in the Hetzner console: -

Now to login and see what has been deployed: -

$ ssh -i ~/.ssh/id_rsa root@95.217.17.28

Welcome to Ubuntu 20.04.2 LTS (GNU/Linux 5.4.0-66-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

root@yetiops-prom:~# ss -tlunp

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

udp UNCONN 0 0 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=402,fd=12))

udp UNCONN 0 0 95.217.17.28%eth0:68 0.0.0.0:* users:(("systemd-network",pid=400,fd=19))

tcp LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=402,fd=13))

tcp LISTEN 0 128 0.0.0.0:22 0.0.0.0:* users:(("sshd",pid=576,fd=3))

tcp LISTEN 0 4096 *:9100 *:* users:(("prometheus-node",pid=1199,fd=3))

tcp LISTEN 0 128 [::]:22 [::]:* users:(("sshd",pid=576,fd=4))

root@yetiops-prom:~# curl localhost:9100/metrics | grep -i yeti

node_uname_info{domainname="(none)",machine="x86_64",nodename="yetiops-prom",release="5.4.0-66-generic",sysname="Linux",version="#74-Ubuntu SMP Wed Jan 27 22:54:38 UTC 2021"} 1

As with the Digital Ocean Droplet, we login as root, and the ubuntu user does not exist. All looks good!

All the code

The below is the full main.go file with our code in: -

package main

import (

"fmt"

"io/ioutil"

"net/http"

"os/user"

"strconv"

"strings"

"github.com/pulumi/pulumi-cloudinit/sdk/go/cloudinit"

hcloud "github.com/pulumi/pulumi-hcloud/sdk/go/hcloud"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi/config"

)

func getMyIp() (string, error) {

resp, err := http.Get("https://ifconfig.co")

if err != nil {

return "", err

}

defer resp.Body.Close()

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

return "", err

}

MyIp := strings.TrimSuffix(string(body), "\n")

return MyIp, nil

}

func main() {

pulumi.Run(func(ctx *pulumi.Context) error {

conf := config.New(ctx, "")

commonName := conf.Require("common_name")

cloudInitPath := conf.Require("cloud_init_path")

cloudInitScript, err := ioutil.ReadFile(cloudInitPath)

if err != nil {

return err

}

cloudInitContents := string(cloudInitScript)

b64encEnable := false

gzipEnable := false

contentType := "text/cloud-config"

fileName := "init.cfg"

cloudconfig, err := cloudinit.LookupConfig(ctx, &cloudinit.LookupConfigArgs{

Base64Encode: &b64encEnable,

Gzip: &gzipEnable,

Parts: []cloudinit.GetConfigPart{

cloudinit.GetConfigPart{

Content: cloudInitContents,

ContentType: &contentType,

Filename: &fileName,

},

},

}, nil)

if err != nil {

return err

}

user, err := user.Current()

if err != nil {

return err

}

sshkey_path := fmt.Sprintf("%v/.ssh/id_rsa.pub", user.HomeDir)

sshkey_file, err := ioutil.ReadFile(sshkey_path)

if err != nil {

return err

}

sshkey_contents := string(sshkey_file)

sshkey, err := hcloud.NewSshKey(ctx, commonName, &hcloud.SshKeyArgs{

Name: pulumi.String(commonName),

PublicKey: pulumi.String(sshkey_contents),

})

if err != nil {

return err

}

myIp, err := getMyIp()

if err != nil {

return err

}

myIpCidr := fmt.Sprintf("%v/32", myIp)

firewall, err := hcloud.NewFirewall(ctx, commonName, &hcloud.FirewallArgs{

Name: pulumi.String(commonName),

Rules: hcloud.FirewallRuleArray{

hcloud.FirewallRuleArgs{

Direction: pulumi.String("in"),

Port: pulumi.String("22"),

Protocol: pulumi.String("tcp"),

SourceIps: pulumi.StringArray{

pulumi.String(myIpCidr),

},

},

hcloud.FirewallRuleArgs{

Direction: pulumi.String("in"),

Port: pulumi.String("9100"),

Protocol: pulumi.String("tcp"),

SourceIps: pulumi.StringArray{

pulumi.String(myIpCidr),

},

},

},

})

if err != nil {

return err

}

firewall_id := pulumi.Sprintf("%v", firewall.ID())

srv, err := hcloud.NewServer(ctx, commonName, &hcloud.ServerArgs{

Name: pulumi.String(commonName),

Image: pulumi.String("ubuntu-20.04"),

ServerType: pulumi.String("cx11"),

Labels: pulumi.Map{

"prometheus": pulumi.String("true"),

"node_exporter": pulumi.String("true"),

},

UserData: pulumi.String(cloudconfig.Rendered),

SshKeys: pulumi.StringArray{

sshkey.Name,

},

FirewallIds: pulumi.IntArray{

firewall_id.ApplyInt(func(id string) int {

var idInt int

idInt, err := strconv.Atoi(id)

if err != nil {

fmt.Println(err)

return idInt

}

return idInt

}),

},

})

if err != nil {

return err

}

ctx.Export("publicIP", srv.Ipv4Address)

return nil

})

}

Equinix Metal

Equinix Metal, previously known as Packet, take a different approach to a lot of other providers. Most providers predominantly provide virtual machines as their base unit of compute. Some do offer bare metal, but as an option rather than as standard.

Equinix Metal instead offer bare metal as their base unit of compute. This means if you deploy a server instance, you will be provisioned a dedicated bare metal server. The cost is reflective of this, meaning you are usually talking dollars per hour rather than cents per hour, but you are not sharing compute resources (even at a hypervisor level) with other users.

Signing up

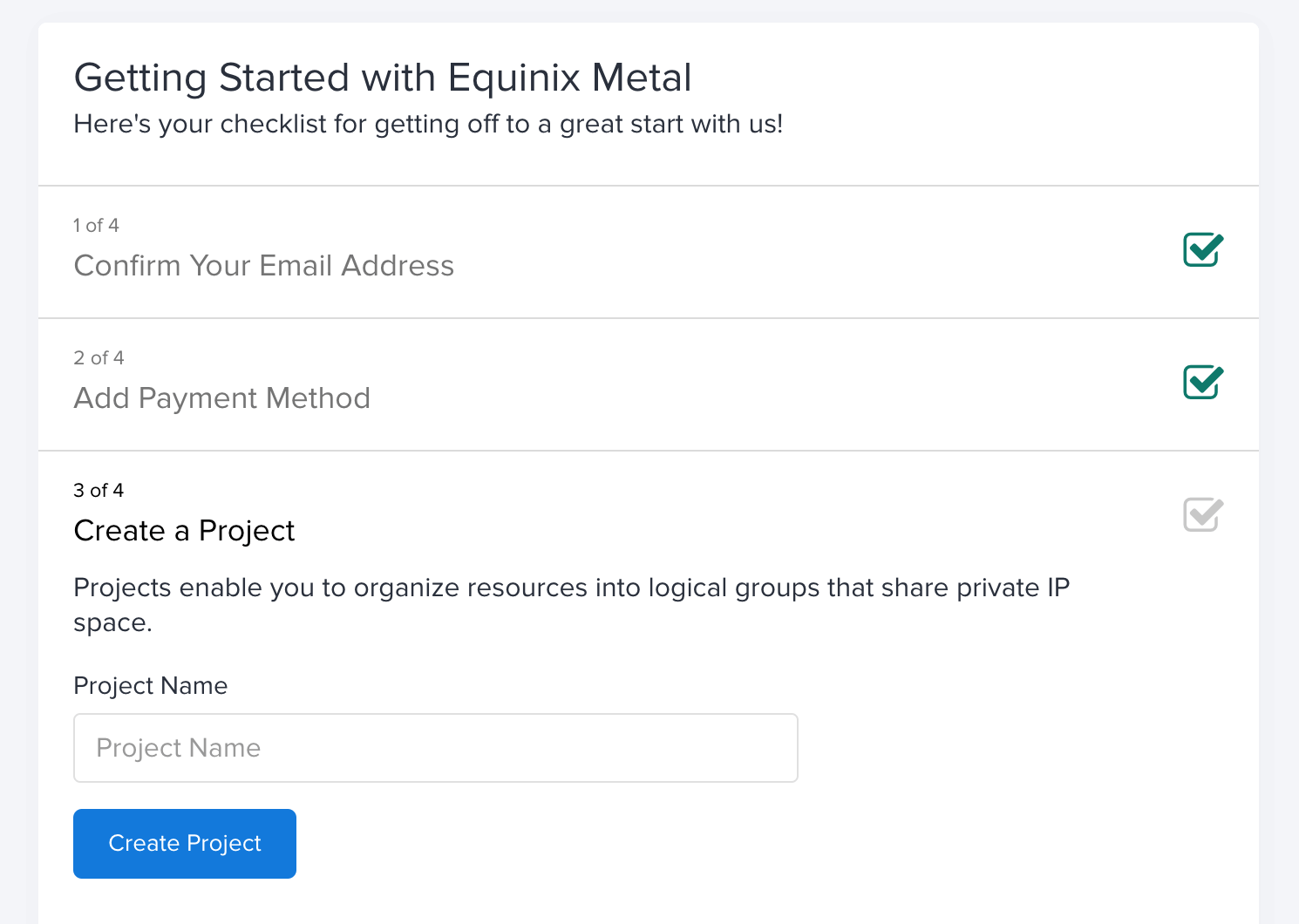

To sign up for an account with Equinix Metal, go to the Registration Page, supply all the required details, and you will be able to start an account. You will be prompted to create an Organization (this doesn’t have to be a registered business) and then you will be prompted to create a project (effectively a grouping of resources for an application/project/purpose).

You can create a project here, or you can define this within Pulumi.

Generate an API key

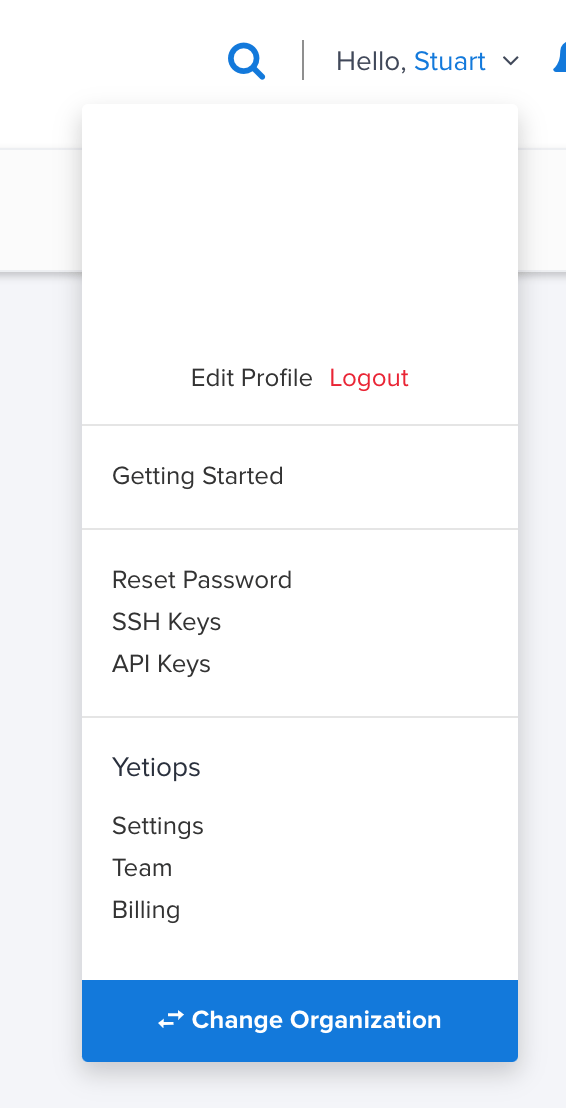

Like the other providers, you will need an API key to use Pulumi with Equinix Metal. Once you have created your account, go to the menu at the top right and select API Keys: -

In here, create an API key and name it (use a name that denotes what it is being used for, e.g. pulumi-api-key).

Now we can create our stack and use this API key.

Creating the stack and project

To create the stack and project, create a folder and run pulumi new equinix-metal-go --name basic-metal. Once this is done, you can define your API key as configuration using: -

$ pulumi config set equinix-metal:authToken XXXXXXXXXXXXXX --secret

Alternatively, you can expose it as an environment variable using export PACKET_AUTH_TOKEN=XXXXXXXXXXXXXX.

We then run pulumi config set common_name yetiops-prom to use across our resources, and now we can create some code!

The code

With Equinix Metal, we are going to create the following: -

- A project to create our resources in

- A bare metal server running Ubuntu 20.04 with the Prometheus node_exporter installed

- An SSH key to login to the server with

Rather than creating a server directly though, we will use a Spot Market request. Like AWS, Equinix Metal provide a Spot Market that makes available spare capacity (unused servers) at a cheaper price, with the understanding that if an On Demand (i.e. a standard server creation) or Reserved (i.e. a server that will be dedicated to a customer) request is made that requires this capacity, your usage of that server will be terminated to allow Equinix Metal to fulfil this request.

A practical application of this approach is using servers for jobs that are stateless/ephemeral (e.g. batch jobs that just need workers to churn through data, rather than a 24/7 available service). If your worker jobs are not time sensitive, then this allows a lot more compute capacity at a lower price, with the understanding that it is not guaranteed.

For our purposes, it just means we can create a server to demonstrate Pulumi without it costing too much!

Equinix Metal does not currently offer a firewall-like product that would be in front of your server(s), so you will need to deploy host-based firewalling. You can build BGP networks and Layer 2 networks though, so it is perfectly possible to build a Linux/FreeBSD-based firewall to sit in front of your instances, and have them all connect as if they are in a switched network behind the firewall(s).

As with the other providers, we define the config elements and cloudinit resource in the same way: -

conf := config.New(ctx, "")

commonName := conf.Require("common_name")

cloudInitPath := conf.Require("cloud_init_path")

cloudInitScript, err := ioutil.ReadFile(cloudInitPath)

if err != nil {

return err

}

cloudInitContents := string(cloudInitScript)

b64encEnable := false

gzipEnable := false

contentType := "text/cloud-config"

fileName := "init.cfg"

cloudconfig, err := cloudinit.LookupConfig(ctx, &cloudinit.LookupConfigArgs{

Base64Encode: &b64encEnable,

Gzip: &gzipEnable,

Parts: []cloudinit.GetConfigPart{

cloudinit.GetConfigPart{

Content: cloudInitContents,

ContentType: &contentType,

Filename: &fileName,

},

},

}, nil)

if err != nil {

return err

}

As noted previously, the cloud_init_path configuration allows us to make changes to the file externally without updating the code itself.
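As a side note, ioutil.ReadFile has been deprecated since Go 1.16 in favour of os.ReadFile. The following is a minimal sketch of reading the file with os.ReadFile and returning a more descriptive error. The helper name loadCloudInit, the empty-file check and the sample cloud-config contents are all assumptions made for illustration, not taken from the code in this post.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// loadCloudInit is a hypothetical helper that reads the file referenced by
// the cloud_init_path setting and wraps any failure with the path involved.
func loadCloudInit(path string) (string, error) {
	contents, err := os.ReadFile(path)
	if err != nil {
		return "", fmt.Errorf("reading cloud-init file %q: %w", path, err)
	}
	// Treating an empty file as an error is a choice made for this sketch.
	if len(contents) == 0 {
		return "", fmt.Errorf("cloud-init file %q is empty", path)
	}
	return string(contents), nil
}

func main() {
	// Write a placeholder cloud-config to a temporary directory, then read it back.
	dir, err := os.MkdirTemp("", "cloudinit-example")
	if err != nil {
		panic(err)
	}
	defer os.RemoveAll(dir)

	path := filepath.Join(dir, "init.cfg")
	sample := "#cloud-config\npackages:\n  - prometheus-node-exporter\n"
	if err := os.WriteFile(path, []byte(sample), 0o600); err != nil {
		panic(err)
	}

	contents, err := loadCloudInit(path)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(contents)
}
```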

After this, we create an SSH key resource, in much the same way as Digital Ocean and Hetzner: -

user, err := user.Current()

if err != nil {

return err

}

sshkey_path := fmt.Sprintf("%v/.ssh/id_rsa.pub", user.HomeDir)

sshkey_file, err := ioutil.ReadFile(sshkey_path)

if err != nil {

return err

}

sshkey_contents := string(sshkey_file)

sshkey, err := metal.NewSshKey(ctx, commonName, &metal.SshKeyArgs{

Name: pulumi.String(commonName),

PublicKey: pulumi.String(sshkey_contents),

})

if err != nil {

return err

}
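The path to the public key above is built with fmt.Sprintf. A small sketch of an alternative, assuming nothing beyond the standard library, is to build the path with filepath.Join and to trim the trailing newline that public key files usually end with. The helper names publicKeyPath and normalizePublicKey are hypothetical, and whether the trimming is required by any given provider is not something this post has verified.

```go
package main

import (
	"fmt"
	"path/filepath"
	"strings"
)

// publicKeyPath builds the path to the default RSA public key inside the
// given home directory, using the separator of the current platform.
func publicKeyPath(homeDir string) string {
	return filepath.Join(homeDir, ".ssh", "id_rsa.pub")
}

// normalizePublicKey removes leading and trailing whitespace (including the
// trailing newline) from the contents of a public key file.
func normalizePublicKey(raw string) string {
	return strings.TrimSpace(raw)
}

func main() {
	// Both values below are placeholders for illustration only.
	fmt.Println(publicKeyPath("/home/yetiops"))
	fmt.Println(normalizePublicKey("ssh-rsa AAAA-placeholder-key user@host\n"))
}
```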

After this, we define our project: -

project, err := metal.NewProject(ctx, "my-project", &metal.ProjectArgs{

Name: pulumi.String("yetiops-blog"),

})

if err != nil {

return err

}

This project is the grouping of our resources (similar to an Azure Resource Group, or a GCP Project).

Finally, we create the Spot Request. This requests a server based upon some instance parameters. We also specify the maximum cost we want to pay per server, as well as how many of them we want to provision: -

project_id := pulumi.Sprintf("%v", project.ID())

_, err = metal.NewSpotMarketRequest(ctx, commonName, &metal.SpotMarketRequestArgs{

ProjectId: project_id,

MaxBidPrice: pulumi.Float64(0.20),

Facilities: pulumi.StringArray{

pulumi.String("fr2"),

},

DevicesMin: pulumi.Int(1),

DevicesMax: pulumi.Int(1),

InstanceParameters: metal.SpotMarketRequestInstanceParametersArgs{

Hostname: pulumi.String(commonName),

BillingCycle: pulumi.String("hourly"),

OperatingSystem: pulumi.String("ubuntu_20_04"),

Plan: pulumi.String("c3.small.x86"),

UserSshKeys: pulumi.StringArray{

pulumi.StringOutput(sshkey.OwnerId),

},

Userdata: pulumi.String(cloudconfig.Rendered),

},

})

if err != nil {

return err

}

Similar to before, we need to turn the Project ID into a StringOutput rather than an IDOutput. We don’t need to use an Apply function here; instead, the pulumi.Sprintf function provides a value of type StringOutput that we can use directly.

Besides this, the main parts we configure are the MaxBidPrice (i.e. the most we want to pay for a server), which facility (similar to a region) we want to deploy our instance(s) in, and the minimum and maximum number of devices (in this case, both 1). This provides us with a bare metal server to manage.
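To make the effect of these settings concrete, here is a rough worked example of the most a request like this could cost in server charges. It is only a sketch: the spotRequest type and its methods are hypothetical, it assumes you are never charged more than your maximum bid per device per hour, and it ignores any other charges (such as bandwidth).

```go
package main

import "fmt"

// spotRequest is a hypothetical type holding the two settings that bound the
// cost of a Spot Market request.
type spotRequest struct {
	maxBidPrice float64 // most we will pay per device per hour (MaxBidPrice)
	devicesMax  int     // most devices the request may provision (DevicesMax)
}

// hourlyCeiling returns the highest possible hourly cost: every permitted
// device running at the maximum bid price.
func (r spotRequest) hourlyCeiling() float64 {
	return r.maxBidPrice * float64(r.devicesMax)
}

// ceilingFor returns the highest possible cost over the given number of hours.
func (r spotRequest) ceilingFor(hours float64) float64 {
	return r.hourlyCeiling() * hours
}

func main() {
	// The values used in this post: a bid of 0.20 and a single device.
	req := spotRequest{maxBidPrice: 0.20, devicesMax: 1}
	fmt.Printf("hourly ceiling: %.2f\n", req.hourlyCeiling())
	fmt.Printf("24 hour ceiling: %.2f\n", req.ceilingFor(24))
}
```

With a bid of 0.20 and one device, the ceiling works out at 0.20 per hour, or 4.80 if the server survives a full day.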

Running Pulumi

We can now run pulumi up and get ourselves a bare metal server: -

$ pulumi up

Previewing update (staging)

View Live: https://app.pulumi.com/yetiops/basic-metal/staging/previews/347cd557-8dd7-4188-9cf8-9eb5a78f3fd6

Type Name Plan

+ pulumi:pulumi:Stack basic-metal-staging create

+ ├─ equinix-metal:index:SshKey yetiops-prom create

+ ├─ equinix-metal:index:Project my-project create

+ └─ equinix-metal:index:SpotMarketRequest yetiops-prom create

Resources:

+ 4 to create

Do you want to perform this update? yes

Updating (staging)

View Live: https://app.pulumi.com/yetiops/basic-metal/staging/updates/11

Type Name Status Info

+ pulumi:pulumi:Stack basic-metal-staging created 7 messages

+ ├─ equinix-metal:index:SshKey yetiops-prom created

+ ├─ equinix-metal:index:Project my-project created

+ └─ equinix-metal:index:SpotMarketRequest yetiops-prom created

Diagnostics:

pulumi:pulumi:Stack (basic-metal-staging):

2021/04/12 12:47:12 [DEBUG] POST https://api.equinix.com/metal/v1/ssh-keys

2021/04/12 12:47:13 [DEBUG] POST https://api.equinix.com/metal/v1/projects

2021/04/12 12:47:14 [DEBUG] GET https://api.equinix.com/metal/v1/projects/079c6885-ad49-4141-9977-6988ef97f2e2?

2021/04/12 12:47:14 [DEBUG] GET https://api.equinix.com/metal/v1/ssh-keys/7fed9543-61c8-4bb3-81c9-bdd29512e96e?

2021/04/12 12:47:14 [DEBUG] GET https://api.equinix.com/metal/v1/projects/079c6885-ad49-4141-9977-6988ef97f2e2/bgp-config?

2021/04/12 12:47:16 [DEBUG] POST https://api.equinix.com/metal/v1/projects/079c6885-ad49-4141-9977-6988ef97f2e2/spot-market-requests?include=devices,project,plan

2021/04/12 12:47:17 [DEBUG] GET https://api.equinix.com/metal/v1/spot-market-requests/1ba255af-2e97-4a62-8cdc-bd018c8883d9?include=project%2Cdevices%2Cfacilities

Outputs:

projectName: "yetiops-blog"

Resources:

+ 4 created

Duration: 10s

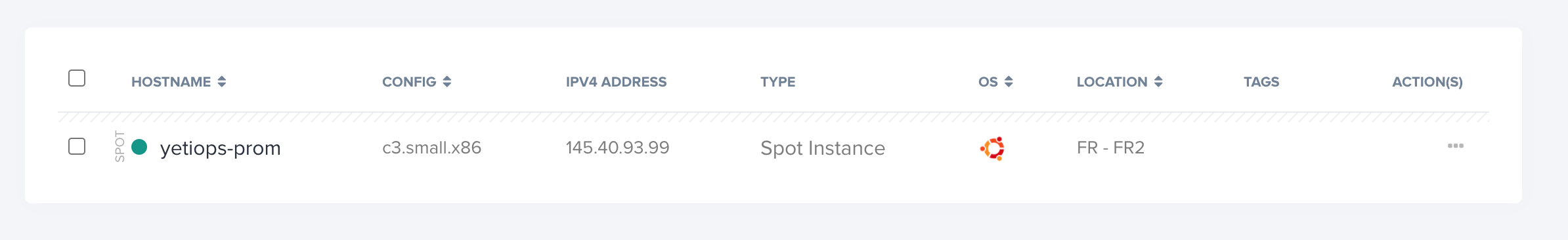

We can also see this in the Equinix Metal console: -

Let’s log in and see if it works as expected: -

$ ssh -i ~/.ssh/id_rsa [email protected]

Welcome to Ubuntu 20.04.1 LTS (GNU/Linux 5.4.0-52-generic x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

This system has been minimized by removing packages and content that are

not required on a system that users do not log into.

To restore this content, you can run the 'unminimize' command.

The programs included with the Ubuntu system are free software;

the exact distribution terms for each program are described in the

individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by

applicable law.

root@yetiops-prom:~#

root@yetiops-prom:~#

root@yetiops-prom:~#

root@yetiops-prom:~#

root@yetiops-prom:~# ss -tlunp

Netid State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

udp UNCONN 0 0 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=897,fd=12))

udp UNCONN 0 0 10.25.19.129:123 0.0.0.0:* users:(("ntpd",pid=1119,fd=20))

udp UNCONN 0 0 145.40.93.99:123 0.0.0.0:* users:(("ntpd",pid=1119,fd=19))

udp UNCONN 0 0 127.0.0.1:123 0.0.0.0:* users:(("ntpd",pid=1119,fd=18))

udp UNCONN 0 0 0.0.0.0:123 0.0.0.0:* users:(("ntpd",pid=1119,fd=17))

udp UNCONN 0 0 [fe80::63f:72ff:fed4:749c]%bond0:123 [::]:* users:(("ntpd",pid=1119,fd=23))

udp UNCONN 0 0 [2604:1380:4091:1400::1]:123 [::]:* users:(("ntpd",pid=1119,fd=22))

udp UNCONN 0 0 [::1]:123 [::]:* users:(("ntpd",pid=1119,fd=21))

udp UNCONN 0 0 [::]:123 [::]:* users:(("ntpd",pid=1119,fd=16))

tcp LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=897,fd=13))

tcp LISTEN 0 128 0.0.0.0:22 0.0.0.0:* users:(("sshd",pid=1197,fd=3))

tcp LISTEN 0 4096 *:9100 *:* users:(("prometheus-node",pid=1753,fd=3))

tcp LISTEN 0 128 [::]:22 [::]:* users:(("sshd",pid=1197,fd=4))

root@yetiops-prom:~# curl localhost:9100/metrics | grep -i yeti

node_uname_info{domainname="(none)",machine="x86_64",nodename="yetiops-prom",release="5.4.0-52-generic",sysname="Linux",version="#57-Ubuntu SMP Thu Oct 15 10:57:00 UTC 2020"} 1

root@yetiops-prom:~# cat /proc/cpuinfo | grep -i processor

processor : 0

processor : 1

processor : 2

processor : 3

processor : 4

processor : 5

processor : 6

processor : 7

processor : 8

processor : 9

processor : 10

processor : 11

processor : 12

processor : 13

processor : 14

processor : 15

root@yetiops-prom:~# free -mh

total used free shared buff/cache available

Mem: 31Gi 598Mi 30Gi 17Mi 256Mi 30Gi

Swap: 1.9Gi 0B 1.9Gi

The joy of bare metal is that we now have 16 cores to play with and 32Gi of memory, and we don’t have to share it with anyone else!

All the code

Below is the full main.go file with our code: -

package main

import (

"fmt"

"io/ioutil"

"os/user"

"github.com/pulumi/pulumi-cloudinit/sdk/go/cloudinit"

metal "github.com/pulumi/pulumi-equinix-metal/sdk/go/equinix"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi/config"

)

func main() {

pulumi.Run(func(ctx *pulumi.Context) error {

conf := config.New(ctx, "")

commonName := conf.Require("common_name")

cloudInitPath := conf.Require("cloud_init_path")

cloudInitScript, err := ioutil.ReadFile(cloudInitPath)

if err != nil {

return err

}

cloudInitContents := string(cloudInitScript)

b64encEnable := false

gzipEnable := false

contentType := "text/cloud-config"

fileName := "init.cfg"

cloudconfig, err := cloudinit.LookupConfig(ctx, &cloudinit.LookupConfigArgs{

Base64Encode: &b64encEnable,

Gzip: &gzipEnable,

Parts: []cloudinit.GetConfigPart{

cloudinit.GetConfigPart{

Content: cloudInitContents,

ContentType: &contentType,

Filename: &fileName,

},

},

}, nil)

if err != nil {

return err

}

user, err := user.Current()

if err != nil {

return err

}

sshkey_path := fmt.Sprintf("%v/.ssh/id_rsa.pub", user.HomeDir)

sshkey_file, err := ioutil.ReadFile(sshkey_path)

if err != nil {

return err

}

sshkey_contents := string(sshkey_file)

sshkey, err := metal.NewSshKey(ctx, commonName, &metal.SshKeyArgs{

Name: pulumi.String(commonName),

PublicKey: pulumi.String(sshkey_contents),

})

if err != nil {

return err

}

project, err := metal.NewProject(ctx, "my-project", &metal.ProjectArgs{

Name: pulumi.String("yetiops-blog"),

})

if err != nil {

return err

}

project_id := pulumi.Sprintf("%v", project.ID())

_, err = metal.NewSpotMarketRequest(ctx, commonName, &metal.SpotMarketRequestArgs{

// ProjectId: project_id.ApplyString(func(id string) string {

// return id

// }),

ProjectId: project_id,

MaxBidPrice: pulumi.Float64(0.20),

Facilities: pulumi.StringArray{

pulumi.String("fr2"),

},

DevicesMin: pulumi.Int(1),

DevicesMax: pulumi.Int(1),

InstanceParameters: metal.SpotMarketRequestInstanceParametersArgs{

Hostname: pulumi.String(commonName),

BillingCycle: pulumi.String("hourly"),

OperatingSystem: pulumi.String("ubuntu_20_04"),

Plan: pulumi.String("c3.small.x86"),

UserSshKeys: pulumi.StringArray{

pulumi.StringOutput(sshkey.OwnerId),

},

Userdata: pulumi.String(cloudconfig.Rendered),

},

})

if err != nil {

return err

}

ctx.Export("projectName", project.Name)

return nil

})

}

Linode

Linode are a provider who have been around since the early 2000s, focussing primarily on Linux instances and the supporting infrastructure for them. This includes LoadBalancers (NodeBalancers in Linode terminology), Firewalls, Object Storage and more.

They also offer the Linode Kubernetes Engine which provides a managed Kubernetes cluster that can be sized to your requirements. To see one in action, you can watch/listen to the Changelog Podcast performing updates/inducing some chaos engineering on their cluster: -

The full podcast that this video is from is available here.

So far we have shown multiple providers (AWS, Digital Ocean, Hetzner, Equinix Metal) but all building some form of machine (virtual or dedicated) running the Prometheus node_exporter. This time we are going to build an LKE Kubernetes cluster and run a basic Deployment on it. We won’t expose the Pods publicly, instead we are more concerned with the interaction between the Linode and Kubernetes Pulumi providers.

Signing up

First, you need to sign up with Linode. Go to their Sign Up page and provide your details (name, email, billing information). You’ll then be presented with the Linode console: -

Generate an API key

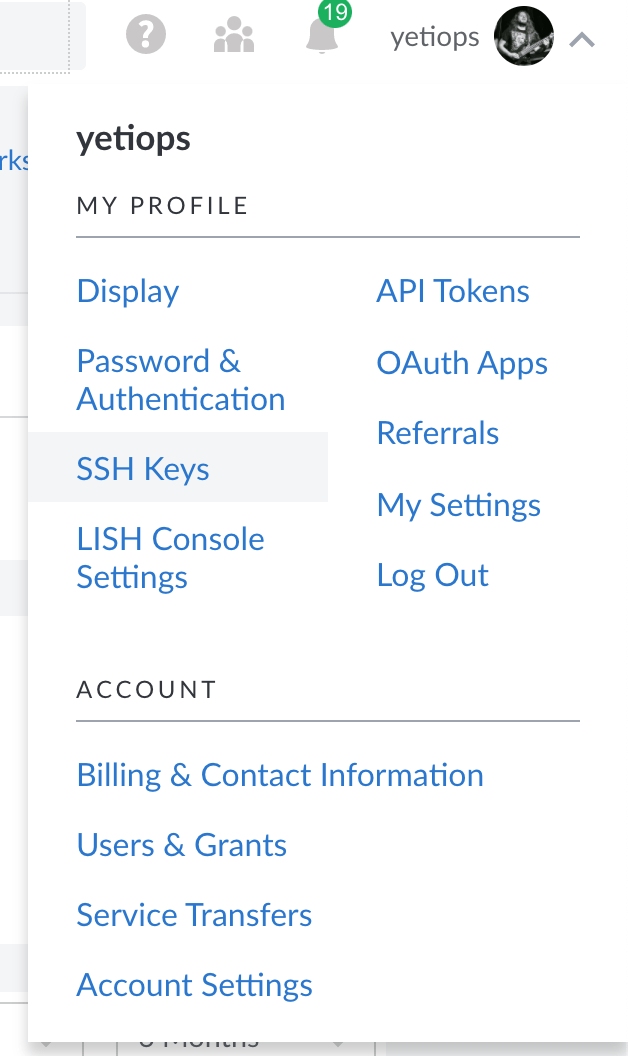

To create a Linode API key, go to the top right of the interface, click on the dropdown over your account and choose API Tokens: -

You can then create a Personal Access Token for use with Pulumi.

Create the stack and project

You can now create your project using pulumi new linode-go --name lke-go. Either set the API token as part of your configuration with pulumi config set linode:token XXXXXXXXXXXXXX --secret or export it as export LINODE_TOKEN=XXXXXXXXXXXXXX.

As with all the other providers, we’ll also add pulumi config set common_name yetiops-prom for use across our resources.

The code

As noted, rather than creating a VPS, this time we’re going to: -

- Create an LKE Kubernetes Cluster, running Kubernetes v1.20

- This will have 3 nodes of size g6-standard-1 (2GB memory, 1 vCPU)

- We will also add a generic NGINX deployment with a single replica, using the kubeconfig exported from the LKE cluster

First, we define our configuration values: -

conf := config.New(ctx, "")

commonName := conf.Require("common_name")

This is the same as the other providers, except we don’t provide any cloudinit or similar because Linode takes care of the Kubernetes-specific installation/application settings.

Next, we build the LKE cluster: -

cluster, err := linode.NewLkeCluster(ctx, commonName, &linode.LkeClusterArgs{

K8sVersion: pulumi.String("1.20"),

Label: pulumi.String(commonName),

Pools: linode.LkeClusterPoolArray{

&linode.LkeClusterPoolArgs{

Count: pulumi.Int(3),

Type: pulumi.String("g6-standard-1"),

},

},

Region: pulumi.String("eu-west"),

Tags: pulumi.StringArray{

pulumi.String("staging"),

pulumi.String("lke-yetiops"),

},

})

if err != nil {

return err

}

One of the benefits of using a managed Kubernetes cluster is that there is very little configuration required to set a cluster up. In addition we add a couple of tags, specify that the cluster will be built in the eu-west region, and then build it.

The next section takes the generated kubeconfig from the cluster, and makes it available for use with the Pulumi Kubernetes provider: -

lke_config := cluster.Kubeconfig.ApplyString(func(kconf string) string {

output, _ := b64.StdEncoding.DecodeString(kconf)

return string(output)

})

kubeProvider, err := kubernetes.NewProvider(ctx, commonName, &kubernetes.ProviderArgs{

Kubeconfig: lke_config,

}, pulumi.DependsOn([]pulumi.Resource{cluster}))

if err != nil {

return err

}

There are two parts to this. The first is another Apply function. This is because the kubeconfig output from the cluster is in base64 format, which needs turning into a string for the Pulumi Kubernetes provider to use. There are other ways of generating a usable kubeconfig (a good example is here), but in this case the LKE cluster output is already in the correct format (once decoded).
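The Apply function above discards the error from the base64 decode. As a minimal, standard-library-only sketch, the decoding step on its own can surface that error instead. The helper name decodeKubeconfig is hypothetical, and the sample kubeconfig content is a placeholder rather than real cluster output.

```go
package main

import (
	"encoding/base64"
	"fmt"
)

// decodeKubeconfig is a hypothetical helper that turns a base64-encoded
// kubeconfig into its plain-text form, returning any decoding error rather
// than ignoring it.
func decodeKubeconfig(encoded string) (string, error) {
	raw, err := base64.StdEncoding.DecodeString(encoded)
	if err != nil {
		return "", fmt.Errorf("decoding kubeconfig: %w", err)
	}
	return string(raw), nil
}

func main() {
	// Encode a placeholder document so the example is self-contained.
	encoded := base64.StdEncoding.EncodeToString([]byte("apiVersion: v1\nkind: Config\n"))

	decoded, err := decodeKubeconfig(encoded)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(decoded)
}
```

If the value ever arrives in a different encoding, a decode failure is then reported at this step rather than surfacing later as an empty kubeconfig.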

After that, we create a Kubernetes provider, which depends upon the cluster being created. The DependsOn option is similar to Terraform’s depends_on, in that Pulumi will not try to create this resource until the resource it depends upon has been created.

For every resource we want to add to the Kubernetes cluster, we’ll reference this provider so that it uses the correct configuration. Without this, Pulumi follows the defaults that kubectl and similar tools use: either the configuration referenced in the $KUBECONFIG environment variable, or the ~/.kube/config file, if one exists.
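The default lookup that kubectl-style tools perform can be sketched roughly as follows. This is a simplification and an assumption about the behaviour rather than the actual implementation: the KUBECONFIG environment variable may hold a list of paths that kubectl merges, which this sketch does not handle, and the helper name defaultKubeconfigPath is hypothetical.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
)

// defaultKubeconfigPath is a hypothetical helper: it prefers the value of the
// KUBECONFIG environment variable and falls back to ~/.kube/config.
func defaultKubeconfigPath(kubeconfigEnv, homeDir string) string {
	if kubeconfigEnv != "" {
		return kubeconfigEnv
	}
	return filepath.Join(homeDir, ".kube", "config")
}

func main() {
	home, err := os.UserHomeDir()
	if err != nil {
		fmt.Println("could not determine home directory:", err)
		return
	}
	fmt.Println(defaultKubeconfigPath(os.Getenv("KUBECONFIG"), home))
}
```

Passing an explicit provider to each resource avoids depending on whichever of these happens to be present on the machine running Pulumi.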

Next, we create an app label map which can be used to add additional labels to anything we create: -

appLabels := pulumi.StringMap{

"app": pulumi.String("nginx"),

}

Any labels added to this will be present in all of your other resources that reference it.

Next, we create a namespace: -

namespace, err := corev1.NewNamespace(ctx, "app-ns", &corev1.NamespaceArgs{

Metadata: &metav1.ObjectMetaArgs{

Name: pulumi.String(commonName),

},

}, pulumi.Provider(kubeProvider))

if err != nil {

return err

}

As you can see, we have attached our provider here, which means that it will use the provider specific to our LKE cluster.

Finally, we create our NGINX deployment, using the provider, namespace and labels we created, and deploy it into our cluster: -

deployment, err := appsv1.NewDeployment(ctx, "app-dep", &appsv1.DeploymentArgs{

Metadata: &metav1.ObjectMetaArgs{

Namespace: namespace.Metadata.Elem().Name(),

},

Spec: appsv1.DeploymentSpecArgs{

Selector: &metav1.LabelSelectorArgs{

MatchLabels: appLabels,

},

Replicas: pulumi.Int(1),

Template: &corev1.PodTemplateSpecArgs{

Metadata: &metav1.ObjectMetaArgs{

Labels: appLabels,

},

Spec: &corev1.PodSpecArgs{

Containers: corev1.ContainerArray{

corev1.ContainerArgs{

Name: pulumi.String("nginx"),

Image: pulumi.String("nginx"),

}},

},

},

},

}, pulumi.Provider(kubeProvider))

If you aren’t familiar with Kubernetes, this will run a single NGINX container in our cluster. The Deployment will manage rollouts, and if the container dies at any point it will be replaced with another running NGINX container. We specify how many containers we want to run using Replicas.

We also reference our appLabels and namespace, meaning the application will be labelled correctly and will also be deployed into the namespace we created.
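Sharing one appLabels value between the selector and the Pod template matters because a Deployment’s selector has to match the labels on the Pods it creates. The matching rule for MatchLabels (every key/value pair in the selector must be present on the Pod) can be sketched with plain Go maps. The function name selectorMatches is hypothetical and this is an illustration of the rule, not Kubernetes’ own code.

```go
package main

import "fmt"

// selectorMatches reports whether every key/value pair in selector is also
// present in labels, which is how a MatchLabels selector is evaluated.
func selectorMatches(selector, labels map[string]string) bool {
	for key, want := range selector {
		if got, ok := labels[key]; !ok || got != want {
			return false
		}
	}
	return true
}

func main() {
	appLabels := map[string]string{"app": "nginx"}

	// Reusing the same map for both sides always matches.
	fmt.Println(selectorMatches(appLabels, appLabels))

	// Labels maintained separately can drift apart and stop matching.
	drifted := map[string]string{"app": "web"}
	fmt.Println(selectorMatches(appLabels, drifted))
}
```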

Finally, we export a couple of values: -

ctx.Export("name", deployment.Metadata.Elem().Name())

ctx.Export("kubeConfig", pulumi.Unsecret(lke_config))

The first is just the name of the deployment. The second is our kubeconfig. We need to use the pulumi.Unsecret function because by default the kubeconfig output is a secret. This means that it won’t be exposed in stdout, so it wouldn’t be seen in the logs for the process running Pulumi (e.g. a CI/CD system). In our case though, we want to output it unencrypted so that we can inspect the cluster ourselves.

Running Pulumi

Now we can run Pulumi, see if we get an LKE cluster, and if our application is deployed: -

Previewing update (staging)

View Live: https://app.pulumi.com/yetiops/lke-go/staging/previews/0d68a411-ca47-49e0-a63a-e54cd1c278bd

Type Name Plan

+ pulumi:pulumi:Stack lke-go-staging create

+ ├─ linode:index:LkeCluster yetiops-prom create

+ ├─ pulumi:providers:kubernetes yetiops-prom create

+ ├─ kubernetes:core/v1:Namespace app-ns create

+ └─ kubernetes:apps/v1:Deployment app-dep create

Resources:

+ 5 to create

Do you want to perform this update? yes

Updating (staging)

View Live: https://app.pulumi.com/yetiops/lke-go/staging/updates/10

Type Name Status

+ pulumi:pulumi:Stack lke-go-staging created

+ ├─ linode:index:LkeCluster yetiops-prom created

+ ├─ pulumi:providers:kubernetes yetiops-prom created

+ ├─ kubernetes:core/v1:Namespace app-ns created

+ └─ kubernetes:apps/v1:Deployment app-dep created

Outputs:

kubeConfig: "### KUBECONFIG OUTPUT ###"

name : "app-dep-nvxmwgxw"

Resources:

+ 5 created

Duration: 4m4s

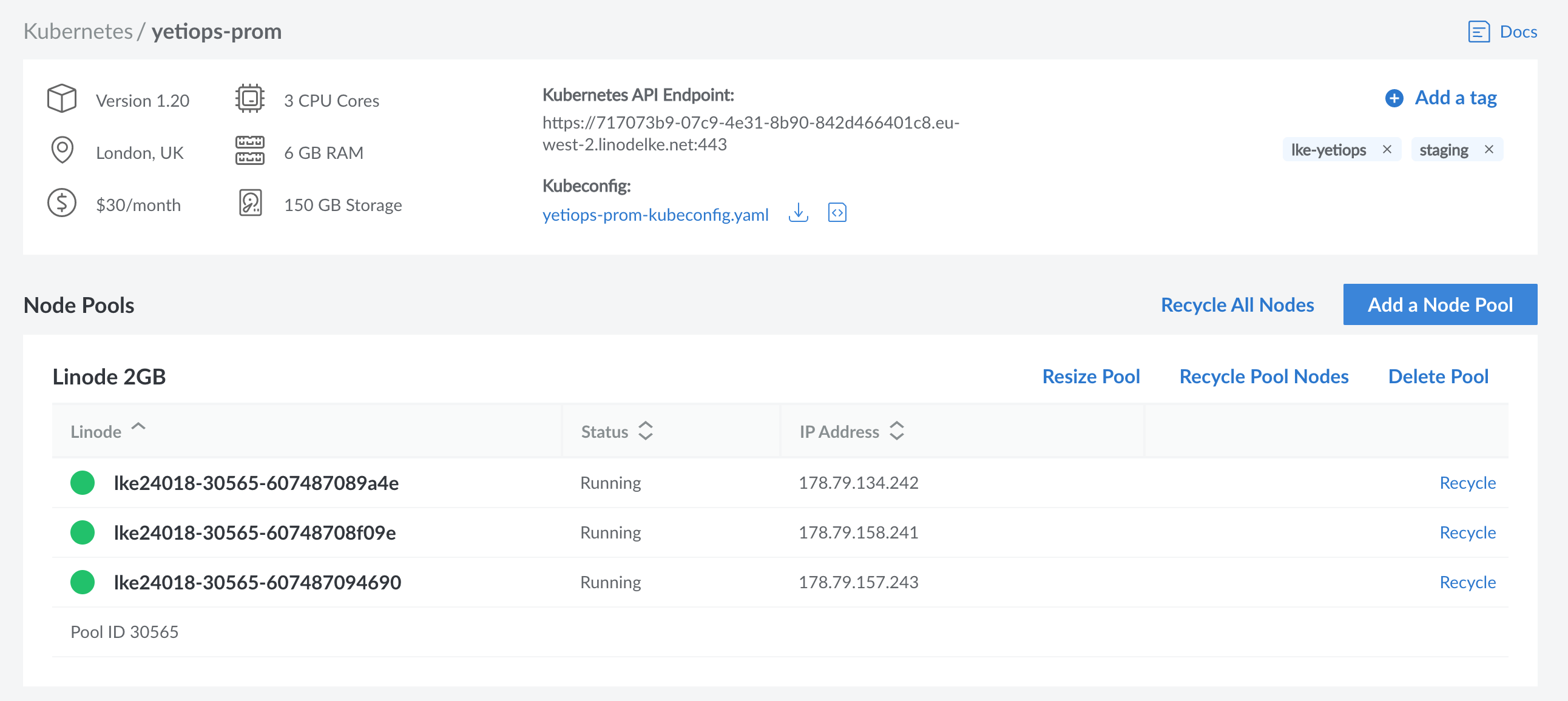

We can see that our cluster was created in the Linode console: -

If we take the output of kubeConfig and put it into our ~/.kube/config file, we can also check the cluster itself: -

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

lke24018-30565-607487089a4e Ready <none> 3m12s v1.20.5

lke24018-30565-60748708f09e Ready <none> 3m11s v1.20.5

lke24018-30565-607487094690 Ready <none> 3m9s v1.20.5

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

lke24018-30565-607487089a4e Ready <none> 3m27s v1.20.5 192.168.147.230 178.79.134.242 Debian GNU/Linux 9 (stretch) 5.10.0-5-cloud-amd64 docker://19.3.15

lke24018-30565-60748708f09e Ready <none> 3m26s v1.20.5 192.168.149.192 178.79.158.241 Debian GNU/Linux 9 (stretch) 5.10.0-5-cloud-amd64 docker://19.3.15

lke24018-30565-607487094690 Ready <none> 3m24s v1.20.5 192.168.164.66 178.79.157.243 Debian GNU/Linux 9 (stretch) 5.10.0-5-cloud-amd64 docker://19.3.15

$ kubectl get deployments -A

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

kube-system calico-kube-controllers 1/1 1 1 6m15s

kube-system coredns 2/2 2 2 6m13s

yetiops-prom app-dep-nvxmwgxw 1/1 1 1 5m43s

$ kubectl describe deployments app-dep-nvxmwgxw -n yetiops-prom

Name: app-dep-nvxmwgxw

Namespace: yetiops-prom

CreationTimestamp: Mon, 12 Apr 2021 18:45:54 +0100

Labels: app.kubernetes.io/managed-by=pulumi

Annotations: deployment.kubernetes.io/revision: 1

pulumi.com/autonamed: true

Selector: app=nginx

Replicas: 1 desired | 1 updated | 1 total | 1 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: app-dep-nvxmwgxw-6799fc88d8 (1/1 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 6m9s deployment-controller Scaled up replica set app-dep-nvxmwgxw-6799fc88d8 to 1

$ kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-b9575d85c-m5zvm 1/1 Running 1 5m19s

kube-system calico-node-7mrgk 1/1 Running 0 3m49s

kube-system calico-node-r58mj 1/1 Running 0 3m51s

kube-system calico-node-skxz5 1/1 Running 0 3m52s

kube-system coredns-5f8bfcb47-9xqmb 1/1 Running 0 5m19s

kube-system coredns-5f8bfcb47-sgrdp 1/1 Running 0 5m19s

kube-system csi-linode-controller-0 4/4 Running 0 5m19s

kube-system csi-linode-node-8wxk4 2/2 Running 0 3m8s

kube-system csi-linode-node-jmbtr 2/2 Running 0 3m10s

kube-system csi-linode-node-p2l7v 2/2 Running 0 2m58s

kube-system kube-proxy-5v8v8 1/1 Running 0 3m51s

kube-system kube-proxy-nbwrx 1/1 Running 0 3m49s

kube-system kube-proxy-q547g 1/1 Running 0 3m52s

yetiops-prom app-dep-nvxmwgxw-6799fc88d8-n2x2w 1/1 Running 0 5m3s

Let’s exec into the container as well: -

$ kubectl exec -n yetiops-prom -it app-dep-nvxmwgxw-6799fc88d8-n2x2w -- /bin/bash

root@app-dep-nvxmwgxw-6799fc88d8-n2x2w:/# cat /etc/os-release

PRETTY_NAME="Debian GNU/Linux 10 (buster)"

NAME="Debian GNU/Linux"

VERSION_ID="10"

VERSION="10 (buster)"

VERSION_CODENAME=buster

ID=debian

HOME_URL="https://www.debian.org/"

SUPPORT_URL="https://www.debian.org/support"

BUG_REPORT_URL="https://bugs.debian.org/"

root@app-dep-nvxmwgxw-6799fc88d8-n2x2w:/# curl localhost:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

root@app-dep-nvxmwgxw-6799fc88d8-n2x2w:/# curl -k https://kubernetes.default.svc:443

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {

},

"status": "Failure",

"message": "forbidden: User \"system:anonymous\" cannot get path \"/\"",

"reason": "Forbidden",

"details": {

},

"code": 403

}

All looking good!

All the code

The following is the full main.go file with all of our code: -

package main

import (

b64 "encoding/base64"

"github.com/pulumi/pulumi-kubernetes/sdk/v2/go/kubernetes"

appsv1 "github.com/pulumi/pulumi-kubernetes/sdk/v2/go/kubernetes/apps/v1"

corev1 "github.com/pulumi/pulumi-kubernetes/sdk/v2/go/kubernetes/core/v1"

metav1 "github.com/pulumi/pulumi-kubernetes/sdk/v2/go/kubernetes/meta/v1"

"github.com/pulumi/pulumi-linode/sdk/v2/go/linode"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi"

"github.com/pulumi/pulumi/sdk/v2/go/pulumi/config"

)

func main() {

pulumi.Run(func(ctx *pulumi.Context) error {

conf := config.New(ctx, "")

commonName := conf.Require("common_name")

cluster, err := linode.NewLkeCluster(ctx, commonName, &linode.LkeClusterArgs{

K8sVersion: pulumi.String("1.20"),

Label: pulumi.String(commonName),

Pools: linode.LkeClusterPoolArray{

&linode.LkeClusterPoolArgs{

Count: pulumi.Int(3),

Type: pulumi.String("g6-standard-1"),

},

},

Region: pulumi.String("eu-west"),

Tags: pulumi.StringArray{

pulumi.String("staging"),

pulumi.String("lke-yetiops"),

},

})

if err != nil {

return err

}

lke_config := cluster.Kubeconfig.ApplyString(func(kconf string) string {

output, _ := b64.StdEncoding.DecodeString(kconf)

return string(output)

})

kubeProvider, err := kubernetes.NewProvider(ctx, commonName, &kubernetes.ProviderArgs{

Kubeconfig: lke_config,

}, pulumi.DependsOn([]pulumi.Resource{cluster}))

if err != nil {

return err

}

appLabels := pulumi.StringMap{

"app": pulumi.String("nginx"),

}

namespace, err := corev1.NewNamespace(ctx, "app-ns", &corev1.NamespaceArgs{

Metadata: &metav1.ObjectMetaArgs{

Name: pulumi.String(commonName),

},

}, pulumi.Provider(kubeProvider))

if err != nil {

return err

}

deployment, err := appsv1.NewDeployment(ctx, "app-dep", &appsv1.DeploymentArgs{

Metadata: &metav1.ObjectMetaArgs{

Namespace: namespace.Metadata.Elem().Name(),

},

Spec: appsv1.DeploymentSpecArgs{

Selector: &metav1.LabelSelectorArgs{

MatchLabels: appLabels,

},

Replicas: pulumi.Int(1),

Template: &corev1.PodTemplateSpecArgs{

Metadata: &metav1.ObjectMetaArgs{

Labels: appLabels,

},

Spec: &corev1.PodSpecArgs{

Containers: corev1.ContainerArray{

corev1.ContainerArgs{

Name: pulumi.String("nginx"),

Image: pulumi.String("nginx"),

}},

},

},

},

}, pulumi.Provider(kubeProvider))

if err != nil {

return err

}

ctx.Export("name", deployment.Metadata.Elem().Name())

ctx.Export("kubeConfig", pulumi.Unsecret(lke_config))

return nil

})

}

Summary

In the previous post we discovered that Pulumi is a very powerful Infrastructure-as-Code tool. From being able to choose from any one of five (at the time of writing) languages to define your infrastructure, to defining your own functions as part of the code, Pulumi offers a great deal of flexibility when creating and codifying your infrastructure.

Now we can also see that it isn’t limited to just the big few cloud providers. Even with some of the smaller or more esoteric providers, there is still a way to define your infrastructure with Pulumi.

What this also means is that if you want to use another provider for cost reasons, for a different approach (e.g. Equinix Metal with their bare metal offering) or for other reasons, you don’t have to throw out all of your tooling to use it. Using tools like Pulumi and Terraform offers a migration path without a complete refactor.


2021-04-12 20:50 +0000