
DNS Anycast: Using BGP for DNS High-Availability

DNS has a number of mechanisms for redundancy and high availability. More often than not, clients will have a primary and secondary nameserver to talk to. However, if the primary nameserver fails for whatever reason, then queries to the primary usually need to time out before the client attempts queries to the secondary.

Also the speed of general web browsing can often be dictated by how long it takes to receive a valid DNS response to the query. If you are going to multiple sites one after the other, then you are likely to need to wait briefly while DNS does its thing.

To get around this, there is a mechanism known as Anycast. This allows multiple servers to use the same IP, and then routing takes care of which server to go to. This has a couple of notable benefits: -

- Requests to an Anycast IP are not dependent on the availability of a single server

- Requests can be forwarded to the “closest” server with the Anycast IP

The term “closest” means shortest in terms of routing. You might find that the “closest” in terms of how a packet is routed is not physically the closest server with said IP.
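To make “closest” a bit more concrete, here is a minimal Python sketch that picks an Anycast instance by a routing metric rather than by physical distance. This is only an illustration, not how a real router is implemented; the site names, hop counts and distances are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    name: str          # hypothetical site name
    routing_hops: int  # stand-in for the routing metric (e.g. AS-path length)
    distance_km: int   # physical distance from the client (not used for selection)
    up: bool = True    # whether this site is currently advertising the Anycast IP


def closest(instances: List[Instance]) -> Instance:
    """Return the reachable instance with the lowest routing metric."""
    reachable = [i for i in instances if i.up]
    if not reachable:
        raise LookupError("no instance is advertising the Anycast IP")
    return min(reachable, key=lambda i: i.routing_hops)


sites = [
    Instance("london", routing_hops=4, distance_km=50),
    Instance("frankfurt", routing_hops=2, distance_km=650),
]

print(closest(sites).name)  # frankfurt: fewer hops, despite being further away
sites[1].up = False         # frankfurt stops advertising the route
print(closest(sites).name)  # london
```

The second call also shows the first benefit from the list above: when one instance disappears, requests simply go to the next best one.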

Typically though, providers who serve DNS requests (e.g. Google’s 8.8.8.8, CloudFlare’s 1.1.1.1) will have enough presence internationally to place DNS servers close to the users.

BGP

The routing protocol most often used for Anycast (and for routing on the Internet generally) is the Border Gateway Protocol (or BGP). For those who do not know, a routing protocol is used to dynamically advertise and receive routes between neighbouring devices. BGP is one such protocol.

I won’t go into an in-depth discussion about BGP, but if you would like to know more about it, I would refer you to the Beginner’s Guide to Understanding BGP.

Anycast IP?

The Wikipedia definition of Anycast is as such: -

Anycast is a network addressing and routing methodology in which a single destination address has multiple routing paths to two or more endpoint destinations.

Routers will select the desired path on the basis of number of hops, distance, lowest cost, latency measurements or based on the least congested route.

An Anycast IP is no different from any other IP address. They are not allocated from a specific range like multicast (224.0.0.0/4).

What makes an IP anycast is it being configured on multiple servers and using a routing protocol to advertise it. Technically you could also do this with static routes (rather than a routing protocol), but I wouldn’t advise it!
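As a quick illustration of the point about address ranges, the Python sketch below uses the standard `ipaddress` module to show that well-known Anycast DNS addresses (and the one used later in this lab) sit outside the multicast range. The helper name `describe` is my own.

```python
import ipaddress

MULTICAST = ipaddress.ip_network("224.0.0.0/4")


def describe(ip: str) -> str:
    """Say whether an address falls inside the multicast range."""
    addr = ipaddress.ip_address(ip)
    return "multicast range" if addr in MULTICAST else "outside the multicast range"


for ip in ("224.0.0.5", "8.8.8.8", "1.1.1.1", "169.254.0.1"):
    print(ip, "->", describe(ip))
```

Nothing in the address itself marks 8.8.8.8 or 1.1.1.1 as Anycast; that comes purely from how the address is advertised.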

How does it work?

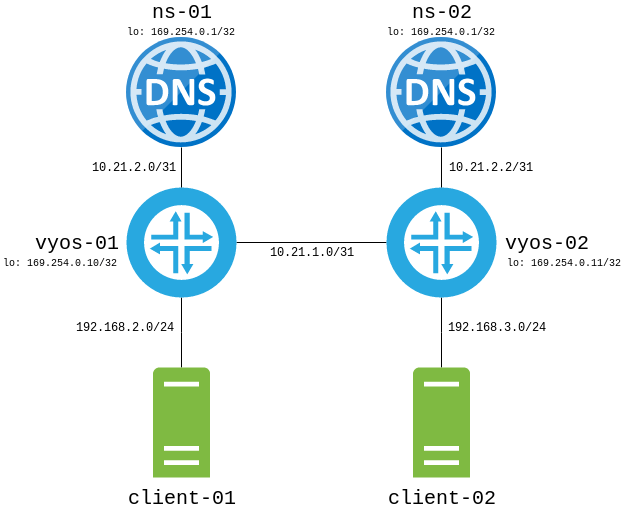

To demonstrate Anycast, I’m going to go through a lab with: -

- Two nameservers, one running BIND9, the other running Unbound

- Two client machines, configured to use the Anycast IP for DNS requests

- Two VyOS routers acting as a gateway to the client machines, and BGP peers to the nameservers

One of the main points to note is that to provide Anycast services, you need to run a routing protocol on the nameservers directly, not just on the routers. Without this, you are reliant on BGP timeouts or interfaces going down to see if a server has gone down.

The diagram below shows the setup: -

Nameserver Preparation

I chose to use BIND9 and Unbound, partly to show that the DNS software running doesn’t matter, but also because I had never used Unbound before. Both servers are running Debian Buster.

Install DNS Software

To install BIND9 in Debian, run sudo apt-get install bind9. After this is done, BIND9 should be running already: -

$ sudo systemctl status bind9

* bind9.service - BIND Domain Name Server

Loaded: loaded (/lib/systemd/system/bind9.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2019-12-02 10:34:10 GMT; 1 day 2h ago

Docs: man:named(8)

Process: 539 ExecStart=/usr/sbin/named $OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 543 (named)

Tasks: 4 (limit: 453)

Memory: 20.2M

CGroup: /system.slice/bind9.service

`-543 /usr/sbin/named -u bind

Dec 02 10:34:34 ns-01 named[543]: configuring command channel from '/etc/bind/rndc.key'

Dec 02 10:34:34 ns-01 named[543]: reloading configuration succeeded

Dec 02 10:34:34 ns-01 named[543]: scheduled loading new zones

Dec 02 10:34:34 ns-01 named[543]: any newly configured zones are now loaded

Dec 02 10:34:34 ns-01 named[543]: running

Dec 02 10:34:34 ns-01 named[543]: managed-keys-zone: Key 20326 for zone . acceptance timer complete: key now trusted

Dec 02 10:34:34 ns-01 named[543]: resolver priming query complete

Dec 03 10:34:34 ns-01 named[543]: _default: sending trust-anchor-telemetry query '_ta-4f66/NULL'

Dec 03 10:34:34 ns-01 named[543]: resolver priming query complete

Dec 03 10:34:34 ns-01 named[543]: managed-keys-zone: Key 20326 for zone . acceptance timer complete: key now trusted

I also tend to install dnsutils to give access to dig and other useful tools.

I have configured the following options for BIND, to ensure it responds to DNS requests for hosts not on its local subnet. This is configured in /etc/bind/named.conf.options: -

acl goodclients {

192.168.0.0/16;

localhost;

};

options {

directory "/var/cache/bind";

allow-query { goodclients; };

forwarders {

9.9.9.9;

};

dnssec-validation auto;

listen-on { any; };

listen-on-v6 { any; };

};
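To sanity-check which clients the goodclients ACL would admit, here is a rough Python sketch of the same match. It is a simplification: BIND’s built-in localhost ACL matches the server’s own addresses, whereas I approximate it with 127.0.0.0/8. The function name `query_allowed` is hypothetical.

```python
import ipaddress

# Mirrors the "goodclients" ACL. 127.0.0.0/8 is a simplified stand-in for
# BIND's built-in "localhost" ACL (an assumption made for this sketch).
GOODCLIENTS = [
    ipaddress.ip_network("192.168.0.0/16"),
    ipaddress.ip_network("127.0.0.0/8"),
]


def query_allowed(client_ip: str) -> bool:
    """Return True if the client address matches any entry in the ACL."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in GOODCLIENTS)


print(query_allowed("192.168.2.10"))  # True  (a 192.168.x.x client)
print(query_allowed("127.0.0.1"))     # True  (local test with dig)
print(query_allowed("10.21.2.0"))     # False (not in the ACL)
```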

To check if this works, run dig yetiops.net @127.0.0.1: -

$ dig yetiops.net @127.0.0.1

; <<>> DiG 9.11.5-P4-5.1-Debian <<>> yetiops.net @127.0.0.1

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 61410

;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 13, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 91c9c41a84a8b5791d1c12705de658e7efbd7e6b847735de (good)

;; QUESTION SECTION:

;yetiops.net. IN A

;; ANSWER SECTION:

yetiops.net. 300 IN A 104.31.77.84

yetiops.net. 300 IN A 104.31.76.84

;; AUTHORITY SECTION:

. 25648 IN NS a.root-servers.net.

. 25648 IN NS c.root-servers.net.

. 25648 IN NS i.root-servers.net.

. 25648 IN NS d.root-servers.net.

. 25648 IN NS h.root-servers.net.

. 25648 IN NS f.root-servers.net.

. 25648 IN NS g.root-servers.net.

. 25648 IN NS l.root-servers.net.

. 25648 IN NS b.root-servers.net.

. 25648 IN NS m.root-servers.net.

. 25648 IN NS k.root-servers.net.

. 25648 IN NS j.root-servers.net.

. 25648 IN NS e.root-servers.net.

;; Query time: 95 msec

;; SERVER: 127.0.0.1#53(127.0.0.1)

;; WHEN: Tue Dec 03 12:45:27 GMT 2019

;; MSG SIZE rcvd: 308

To install Unbound instead, run sudo apt-get install unbound. Again, it should start straight away once installed: -

$ sudo systemctl status unbound

* unbound.service - Unbound DNS server

Loaded: loaded (/lib/systemd/system/unbound.service; enabled; vendor preset: enabled)

Active: active (running) since Tue 2019-12-03 12:47:48 GMT; 1s ago

Docs: man:unbound(8)

Process: 4580 ExecStartPre=/usr/lib/unbound/package-helper chroot_setup (code=exited, status=0/SUCCESS)

Process: 4583 ExecStartPre=/usr/lib/unbound/package-helper root_trust_anchor_update (code=exited, status=0/SUCCESS)

Main PID: 4587 (unbound)

Tasks: 1 (limit: 453)

Memory: 6.4M

CGroup: /system.slice/unbound.service

`-4587 /usr/sbin/unbound -d

Dec 03 12:47:48 ns-02 systemd[1]: Starting Unbound DNS server...

Dec 03 12:47:48 ns-02 package-helper[4583]: /var/lib/unbound/root.key has content

Dec 03 12:47:48 ns-02 package-helper[4583]: success: the anchor is ok

Dec 03 12:47:48 ns-02 unbound[4587]: [4587:0] notice: init module 0: subnet

Dec 03 12:47:48 ns-02 unbound[4587]: [4587:0] notice: init module 1: validator

Dec 03 12:47:48 ns-02 unbound[4587]: [4587:0] notice: init module 2: iterator

Dec 03 12:47:48 ns-02 systemd[1]: Started Unbound DNS server.

Dec 03 12:47:48 ns-02 unbound[4587]: [4587:0] info: start of service (unbound 1.9.0).

The configuration for Unbound, using multiple forwarders, looks like the below: -

include: "/etc/unbound/unbound.conf.d/*.conf"

server:

access-control: 10.0.0.0/8 allow

access-control: 127.0.0.0/8 allow

access-control: 192.168.0.0/16 allow

aggressive-nsec: yes

cache-max-ttl: 14400

cache-min-ttl: 1200

hide-identity: yes

hide-version: yes

interface: 169.254.0.1

prefetch: yes

rrset-roundrobin: yes

use-caps-for-id: yes

verbosity: 1

forward-zone:

name: "."

forward-addr: 1.0.0.1@53#one.one.one.one

forward-addr: 1.1.1.1@53#one.one.one.one

forward-addr: 8.8.4.4@53#dns.google

forward-addr: 8.8.8.8@53#dns.google

forward-addr: 9.9.9.9@53#dns.quad9.net

forward-addr: 149.112.112.112@53#dns.quad9.net

This configuration was taken from this Unbound DNS Tutorial.

Again, testing should give a similar result: -

$ dig yetiops.net @169.254.0.1

; <<>> DiG 9.11.5-P4-5.1-Debian <<>> yetiops.net @169.254.0.1

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 52738

;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;yetiops.net. IN A

;; ANSWER SECTION:

yetiops.net. 1200 IN A 104.31.77.84

yetiops.net. 1200 IN A 104.31.76.84

;; Query time: 154 msec

;; SERVER: 169.254.0.1#53(169.254.0.1)

;; WHEN: Tue Dec 03 12:51:43 GMT 2019

;; MSG SIZE rcvd: 72

The reason for doing the tests to 169.254.0.1 is that Unbound appears to respond on the physical interface IP, rather than the interface the query was received upon. I shall do a follow up on Unbound when I have used it more, but for now this serves the purpose that we need.
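One detail in the Unbound output is worth a note: the answer TTL is 1200, whereas BIND returned 300 for the same record. This is most likely the effect of cache-min-ttl: 1200 in the Unbound configuration, which raises short TTLs to a floor (with cache-max-ttl acting as a ceiling). A minimal Python sketch of that clamping, which is my own illustration rather than Unbound’s code:

```python
def clamp_ttl(ttl: int, cache_min: int = 1200, cache_max: int = 14400) -> int:
    """Clamp a record's TTL between the configured minimum and maximum."""
    return max(cache_min, min(ttl, cache_max))


print(clamp_ttl(300))    # 1200  (raised to cache-min-ttl)
print(clamp_ttl(3600))   # 3600  (already within bounds)
print(clamp_ttl(86400))  # 14400 (capped at cache-max-ttl)
```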

Network interface configuration

The network interface configuration on Debian will require a “loopback” interface. Rather than applying the Anycast IP directly to a physical interface, it is applied to a logical interface instead (the loopback).

This has benefits: you can use multiple physical interfaces as links to multiple routers while advertising the same Anycast IP (rather than being tied to a physical interface). It also means that you only need a host route (i.e. a /32 IP address), which cuts down on your IP address usage. If you are using private address space, this probably isn’t much of a concern, but public IPv4 addresses are scarce (IPv6 is another matter entirely, but most clients still talk IPv4).

To apply this configuration on a Debian machine, you will need to add it into /etc/network/interfaces like so: -

## The loopback network interface

auto lo

iface lo inet loopback

## The anycast IP

auto lo:1

iface lo:1 inet static

address 169.254.0.1/32

## The physical interface

auto eth2

iface eth2 inet static

address 10.21.2.1/31

The above is the configuration on ns-01. The configuration on ns-02 will be the same, except that the IP address of eth2 would be 10.21.2.3/31.
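If /31 and /32 addressing is unfamiliar, the short Python sketch below (using the standard `ipaddress` module) works out the other end of each point-to-point link and confirms that the Anycast prefix is a single host address. The helper `peer_of` is a name I made up for this example.

```python
import ipaddress


def peer_of(interface: str) -> str:
    """Given one end of a /31 link, return the address at the other end."""
    iface = ipaddress.ip_interface(interface)
    others = [addr for addr in iface.network if addr != iface.ip]
    return str(others[0])


print(peer_of("10.21.2.1/31"))  # 10.21.2.0 (the router end of ns-01's link)
print(peer_of("10.21.2.3/31"))  # 10.21.2.2 (the router end of ns-02's link)

anycast = ipaddress.ip_network("169.254.0.1/32")
print(anycast.num_addresses)    # 1 (a host route)
```

A /31 contains exactly two addresses, so each nameserver-to-router link uses no more address space than it needs.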

FRR

FRR, or Free Range Routing, is a notable fork of Quagga that provides a number of routing protocols (and other useful network protocols, like VRRP and LDP) on Linux. It also has the vtysh shell package, which allows you to configure, verify and monitor using very Cisco-like syntax.

To install on Debian or Ubuntu (or other Debian-like distributions), go to the FRR Debian Repository page. For other systems, please see the FRR documentation.

Once installed, the only changes I make are to enable the BGP daemon, and to add my user to the frr and frrvty groups. This allows me to administer FRR without requiring escalated privileges.

To enable the BGP daemon, open up /etc/frr/daemons, find the line which says bgpd=no, and change it to bgpd=yes. After reloading (systemctl reload frr), the BGP daemon should be available: -

* frr.service - FRRouting

Loaded: loaded (/lib/systemd/system/frr.service; enabled; vendor preset: enabled)

Active: active (running) since Mon 2019-12-02 10:34:09 GMT; 1 day 2h ago

Docs: https://frrouting.readthedocs.io/en/latest/setup.html

Process: 443 ExecStart=/usr/lib/frr/frrinit.sh start (code=exited, status=0/SUCCESS)

Process: 7115 ExecReload=/usr/lib/frr/frrinit.sh reload (code=exited, status=0/SUCCESS)

Tasks: 12 (limit: 453)

Memory: 26.0M

CGroup: /system.slice/frr.service

|- 531 /usr/lib/frr/zebra -d -A 127.0.0.1 -s 90000000

|- 536 /usr/lib/frr/staticd -d -A 127.0.0.1

|-7131 /usr/lib/frr/watchfrr -d zebra bgpd staticd

`-7140 /usr/lib/frr/bgpd -d -A 127.0.0.1

Dec 03 13:02:19 ns-01 watchfrr[7131]: [EC 268435457] bgpd state -> down : initial connection attempt failed

Dec 03 13:02:19 ns-01 watchfrr[7131]: staticd state -> up : connect succeeded

Dec 03 13:02:19 ns-01 watchfrr[7131]: [EC 100663303] Forked background command [pid 7132]: /usr/lib/frr/watchfrr.sh restart bgpd

Dec 03 13:02:19 ns-01 watchfrr.sh[7138]: Cannot stop bgpd: pid file not found

Dec 03 13:02:19 ns-01 zebra[531]: client 31 says hello and bids fair to announce only vnc routes vrf=0

Dec 03 13:02:19 ns-01 zebra[531]: client 28 says hello and bids fair to announce only bgp routes vrf=0

Dec 03 13:02:19 ns-01 watchfrr[7131]: bgpd state -> up : connect succeeded

Dec 03 13:02:19 ns-01 watchfrr[7131]: all daemons up, doing startup-complete notify

Dec 03 13:02:19 ns-01 frrinit.sh[7115]: Started watchfrr.

Dec 03 13:02:20 ns-01 systemd[1]: Reloaded FRRouting.

A couple of error messages appear, but this is because BGP is not already running when the reload is performed. After this, future reloads shouldn’t show the same.

After running sudo usermod -aG frr $MY-USER and sudo usermod -aG frrvty $MY-USER, I should now be able to access the vtysh shell and start BGP: -

$ vtysh

Hello, this is FRRouting (version 7.2).

Copyright 1996-2005 Kunihiro Ishiguro, et al.

ns-01# conf t

ns-01(config)# router bgp 65001

ns-01(config-router)# exit

ns-01(config)# end

ns-01# show bgp summary

% No BGP neighbors found

No BGP neighbours were found, but none have been configured, so this is expected behaviour.

Nameserver Routing Protocol Configuration

To set up BGP between the Nameservers and the VyOS routers, you’ll need to choose some Autonomous System numbers (ASNs). The private ranges (i.e. those that anyone can use, and should never be seen on the public internet) are 64512-65534 (for 2-byte ASNs) and 4200000000-4294967294 (for 4-byte ASNs). I’m going to use both, to show that none of this is dependent on the type used.

- ns-01 - BGP ASN 64520

- ns-02 - BGP ASN 64530

- VyOS Routers - BGP ASN 4290001234
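As a small check of the ASN choices, here is a Python sketch that classifies an ASN against the two private ranges quoted above. The function name `classify_asn` is hypothetical, and the sketch ignores other reserved ASNs.

```python
def classify_asn(asn: int) -> str:
    """Classify an ASN against the private 2-byte and 4-byte ranges."""
    if 64512 <= asn <= 65534:
        return "private (2-byte)"
    if 4200000000 <= asn <= 4294967294:
        return "private (4-byte)"
    if 0 <= asn <= 4294967295:
        return "outside the private ranges"
    raise ValueError(f"{asn} is not a valid ASN")


for asn in (64520, 64530, 4290001234):
    print(asn, "->", classify_asn(asn))
```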

FRR

The following configuration will be applied via vtysh: -

ns-01

ns-01# conf t

ns-01(config)# router bgp 64520

ns-01(config-router)# neighbor 10.21.2.0 remote-as 4290001234

ns-01(config-router)# address-family ipv4 unicast

ns-01(config-router-af)# neighbor 10.21.2.0 activate

ns-01(config-router-af)# network 169.254.0.1/32

ns-01(config-router-af)# end

ns-01# wr mem

Note: this version of vtysh never writes vtysh.conf

Building Configuration...

Warning: /etc/frr/frr.conf.sav unlink failed

Integrated configuration saved to /etc/frr/frr.conf

[OK]

ns-02

ns-02# conf t

ns-02(config)# router bgp 64530

ns-02(config-router)# neighbor 10.21.2.2 remote-as 4290001234

ns-02(config-router)# address-family ipv4 unicast

ns-02(config-router-af)# neighbor 10.21.2.2 activate

ns-02(config-router-af)# network 169.254.0.1/32

ns-02(config-router-af)# end

ns-02# wr mem

Note: this version of vtysh never writes vtysh.conf

Building Configuration...

Warning: /etc/frr/frr.conf.sav unlink failed

Integrated configuration saved to /etc/frr/frr.conf

[OK]

For anyone who has configured a Cisco router, switch or similar, the syntax should be very familiar.

The main thing to notice is the network 169.254.0.1/32 statement. The same statement is configured on both Nameservers, because they are going to advertise the same IP (the Anycast IP). The network statement imports the route into BGP, and allows it to be advertised out to its peers.

VyOS BGP Configuration

VyOS configuration looks like a mixture of Juniper’s JunOS and Cisco’s IOS. It can look a little odd if you are heavily in either of the Cisco or Juniper camps, but it doesn’t take too long to get used to.

vyos-01

vyos@vyos-01:~$ configure

[edit]

vyos@vyos-01# set protocols bgp 4290001234 neighbor 10.21.2.1 remote-as 64520

[edit]

vyos@vyos-01# set protocols bgp 4290001234 neighbor 10.21.1.1 remote-as 4290001234

[edit]

vyos@vyos-01# set protocols bgp 4290001234 address-family ipv4-unicast network 192.168.2.0/24

[edit]

vyos@vyos-01# set protocols bgp 4290001234 address-family ipv4-unicast network 10.21.2.0/31

[edit]

vyos@vyos-01# commit

[edit]

vyos@vyos-01# save

Saving configuration to '/config/config.boot'...

Done

vyos-02

vyos@vyos-02:~$ configure

[edit]

vyos@vyos-02# set protocols bgp 4290001234 neighbor 10.21.2.3 remote-as 64530

[edit]

vyos@vyos-02# set protocols bgp 4290001234 neighbor 10.21.1.0 remote-as 4290001234

[edit]

vyos@vyos-02# set protocols bgp 4290001234 address-family ipv4-unicast network 192.168.3.0/24

[edit]

vyos@vyos-02# set protocols bgp 4290001234 address-family ipv4-unicast network 10.21.2.2/31

[edit]

vyos@vyos-02# commit

[edit]

vyos@vyos-02# save

Saving configuration to '/config/config.boot'...

Done

The configuration does not apply until you commit it (like JunOS and Cisco IOS-XR), and also if you do not save it, it will not be there on reboot.

The network statements are to ensure that the Nameservers know about the IP ranges of the clients.

Verification

Check Routing

After this, we should be able to see the Anycast IP appear in the routing tables of both VyOS routers: -

vyos-01

vyos@vyos-01:~$ show ip route 169.254.0.1

Routing entry for 169.254.0.1/32

Known via "bgp", distance 20, metric 0, best

Last update 00:07:47 ago

* 10.21.2.1, via eth3

vyos-02

vyos@vyos-02:~$ show ip route 169.254.0.1

Routing entry for 169.254.0.1/32

Known via "bgp", distance 20, metric 0, best

Last update 00:00:48 ago

* 10.21.2.3, via eth3

The last line on each route shows where it was received from. For vyos-01, this was received from 10.21.2.1 (the physical IP of ns-01). For vyos-02, this was received from 10.21.2.3 (the physical IP of ns-02).

This is the basis of Anycast: the same IP being advertised from multiple origins.

Test a DNS query

Testing DNS from the clients should show responses: -

client-01

$ dig www.google.com @169.254.0.1

; <<>> DiG 9.11.5-P4-5.1-Debian <<>> www.google.com @169.254.0.1

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 52511

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 13, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

; COOKIE: 53c833de2eb0124a47b5c3195de8f2646dfe1769e95f3929 (good)

;; QUESTION SECTION:

;www.google.com. IN A

;; ANSWER SECTION:

www.google.com. 167 IN A 172.217.20.100

;; AUTHORITY SECTION:

. 21700 IN NS b.root-servers.net.

. 21700 IN NS g.root-servers.net.

. 21700 IN NS i.root-servers.net.

. 21700 IN NS j.root-servers.net.

. 21700 IN NS a.root-servers.net.

. 21700 IN NS m.root-servers.net.

. 21700 IN NS f.root-servers.net.

. 21700 IN NS e.root-servers.net.

. 21700 IN NS c.root-servers.net.

. 21700 IN NS h.root-servers.net.

. 21700 IN NS d.root-servers.net.

. 21700 IN NS k.root-servers.net.

. 21700 IN NS l.root-servers.net.

;; Query time: 11 msec

;; SERVER: 169.254.0.1#53(169.254.0.1)

;; WHEN: Thu Dec 05 12:04:52 GMT 2019

;; MSG SIZE rcvd: 298

client-02

$ dig www.google.com @169.254.0.1

; <<>> DiG 9.11.5-P4-5.1-Debian <<>> www.google.com @169.254.0.1

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 44703

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.google.com. IN A

;; ANSWER SECTION:

www.google.com. 857 IN A 216.58.208.100

;; Query time: 1 msec

;; SERVER: 169.254.0.1#53(169.254.0.1)

;; WHEN: Thu Dec 05 12:06:18 GMT 2019

;; MSG SIZE rcvd: 59

Interestingly, we get different responses depending on whether we are hitting BIND (ns-01) or Unbound (ns-02). They are using different forwarders, which would explain it.

How to prove that traffic is going to ns-01 or ns-02? tcpdump of course!

ns-01

$ tcpdump -i eth2 port 53

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth2, link-type EN10MB (Ethernet), capture size 262144 bytes

12:08:55.596445 IP 192.168.2.10.33692 > 169.254.0.1.domain: 6718+ [1au] A? www.google.com. (55)

12:08:55.609152 IP 169.254.0.1.domain > 192.168.2.10.33692: 6718 1/13/1 A 172.217.17.100 (298)

ns-02

$ tcpdump -i eth2 port 53

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth2, link-type EN10MB (Ethernet), capture size 262144 bytes

12:08:57.377725 IP 192.168.3.10.42406 > 169.254.0.1.domain: 55171+ [1au] A? www.google.com. (55)

12:08:57.392048 IP 169.254.0.1.domain > 192.168.3.10.42406: 55171 1/0/1 A 216.58.208.100 (59)

So as we can see, client-01 (which is in the 192.168.2.0/24 subnet) is getting a response from ns-01, whereas client-02 is getting a response from ns-02. The destination address of the requests is 169.254.0.1, but vyos-01 and vyos-02 have different routes for the IP address, therefore they arrive on different servers.

What if one server goes away?

We have already seen that DNS queries are being routed to the closest nameserver. In our scenario, this means that queries travel from the Client, to its connected router, and then to the nameserver connected to the same router.

What happens if, say, the BGP peering to ns-01 failed, or the server itself failed? Let’s see!

ns-01

$ shutdown -h now

Now let’s check the routing table on vyos-01: -

vyos-01

vyos@vyos-01:~$ show ip route 169.254.0.1

Routing entry for 169.254.0.1/32

Known via "bgp", distance 200, metric 0, best

Last update 00:00:27 ago

10.21.2.3 (recursive)

* 10.21.1.1, via eth2

Now vyos-01 thinks that 169.254.0.1 is available via vyos-02. Let’s run another packet capture on ns-02, and see if DNS queries from client-01 and client-02 reach it: -

ns-02

$ sudo tcpdump -i eth2 port 53

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth2, link-type EN10MB (Ethernet), capture size 262144 bytes

12:31:12.245274 IP 192.168.2.10.51313 > 169.254.0.1.domain: 60437+ [1au] A? www.google.com. (55)

12:31:12.245449 IP 169.254.0.1.domain > 192.168.2.10.51313: 60437 1/0/1 A 172.217.169.36 (59)

12:31:14.158140 IP 192.168.3.10.54927 > 169.254.0.1.domain: 40789+ [1au] A? www.google.com. (55)

12:31:14.158233 IP 169.254.0.1.domain > 192.168.3.10.54927: 40789 1/0/1 A 172.217.169.36 (59)

Success! We no longer have to wait for DNS queries to time out against the first nameserver the client attempts. Instead, they are routed to the next closest server.
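The failover seen on vyos-01 can be sketched in a few lines of Python. This is a heavily simplified model (real BGP best-path selection has many more steps): it only compares the administrative distances shown in the VyOS output, 20 for the route learned directly from ns-01 and 200 for the one learned via vyos-02. The class and function names are my own.

```python
from dataclasses import dataclass
from typing import List, Optional

EBGP_DISTANCE = 20    # "distance 20" in the VyOS output (learned from a nameserver)
IBGP_DISTANCE = 200   # "distance 200" in the VyOS output (learned from the other router)


@dataclass(frozen=True)
class Route:
    prefix: str
    next_hop: str
    distance: int


def best_route(candidates: List[Route]) -> Optional[Route]:
    """Pick the candidate with the lowest distance, or None if there are none."""
    return min(candidates, key=lambda r: r.distance, default=None)


direct = Route("169.254.0.1/32", "10.21.2.1", EBGP_DISTANCE)      # from ns-01
via_vyos02 = Route("169.254.0.1/32", "10.21.2.3", IBGP_DISTANCE)  # ns-02, via vyos-02

print(best_route([direct, via_vyos02]).next_hop)  # 10.21.2.1 (ns-01 is up)
print(best_route([via_vyos02]).next_hop)          # 10.21.2.3 (ns-01 is gone)
```

Once ns-01’s advertisement disappears, the only remaining candidate is the route towards ns-02, which is what the routing table above shows.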

What happens if the DNS software stops working?

Rather than shutting down the server, this time we will just take down BIND on ns-01

ns-01

$ sudo systemctl stop bind9

Let’s test from client-01 and client-02

client-01

$ dig www.google.com @169.254.0.1

; <<>> DiG 9.11.5-P4-5.1-Debian <<>> www.google.com @169.254.0.1

;; global options: +cmd

;; connection timed out; no servers could be reached

Well that isn’t good.

client-02

$ dig www.google.com @169.254.0.1

; <<>> DiG 9.11.5-P4-5.1-Debian <<>> www.google.com @169.254.0.1

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 24561

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.google.com. IN A

;; ANSWER SECTION:

www.google.com. 532 IN A 172.217.169.36

;; Query time: 1 msec

;; SERVER: 169.254.0.1#53(169.254.0.1)

;; WHEN: Thu Dec 05 12:35:29 GMT 2019

;; MSG SIZE rcvd: 59

client-02 still works though. Why is this?

FRR is a routing daemon, and is used to provide routing updates from servers (or Linux-based network hardware). It does not track the state of the applications running, or whether they are healthy. This isn’t a limitation of FRR, but merely what FRR is designed to do (or where you would typically use it).

If you are using FRR to provide connectivity to a machine over several Layer 3 links (rather than using LACP/bonded interfaces), FRR would shine here. It also can be used to provide unnumbered neighbour relationships, but this is a topic for another day.

How do we track the DNS software?

One of the best examples of a routing daemon that can also react to the application state is ExaBGP, written by Exa Networks.

What ExaBGP does is run a script as a helper process, and act on what that script writes to STDOUT. This script could be a BASH one-liner, or it could be a full application that checks an API for responses, or anything in between.

It has an inbuilt healthcheck tool (useful for BASH one-liners) or you can check the results of STDOUT on running some form of script.

ExaBGP is written in Python, and can be installed using PIP: -

sudo pip3 install exabgp

Collecting exabgp

Downloading https://files.pythonhosted.org/packages/cf/34/41fc2017d6e61038079738dda32509dc40538f383489c84976807b4834ab/exabgp-4.1.2-py3-none-any.whl (557kB)

100% |████████████████████████████████| 563kB 2.7MB/s

Installing collected packages: exabgp

Successfully installed exabgp-4.1.2

First, I create a script to check the DNS response from the local server: -

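#!/bin/bash

# Repeatedly check DNS on the Anycast IP and tell ExaBGP what to do

while true; do

# dig exits with a non-zero status if no reply was received

/usr/bin/dig yetiops.net @169.254.0.1 > /dev/null;

if [[ $? != 0 ]]; then

echo "withdraw route 169.254.0.1 next-hop 10.21.2.1"

else

echo "announce route 169.254.0.1 next-hop 10.21.2.1"

fi

# Pause briefly so the check does not run in a tight loop

sleep 1

done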

We are checking the exit status of the dig command, and if it is anything other than 0, then we withdraw the route. If the command succeeds (i.e. an exit status of 0), then we announce the route.
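Since ExaBGP is happy to run any executable, the same check could also be written in Python. The sketch below is my own variation rather than what I ran in the lab: it uses the same dig command and the same announce/withdraw lines as the BASH script, but only emits a command when the health state changes. The function names and the `rounds`/`interval` parameters are assumptions made for illustration and testing.

```python
import subprocess
import sys
import time
from typing import Callable, Optional

ANYCAST_IP = "169.254.0.1"
NEXT_HOP = "10.21.2.1"  # ns-01's physical address


def dns_ok() -> bool:
    """Run dig against the Anycast IP; exit status 0 means a reply was received."""
    result = subprocess.run(
        ["/usr/bin/dig", "yetiops.net", f"@{ANYCAST_IP}"],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def command_for(healthy: bool) -> str:
    """Build the text command that ExaBGP reads from the process."""
    action = "announce" if healthy else "withdraw"
    return f"{action} route {ANYCAST_IP} next-hop {NEXT_HOP}"


def emit(line: str) -> None:
    """Write one command to STDOUT and flush so it is not left in a buffer."""
    sys.stdout.write(line + "\n")
    sys.stdout.flush()


def run(check: Callable[[], bool] = dns_ok,
        send: Callable[[str], None] = emit,
        rounds: Optional[int] = None,
        interval: float = 1.0) -> None:
    """Check repeatedly; send a command only when the health state changes."""
    last: Optional[bool] = None
    done = 0
    while rounds is None or done < rounds:
        healthy = check()
        if healthy != last:
            send(command_for(healthy))
            last = healthy
        done += 1
        time.sleep(interval)
```

Calling run() with no arguments loops forever, which is what a process started by ExaBGP would do. Passing a fake `check` and a small `rounds` value makes the logic easy to test without a DNS server.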

The ExaBGP configuration looks like the below: -

process announce-routes {

run /etc/exabgp/dns-check.sh;

encoder text;

}

neighbor 10.21.2.0 {

local-address 10.21.2.1;

local-as 64520;

peer-as 4290001234;

api {

processes [ announce-routes ];

}

}

So we are running a BGP peering session to 10.21.2.0 (i.e. vyos-01) from 10.21.2.1 (i.e. ns-01), and then running a process. The process in question is the script created previously. ExaBGP takes the output from it, and turns it into BGP messages.

In this case, we are doing simple route announcement and withdrawal (with a next-hop set). However, you could also add other parameters like Local Preference or MED (Multi-Exit Discriminator), extend the AS-Path, or apply BGP Communities. All of this is beyond the scope of this article (I’ll probably do a bit of a BGP deep dive in a future post).

To ensure ExaBGP runs as a service, the following systemd unit file was created: -

[Unit]

Description=ExaBGP

After=network.target

ConditionPathExists=/etc/exabgp/exabgp.conf

[Service]

Environment=exabgp_daemon_daemonize=false

Environment=ETC=/etc

ExecStart=/usr/local/bin/exabgp /etc/exabgp/exabgp.conf

ExecReload=/bin/kill -USR1 $MAINPID

[Install]

WantedBy=multi-user.target

So now let’s follow the same process as before.

Verification

Check routing

vyos-01

vyos@vyos-01:~$ show ip route 169.254.0.1

Routing entry for 169.254.0.1/32

Known via "bgp", distance 20, metric 0, best

Last update 00:00:17 ago

* 10.21.2.1, via eth3

vyos-02

vyos@vyos-02:~$ show ip route 169.254.0.1

Routing entry for 169.254.0.1/32

Known via "bgp", distance 20, metric 0, best

Last update 00:00:17 ago

* 10.21.2.3, via eth3

Packet captures

ns-01

$ sudo tcpdump -i eth2 port 53

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth2, link-type EN10MB (Ethernet), capture size 262144 bytes

13:26:28.835140 IP 192.168.2.10.57147 > 169.254.0.1.domain: 11108+ [1au] A? www.google.com. (55)

13:26:28.835546 IP 169.254.0.1.domain > 192.168.2.10.57147: 11108 1/13/1 A 172.217.19.196 (298)

ns-02

$ sudo tcpdump -i eth2 port 53

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth2, link-type EN10MB (Ethernet), capture size 262144 bytes

13:26:30.652179 IP 192.168.3.10.43694 > 169.254.0.1.domain: 52311+ [1au] A? www.google.com. (55)

13:26:30.672239 IP 169.254.0.1.domain > 192.168.3.10.43694: 52311 1/0/1 A 172.217.20.100 (59)

Taking down BIND

Let’s take down BIND, and see if the routing changes at all: -

ns-01

$ sudo systemctl stop bind9

vyos-01

vyos@vyos-01:~$ show ip route 169.254.0.1

Routing entry for 169.254.0.1/32

Known via "bgp", distance 200, metric 0, best

Last update 00:00:25 ago

10.21.2.3 (recursive)

* 10.21.1.1, via eth2

Oh! It changed. Let’s see what ExaBGP had to say: -

ns-01

$ sudo journalctl -xeu exabgp

Dec 05 13:32:04 ns-01 exabgp[13277]: 13:32:04 | 13277 | api | route added to neighbor 10.21.2.0 local-ip 10.21.2.1 local-as 64520 peer-as 4290001234 router-id 10.21.2.1 family-allowed in-open : 169.254.0.1/32 next-hop 10.21.2.1

Dec 05 13:32:24 ns-01 exabgp[13277]: 13:32:24 | 13277 | api | route removed from neighbor 10.21.2.0 local-ip 10.21.2.1 local-as 64520 peer-as 4290001234 router-id 10.21.2.1 family-allowed in-open : 169.254.0.1/32 next-hop 10.21.2.1

And let’s prove it with a packet capture: -

ns-02

$ sudo tcpdump -i eth2 port 53

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth2, link-type EN10MB (Ethernet), capture size 262144 bytes

13:36:02.930962 IP 192.168.2.10.42220 > 169.254.0.1.domain: 2489+ [1au] A? www.google.com. (55)

13:36:02.931810 IP 169.254.0.1.domain > 192.168.2.10.42220: 2489 1/0/1 A 172.217.20.100 (59)

13:36:04.285632 IP 192.168.3.10.56140 > 169.254.0.1.domain: 57423+ [1au] A? www.google.com. (55)

13:36:04.285944 IP 169.254.0.1.domain > 192.168.3.10.56140: 57423 1/0/1 A 172.217.20.100 (59)

There we go, both clients made it!

Summary

There is a lot to process here, especially if you are new to BGP and Anycast. The main things to take away from it though are: -

- Anycast is just an IP that exists in multiple places

- It is not from a reserved range or anything similar

- Using a routing daemon (e.g. FRR) directly on a server is preferable to make it work

- Failover at a basic level can be achieved quite easily (i.e. server failure)

- To track application state, you need to look at something like ExaBGP

Hopefully this will help in understanding, and getting people to play with Anycast more. It can be used for just about anything you want to make highly available. UDP applications work best (due to their connectionless nature), but it is quite possible to use this for TCP. I have seen ExaBGP used to make a RabbitMQ cluster anycast, rather than using DNS or other forms of service discovery.